The most effective uses of a new technology aren’t always clear. It takes time and lots of trial-and-error for people to converge on successful applications—many of which weren’t even anticipated by the original creators of the technology.

ChatGPT was released in late 2022. Since then, a number of articles have been published describing how best to use this tool. Yet despite relying extensively on GPT (and other Large Language Models) as research subjects, it took a while for me to actually adopt ChatGPT as a tool.

More recently, I’ve benefitted from ChatGPT in a few different ways. The aim of this article is to describe those uses on the off-chance that readers haven’t considered them. Note that I’ll be focusing on ChatGPT specifically, i.e., the chat-bot interface, and not the use of GPT-3 or GPT-4 through OpenAI’s Python API.

ChatGPT as an information-seeking tool

“Information seeking” refers to the process of trying to obtain information about a topic.

That’s a broad definition, so I think it’ll help to focus on a few specific examples.

Learning about a new research domain

Recently, I’ve been interested in learning more about cooking as it relates to human cognition, biology, and culture. Going in, I had this rough idea in my head: what if cognitive scientists studied cuisines the same way we study languages? This could involve identifying structural similarities or “universals” across the world’s foods, analyzing evolutionary histories and the role of cultural diffusion, asking when cooking began, relating culinary features to core metabolic or physiological constraints, and so on.

Ultimately, I thought it’d be interesting to conduct original research on this topic, but to get started, I needed to learn what information was already out there. In my experience, this is one of the most challenging parts of research. You have some ideas in your head, but you don’t know what’s already known—and you’re not sure which “search terms” would be most effective in finding it out.

Before I describe how ChatGPT can help with this, it’s instructive to consider how I’d go about this process normally. Generally, when I want to know about a new domain, I tackle the problem in at least two different ways (listed in roughly the order I’d typically follow):

Using Google Search or Google Scholar to identify web-pages or academic articles with related terms (e.g., “cognitive science culinary history”, “culinary universals”, “evolution of cuisine”); once I identify a relevant academic article, I scour its references to find more relevant research in this area.

Identifying an expert in that domain, or a journalist who has written extensively about the area, and asking them questions.

Of these, the latter strategy is generally the most ideal. Human beings, after all, are pretty good at communicating with each other, and the nature of interactive communication allows for more dynamism and flexibility in the information-seeking process. I can explain in broad terms what I’m interested in; the expert can answer my question and/or suggest relevant reading; I can indicate whether the suggested reading is relevant; if their suggestion isn’t relevant, we can iterate on this process until we understand one another.

Of course, if I’m new to a domain, then it’s unlikely I know who the relevant experts are. Thus, the first strategy is often necessary. But while it may seem faster to look something up on Google Scholar, it often involves a lot more work and time. For one, you’ve got to figure out the appropriate “keywords” for the domain you’re interested in. This is, in my experience, not a very intuitive process; academic fields tend to converge on their own idiosyncratic jargon. Additionally, sometimes there’s no academic discipline that fits your research question perfectly, but it’s necessary to cobble together bits and pieces of information from disparate fields. Finally, Google Scholar and Google Search are both document retrieval technologies: you type in a search query, and a series of documents are returned, typically ranked in terms of relevance. But in order to assess their relevance, you’ve got to actually read the documents. This is ultimately essential, but it does take time, and at the early stages of research results in reading lots of irrelevant articles.

This is where ChatGPT comes in. I asked ChatGPT the following question:

I'm interested in the cognitive science of cuisine. Specifically, I'd like to apply some of the frameworks used in studying the cultural evolution of language to study cuisine—how cuisines evolve, how physiology operates as a constraint around cuisine, and so on. Are there good references for this?

ChatGPT went on to recommend a number of books and references, including: Cuisine and Empire (by Rachel Laudan), a book I’d actually heard of before but since forgotten; Neurogastronomy (by Gordon Shepherd); and Cooking, Cuisine, and Class (by Jack Goody). I looked up the descriptions of each book and figured out that Cuisine and Empire was probably most relevant to my overall interests, but that Neurogastronomy would also be useful—so I added both to my reading list.

Independently, I’d been conducting a literature search on Google Scholar, and I’d found a really interesting article called “An evolutionary theory of cuisine” by Solomon Katz. The article was right up my alley, and I wanted to find other articles like it. I asked ChatGPT for help, and it suggested a number of related books, including Catching Fire (by Richard Wrangham). I’m now reading Catching Fire, and it’s exactly what I was looking for. Additionally, because Richard Wrangham is an expert in this area, I’ve looked up his academic research and have benefitted from reading several of his published articles.

I also wanted some more suggestions for research articles, so I asked:

Thanks! Can you recommend several academic papers in particular looking at the bio-cultural interface and how cuisine and cooking technologies evolve to manage basic nutritional needs?

ChatGPT also recommended a few more research articles:

Wrangham, Richard, et al. "Cooking as a biological trait." Comparative Biochemistry and Physiology-Part A: Molecular & Integrative Physiology 136.1 (2003): 35-46. This seminal paper explores the hypothesis that the use of fire for cooking could be a distinctive biological characteristic of Homo sapiens, having significant evolutionary implications in terms of energy availability and allocation.

Carmody, Rachel N., and Richard W. Wrangham. "The energetic significance of cooking." Journal of human evolution 57.4 (2009): 379-391. This paper continues the exploration of cooking's evolutionary importance, focusing on its impact on energy availability from food.

Crittenden, Alyssa N., and Stephanie L. Schnorr. "Current views on hunter-gatherer nutrition and the evolution of the human diet." American Journal of Physical Anthropology 162 (2017): 84-109. This review paper provides an overview of research into the diets of modern hunter-gatherer societies, with implications for understanding the evolution of the human diet.

Note that Wrangham appears as a co-author on two of those papers! I’ve since read the first two, and the third is on my docket.

Now, just a couple of weeks since I first asked ChatGPT for help, I feel like I have a better handle on this research field. I don’t know the answers to all my questions, and I’m obviously not an expert, but importantly, I have a better sense of where to look. It’s possible I would’ve gotten here eventually without the use of ChatGPT, but I think it helped me get here faster.

It’s also worth emphasizing that ChatGPT didn’t replace the approaches I used to rely on. It was most helpful in the initial research stage, i.e., figuring how to even ask the question I wanted to ask. From there, I could seek out and read relevant research articles, figure out new questions to ask, and so on.

The other thing that’s worth noting is that ChatGPT does occasionally generate fake articles or books. In my own experience, this is less common with GPT-4 than GPT-3, and I don’t think the frequency is a huge problem—after all, humans get things wrong too, possibly at a higher rate!—but it’s something to bear in mind. Interestingly, I’ve found that even when it’s wrong, there’s sometimes still information there; for example, perhaps the suggested research article doesn’t exist, but the authors do, and have written related articles.

Asking for author recommendations

I’m a big fan of reading plays. I love the plays of Annie Baker in particular, but I also really enjoy the work of Eugene O’Neill, Dominique Morisseau, Henrik Ibsen, and others.

As I’ve written before, obtaining recommendations (whether for music, films, books, or plays) is a hard problem. This is the central task of recommender systems, which typically work by:

looking at which things you say you like;

identifying people who say they like similar things;

suggesting other things those people like but that you haven’t seen yet.

This works reasonably well for some purposes, but one problem is that automated recommender systems don’t explain why they’re recommending something. Some of them do indicate what their recommendation is based on (“Because you liked X…”), but it’s not clear what similarities there are between X (the thing you liked) and Y (the thing they’re recommending).

I was curious whether ChatGPT could help, so I asked:

Can you recommend 3 modern playwrights whom I'd like if I like Annie Baker or August Wilson?

ChatGPT suggested Dominique Morisseau, Lynn Nottage, and Branden Jacobs-Jenkins. I noted that I like Morisseau but that I was looking for something more like Annie Baker in particular:

Are these similar to Annie Baker? I'd like more in those vein—somewhat funny, with realistic depictions of human interactions, that also aren't too didactic.

ChatGPT responded with these suggestions:

Stephen Karam: Karam's play "The Humans" won the Tony Award for Best Play in 2016 and is a great example of his knack for capturing real, intergenerational family dynamics, layered with humor and tenderness. His work tends to delve into the complexities and anxieties of modern life with realism and humor, much like Baker's.

Sarah Ruhl: Ruhl's works often blend the mundane with the mythic, and she uses humor to explore complex themes. Her play "In the Next Room, or the vibrator play" was a finalist for the Pulitzer Prize in 2010. She has a unique voice that combines realism and whimsy in a way that you might appreciate if you like Baker's work.

Will Eno: Eno's writing, such as in "Thom Pain (based on nothing)" and "The Realistic Joneses," features everyday life with an absurdist twist. He is known for his distinctive voice, wry humor, and exploration of big themes through mundane events. He might appeal to you if you appreciate Baker's humorous and philosophical examination of everyday life.

Amy Herzog: Herzog's plays, like "4000 Miles" and "Belleville," are known for their realistic characters and dialogue. Her work often explores personal relationships and generational gaps with subtlety, emotional depth, and humor.

I’d already seen the film adaptation of The Humans (and the play was on my reading list), but I’d never heard of Sarah Ruhl. I looked her up, ordered a compilation of her plays, and read them the following week. I liked them! Overall I’d consider this a win for ChatGPT.

Again, as with learning about a new research domain, I don’t think ChatGPT can really replace alternative mechanisms for obtaining recommendations. I continue to think modern recommender systems (and ChatGPT) underweight the value of serendipity in discovery and experience. I also continue to think there’s an important role for curation and human expertise—I’ll always value the recommendation of a close and trusted friend over an automated system. But that’s not always an option, and ChatGPT helps fill the gap.

ChatGPT as a reading companion

Another interesting and surprising (at least to me) use case of ChatGPT is what I think of as a “reading companion”: a tool that can help elaborate on and provide context for material you’re reading.

I’m not the first person to note this; Tyler Cowen mentioned it in his blog a few months ago. As he points out, it’s related to his other idea of “reading in clusters”. Reading in clusters—i.e., reading multiple books and articles on the same topic in sequence—is helpful for building out an actual knowledge base for a given topic. It’s very easy to forget everything you’ve read from a single book, but reading multiple books helps consolidate that information.

Using ChatGPT as a reading companion functions in a similar way. I’ve been trying this as I read Catching Fire by Richard Wrangham. Specifically, when I come across a topic that I don’t feel I fully understand—or which simply isn’t clear in the text—I try asking ChatGPT to explain it or give further context. This relates to a broader strategy I’m trying to inculcate of asking questions while reading.

For example, after reading about the role of cooking in starch gelatinization, I asked for more details. ChatGPT responded with a step-by-step description of the process:

When a starch granule is heated in water, it begins to absorb the liquid. The heat and water disrupt the molecular structure of the starch, causing the long, semicrystalline amylose and amylopectin molecules that it's composed of to break down.

This breakdown causes the starch granules to swell, and they can expand to many times their original size. This is why a small amount of starch can thicken a large amount of liquid.

As the temperature increases, the granules continue to swell until they reach their maximum capacity and then rupture, releasing amylose molecules into the surrounding liquid.

The released amylose forms a network that traps water and creates a gel, a process known as "gelation". This thickens the liquid, creating the characteristic texture of sauces, gravies, pie fillings, and pudding.

Finally, if the mixture is cooled, the gel can set into a more solid structure, as the amylose molecules form new bonds with each other.

Notably, most of this information was also included in Catching Fire. It’s not like it was entirely new. But some combination of thinking to ask the question in the first and reading a different (though overlapping) description of it on ChatGPT helped me understand the process better. Additionally, ChatGPT’s description pointed out that this is why adding starch can thicken a sauce (see the bolded portion). I asked for clarification on this point, e.g., regarding cheese sauces, and ChatGPT noted that this is the basis of sauces relying on a roux.

Of course, I could’ve gotten this information from Wikipedia as well. There’s a whole page on starch gelatinization! Wikipedia’s also (probably) more likely to be accurate. The downside to Wikipedia is that the information isn’t always as immediately accessible—there’s lots more of it, for one, and it’s not always at the appropriate level of abstraction for the question I’m interested in.

Again, this is where I’d emphasize that ChatGPT is by no means a replacement for other methods of supplementing your reading. An encyclopedia is always going to be useful for this, as will reading additional books and articles on a topic. But the ability of ChatGPT to answer specific, targeted questions does make it (in my view) a useful addition—perhaps primarily because it encourages us to ask those questions while reading in the first place.

ChatGPT as a programming resource

The use of ChatGPT and other LLMs for programming has already been written about extensively. Despite this, I was still surprised by how helpful ChatGPT has been for solving questions that come up in the course of programming.

ChatGPT helps write code

Sometimes, ChatGPT helps by generating code.

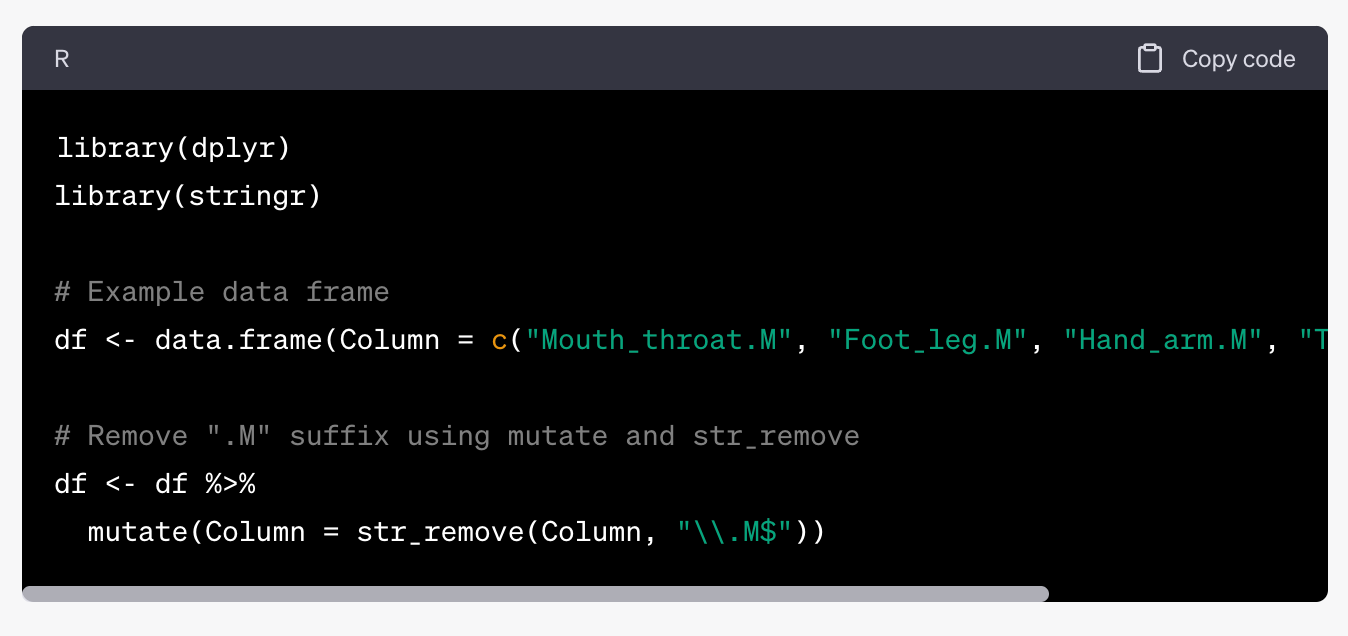

For example, I wanted to modify the values in a specific column of a DataFrame in R:

Me: I have a column in R with the following value names: Mouth_throat.M, Foot_leg.M, Hand_arm.M, Torso.M, Head.M How do I strip the .M from the strings using mutate?

ChatGPT responded with an explanation in text, as well as with R code actually implementing its suggestion:

Sure enough, this worked—and I learned about the str_remove function in R.

Another example came up when I wanted to save figures I’d generated from an .Rmd file. Strangely enough, despite having used R for over five years, I’ve never really settled on an “ideal” strategy for saving figures. I’d typically just write code using ggsave to save specific figures to a “Figures” directory, but this was annoying to write and sometimes caused problems of its own. ChatGPT told me how to modify the YAML metadata of my .Rmd file so each figure I generated would be saved separately.

ChatGPT answers questions about statistics…

Finally, ChatGPT helped me figure out a question relating to statistics. Specifically, I wanted to ask whether two coefficient values from two separate linear regressions were significantly different. I tried asking this on Google Search and couldn’t find anything immediately, so I asked ChatGPT:

Me: I have two coefficient estimates, each with a standard error, from separate models. Is there a way to check whether these estimates are significantly different from *each other*, i.e., where the null hypothesis is they were drawn from the same sampling distribution? For example, say one coefficient is 1, and the other is 1.2. They each have a standard error. Would I run something like a t-test—and how would I do this?

I was most interested in how, if I was to run a t-test, the standard error term would be calculated. ChatGPT suggested the following method (along with code implementing its suggestion):

ChatGPT: The test statistic (t-value) is calculated as the difference between the estimates divided by the square root of the sum of the squared standard errors.

I’d never compared coefficients this way before, so I wanted to find out more information. Interestingly, this is where ChatGPT went off the rails.

…though sometimes digs itself into a hole of apologies

I asked for clarification:

Me: Why do you divide by the square root of the sum of the squared standard errors?

…and for some reason it started apologizing!

ChatGPT: Apologies for the confusion in my previous response. I made an error in the calculation. When comparing two coefficient estimates with their standard errors, you should divide the difference of the estimates by the standard error of the difference, not the square root of the sum of the squared standard errors.

Yet strangely, the code it went on to generate was exactly the same as the code it’d generated before. And even its explanation referenced the calculation I was asking about:

ChatGPT: In this corrected code, the standard error of the difference (

se_diff) is calculated as the square root of the sum of the squared standard errors.

I wasn’t sure what this “standard error of the difference” term meant, so I asked:

Me: What is this standard error of the difference?

It apologized again:

ChatGPT: I apologize for the confusion caused by my previous responses. I misspoke regarding the "standard error of the difference." There is no direct concept of the "standard error of the difference" for comparing two coefficient estimates from separate models.

…and once more, went on to suggest the exact same calculation.

Things continued in this vein for some time. I would ask for clarification, ChatGPT would apologize for its error and then proceed to say the exact same thing, and so on. Eventually I gave up and looked up this formula on Google Search. I found a result on Stats StackExchange, which led me to a published academic paper, which ultimately implemented the exact calculation ChatGPT was suggesting.

Ultimately, I might’ve spent more time trying to figure out what ChatGPT was saying than it would’ve taken if I’d just done a more thorough Google Search from the beginning. I’m also not really sure why ChatGPT entered this “apologetic mode” while simultaneously doubling down on its suggestion. Perhaps my question was tagged as accusatory, and its RLHF training activated the “apology” response? It’d be interesting to run a study to determine how ChatGPT responds to different kinds of clarification questions—those that seem more accusatory (“why would you suggest this”), and those that don’t (“thanks for the suggestion! can you provide some more information…”).

Not yet transformative, but definitely helpful

None of these use cases feel transformative, though they are definitely helpful. In almost every case (except the last one), ChatGPT has improved my experience of some task, whether it’s trying to find out information about a research topic or solve a programming problem. Notably, each of these use cases also functions best as a complement to existing tools.

That also doesn’t entail that GPT or future LLMs won’t be transformative. There are a couple reasons why:

I’ve only been using ChatGPT for a couple months; it’s possible I haven’t identified those transformative use cases yet.

Future versions of ChatGPT or other LLMS may unlock more powerful affordances.

ChatGPT reflects only a fraction of what GPT can be used for. The use cases I’ve been most excited about typically involve creative ways of plugging GPT or other LLMs into some kind of larger software architecture, e.g., using the Python OpenAI API. There may just be a limit to how transformative a chat-bot interface can be, but that doesn’t imply a limit on the underlying LLM technology that’s foundational to the interface.

Of course, the usual cautionary notes apply. ChatGPT sometimes makes things up and its programming “solutions” don’t always work. But that’s (again) why I’ve tried to emphasize throughout that I don’t view ChatGPT as a substitute for other tools, at least for these uses. It’s (usually) worthwhile reading books and research articles yourself—and you should certainly still ask your friends for book recommendations.