Large Language Models (LLMs) like GPT-4 are hard to understand, even for the people who build them; hence the term “black box”.

This fact is surprising to newcomers to the field. Surely, the engineers at OpenAI, Google, and other companies must know how their products work. Isn’t it necessary to understand something before we can build it?

But deep understanding isn’t always a prerequisite to building something that functions at least some of the time. The history of medicine is full of examples: precursors to smallpox vaccination, such as “variolation”, were widely practiced throughout China and elsewhere long before the mechanisms were fully understood. Sometimes all that’s required is a loose “structural alignment” between one’s folk model of how something works and how it actually works.

The danger of this incomplete understanding is, of course, the chance of misalignment. If a poorly understood tool works 99% of the time, but fails horribly and unpredictably the other 1% of the time, then it behooves the users of the tool to figure out how and why failure arises. And figuring out “how and why” is part of understanding.

This is the goal of LLM-ology. LLMs are seeing increasingly prevalent usage, but there’s a lot about their behavior and performance that we don’t fully understand. This raises the chance that some LLMs will fail in unpredictable ways—and failure has a long tail of destructive outcomes.

The latest effort in LLM-ology is work by OpenAI using GPT-4 (their latest LLM) to explain GPT-2, an earlier (and smaller) “generation” of LLM. The underlying premise of this work is that perhaps one black box can illuminate another.

Looking inside another black box

Before LLMs hit the public consciousness, much of the Artificial Intelligence community was focused on vision models.

Like LLMs, vision models tend to be neural networks. Typically, they are trained either to classify an image (e.g., “a squirrel”) or, more recently, to produce an image from a text (as with DALL-E or Midjourney).

Also like LLMs, these vision models are hard to understand, and despite excellent performance overall, they sometimes fail in unexpected ways. For example, their behavior can be modified through the use of “adversarial attacks”. These attacks involve modifying the pixels in an image in a way that’s (usually) imperceptible to humans, but systematically changes a model’s interpretation of the image. The figure below (taken from Machado et al., 2020) showcases two examples.

This problem has some pretty obvious and frightening implications. If a computer vision model can be fooled in this way, then systems that rely on these models—like self-driving cars, or face recognition—can be manipulated by a bad actor, with potentially tragic results. Consider, for example, the possibility of “masking” a stop sign with an imperceptible (to humans) array of pixels that nonetheless render it invisible—or worse, a 60mph sign—to a self-driving car.
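To make the idea concrete, here is a minimal sketch of an adversarial perturbation against a toy linear classifier. Everything in it is an assumption for illustration: the random weights, the two labels, and the fast-gradient-sign-style update are stand-ins, not the attacks or models shown in the figure. The point is only that a tiny, bounded change to every pixel can flip the label.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical linear "classifier": a positive score means "stop sign".
n_pixels = 28 * 28
w = rng.normal(size=n_pixels)

def predict(image):
    return "stop sign" if image @ w > 0 else "speed limit sign"

# Build an image that the toy classifier labels "stop sign" with score 5.0.
image = rng.uniform(0.2, 0.8, size=n_pixels)
image += (5.0 - image @ w) / (w @ w) * w

# For a linear score, the gradient with respect to the image is just w.
# Moving every pixel by epsilon against sign(w) lowers the score as much
# as possible for a given maximum per-pixel change.
epsilon = 0.02
adversarial = image - epsilon * np.sign(w)

print("original prediction:   ", predict(image))
print("adversarial prediction:", predict(adversarial))
print("largest pixel change:  ", np.max(np.abs(adversarial - image)))
```

Real attacks target deep networks, where the gradient has to be computed by backpropagation, but the logic of the update is the same.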

Fortunately, however, researchers have been making good progress on understanding these attacks, as well as how vision models work more generally.

Some of the most interesting work in this domain (in my opinion) comes from Chris Olah1 and others, who worked on peering inside these black boxes and publishing their analyses on a website called Distill.

An approach: feature visualization

Here, the basic research question is: what does this neuron do? There are many neurons in a neural network, and even more weights (or “parameters”) that connect them, and we’d like to understand each of their roles in terms of transforming input to output.

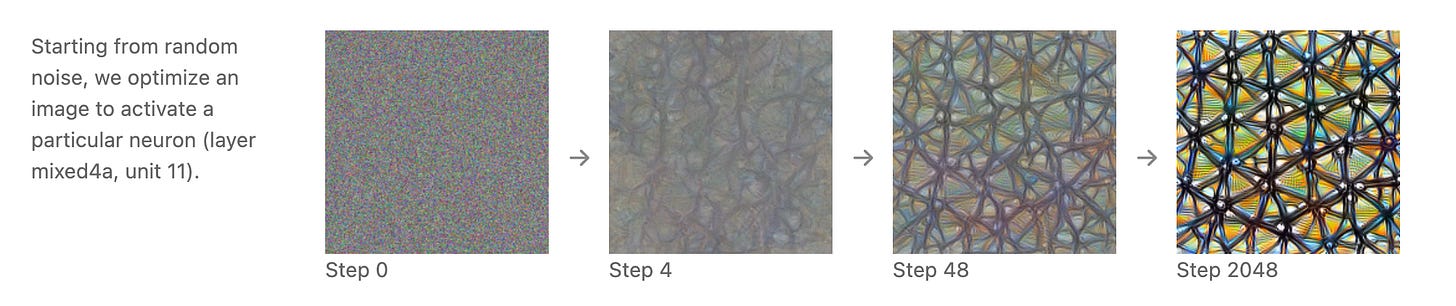

One strategy for understanding the function of individual neurons is called “feature visualization”.

The basic intuition is as follows:

Select a neuron of interest (let’s call it “Neuron X”).

Start with an input image of “noise”, i.e., pixels with random values.

Iteratively change the values of these pixels and see which changes result in more activation of Neuron X.

Continue this process until no more changes can be made, i.e., the image is fully “optimized” to activate Neuron X.

The resulting optimized image can be interpreted as a representation of which features Neuron X encodes.
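The steps above can be sketched in a few lines, with some heavy simplifications. The “neuron” here is a hand-built edge detector over 8x8 images (an assumption, not a neuron from any real vision model), and the optimization is plain gradient ascent with pixel values clipped to [0, 1]. Real feature visualization adds regularization and other tricks on top of this.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical "Neuron X": rewards brightness on the right half of the
# image and penalizes it on the left half (a crude vertical-edge detector).
weights = np.ones((8, 8))
weights[:, :4] = -1.0

def activation(image):
    return np.tanh((weights * image).sum() / 32.0)

def activation_gradient(image):
    # Chain rule: d tanh(u) / d image = (1 - tanh(u)^2) * du / d image
    return (1.0 - activation(image) ** 2) * weights / 32.0

# Start from noise.
image = rng.uniform(0.0, 1.0, size=(8, 8))
start = activation(image)

# Repeatedly nudge every pixel in the direction that raises the activation,
# keeping pixel values in the valid range.
for _ in range(500):
    image = np.clip(image + 0.5 * activation_gradient(image), 0.0, 1.0)

print(f"activation: {start:.3f} -> {activation(image):.3f}")
print(np.round(image).astype(int))  # dark left half, bright right half
```

The optimized image ends up as a dark-to-bright vertical edge, which is exactly the feature this toy neuron was built to detect.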

The actual implementation is a little more complicated than this (see their section called “The Enemies of Feature Visualization”).

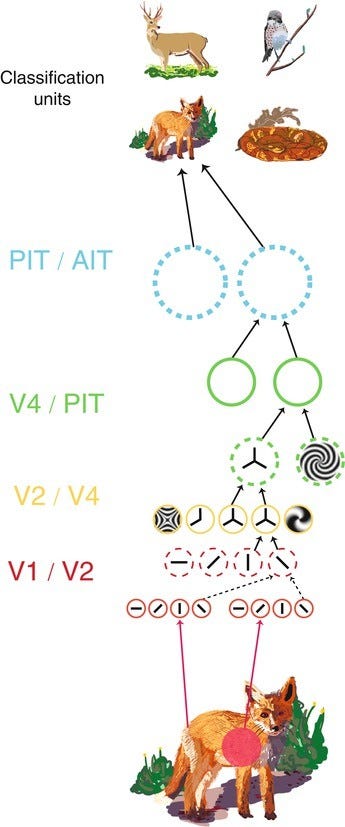

But as I understand it, this is the high-level idea. And it actually has a direct analogy in neurophysiology, which aims to understand the function of the nervous system. Using approaches like this and others, neurophysiology has uncovered a working model of how vision “works”; there’s now good evidence that earlier layers of our visual system encode simple features like the orientation of lines, while later layers begin to piece these features together.

An example of how this might work is depicted in the figure below; while it’s possible some of the details are wrong, the point is the gist—namely, that visual processing involves “constructing” an image by encoding simple features and then putting them together.

An insight: multimodal neurons

One of the insights gleaned from this feature visualization approach is the discovery of so-called “multimodal neurons”.

A multimodal neuron is defined as one that encodes specific concepts that abstract beyond the specific modality of the input: for example, a multimodal neuron should respond not just to a specific picture of Halle Berry, but to a drawing of Halle Berry and perhaps even the name “Halle Berry”.

This is, yet again, a concept adopted from research on biological visual processing. A famous 2005 paper argued for the existence of specific neurons that encoded concepts like “Jennifer Aniston”. This interpretation, though not without controversy, was very exciting because it suggested that scientists were beginning to crack the code of conceptual abstraction. From the paper:

These results suggest an invariant, sparse and explicit code, which might be important in the transformation of complex visual percepts into long-term and more abstract memories.

Using techniques like feature visualization, researchers looked for the existence of multimodal neurons in artificial visual networks, and found evidence consistent with this hypothesis.

I’m not going to describe the whole post in detail, but I really do recommend looking through it, as it’s quite fascinating. They describe neurons that respond systematically to content associated with specific people (e.g., Donald Trump), specific regions (e.g., Europe), and even specific fictional universes (e.g., the world of Pokémon).

A slow march towards understanding

There’s still a long way to go—and I doubt researchers in this area would ever claim we understand vision models.

But work in this vein does, in my view, move us towards that general direction. Techniques like feature visualization offer a specific, principled methodology for probing individual neurons and identifying more or less what they “do”. I’m hopeful that over time, we’ll come closer to a mechanistic understanding, which is crucial for predicting the behavior of these systems and avoiding potentially catastrophic outcomes.

What about Large Language Models?

A natural question, then, is whether we can apply these same techniques to LLMs like GPT-4. There are at least two challenges to doing so.

First, although both vision models and LLMs are neural networks, vision models tend to be much smaller. For context, GPT-3 has approximately 175B parameters. In contrast, some of the top-performing models on the ImageNet challenge2 have on the order of 240M parameters. Even DALL-E 2, one of the most impressive generative image models (it can produce images from natural language descriptions), has only ~3.5B parameters. This is a huge difference in the sheer number of neurons and neural weights that one has to understand.
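For a rough sense of scale, the ratios implied by these figures can be computed directly. The numbers below are the approximate counts quoted in this paragraph, nothing more precise.

```python
# Approximate parameter counts quoted in the text.
params = {
    "GPT-3": 175e9,
    "top ImageNet classifier": 240e6,
    "DALL-E 2": 3.5e9,
}

ratios = {
    name: params["GPT-3"] / count
    for name, count in params.items()
    if name != "GPT-3"
}

for name, ratio in ratios.items():
    print(f"GPT-3 has roughly {ratio:,.0f}x the parameters of {name}")
```

So GPT-3 is on the order of hundreds of times larger than a strong image classifier, and about fifty times larger than DALL-E 2.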

A second, more subtle problem has to do with the nature of the input. An image is a big matrix of pixels, and each pixel has a continuous value. This means that it’s relatively easy to modulate these values in the iterative way described above: you can turn a knob this way or that way and see how it affects the behavior of an individual neuron.

It’s less clear to me what the equivalent “knob” would be in language. Words, after all, are discrete; and the mapping from the form of a word to its meaning is, in large part, arbitrary. This means that simply modifying individual characters or portions of a token won’t necessarily have a predictable, systematic effect on the behavior of LLM neurons. A pixel can be made lighter or darker, or more or less red. But what is the analogous transformation of a word? Perhaps a “cat” is similar to a “dog”, but what transformations of the word itself will get you there?
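A small sketch of the contrast, using a made-up five-word vocabulary (an assumption for illustration; real tokenizers are far larger and split text differently). A pixel can be nudged by any small amount, but the nearest neighbors of a word, whether by token ID or by spelling, have little to do with meaning.

```python
# Continuous input: a small nudge gives another perfectly valid pixel value.
pixel = 0.40
brighter_pixel = pixel + 0.01

# Discrete input: token IDs are arbitrary labels in a hypothetical vocabulary.
vocab = ["cat", "cap", "dog", "tax", "kitten"]
token_id = vocab.index("cat")
neighbor_by_id = vocab[token_id + 1]  # adjacent ID, unrelated meaning

def edit_distance(a, b):
    """Levenshtein distance: minimum number of single-character edits."""
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, start=1):
        curr = [i]
        for j, cb in enumerate(b, start=1):
            curr.append(min(prev[j] + 1,                 # delete from a
                            curr[j - 1] + 1,             # insert into a
                            prev[j - 1] + (ca != cb)))   # substitute
        prev = curr
    return prev[-1]

print("next token ID after 'cat':", neighbor_by_id)
for other in ("cap", "dog", "kitten"):
    print(f"edits from 'cat' to '{other}':", edit_distance("cat", other))
```

By spelling, “cap” is one edit away from “cat”, while “dog” and “kitten” are much further, which is the opposite of how close they are in meaning.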

Combined, these challenges make LLM-ology a daunting enterprise, and suggest that we may need some clever new techniques.

From one black box to another

This is where the latest work by OpenAI comes in. The post in question is entitled:

Language models can explain neurons in language models.

In the sections below, I’ll explain the general intuitions behind OpenAI’s approach, then discuss some of the limitations—which, to their credit, they are quite open about. For more details, I recommend just reading the post itself.

Broadly, the ostensible benefit of this approach—to the extent that it works—is that it can be done at extremely large scales; this is critical for understanding LLMs, which, as I mentioned, are very large indeed.

How it works

The basic question here is: what does a given neuron do? As in, what kind of information does this neuron represent?

In the post, the authors focus on neurons in GPT-2, an earlier (and smaller) model.

The method for answering this question involves a few steps.

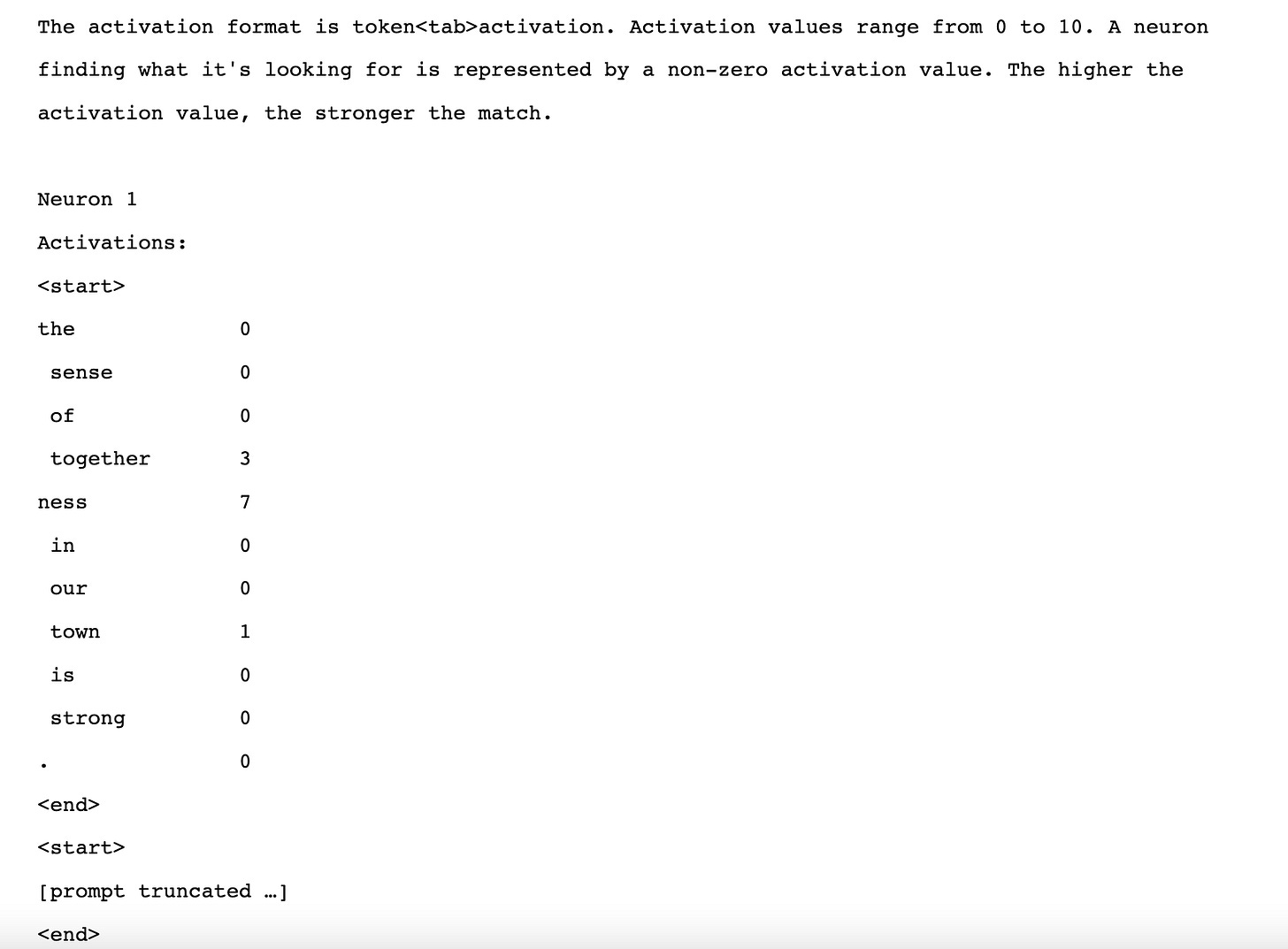

First, identify how a given GPT-2 neuron responds to different tokens in a paragraph of text.

Next, present these token/activation pairings as “data” to GPT-4, and ask GPT-4 to generate an explanation.

Using this explanation, simulate how that same neuron would respond to tokens from another text passage.

Finally, score the success of this simulation: how aligned are the predicted activations with the actual activations of that neuron?

Step 1 involves what the authors call the “subject model”: the model the researchers are trying to understand. In this analysis, GPT-2 is the subject model.

Step 2 involves the “explainer model”: the model the researchers are using to explain and understand the subject model. In this analysis, the researchers use GPT-4 as their explainer model.

And Step 3 involves the “simulator model”: the model they’re using to transform the explanation (Step 2) into predictions (Step 3). Again, they use GPT-4 as their simulator model.
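To keep the three roles straight, here is a toy sketch of Steps 1 through 3 in which every model is replaced by a trivial stand-in. None of these functions call GPT-2 or GPT-4, and the names (`subject_neuron`, `explain`, `simulate`) are hypothetical; the real explainer and simulator are prompted language models, not string matching.

```python
# Hypothetical stand-ins for the three roles; no real model is called.
HIDDEN_TRIGGERS = {"Avengers", "Hulk", "Thor", "Loki"}  # unknown to the explainer

def subject_neuron(tokens):
    """Step 1 (subject model): one neuron's activation for each token."""
    return [1.0 if token in HIDDEN_TRIGGERS else 0.0 for token in tokens]

def explain(tokens, activations):
    """Step 2 (explainer model): describe which tokens were active."""
    active = sorted({t for t, a in zip(tokens, activations) if a > 0.5})
    return "fires on: " + ", ".join(active)

def simulate(explanation, tokens):
    """Step 3 (simulator model): predict activations from the explanation alone."""
    named = set(explanation.split(": ", 1)[1].split(", "))
    return [1.0 if token in named else 0.0 for token in tokens]

seen = "Thor and Hulk joined the Avengers".split()
held_out = "Hulk smashed the car while Loki watched".split()

explanation = explain(seen, subject_neuron(seen))
predicted = simulate(explanation, held_out)
actual = subject_neuron(held_out)

print(explanation)
print("predicted:", predicted)
print("actual:   ", actual)
```

Note that the simulator misses “Loki”: the explanation was built from a passage that never mentioned him, so it generalizes only partly to the new text. That gap between predicted and actual activations is what the scoring step is meant to measure.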

An illustration of what I call Step 2 is depicted below. The highlighted text represents tokens for which a given neuron in GPT-2 is active; the shade of green represents how active (darker green = more active). Based on this input, GPT-4 presents a “guess” as to what that neuron is doing.

A reasonable question at this point is what, exactly, GPT-4 is doing.

Somewhat remarkably, GPT-4 can be prompted to produce an explanation in this manner, using a prompt that explains what the researchers are looking for, as well as an example of what it means for a neuron to be active vs. not active:

I’ve noted this before, but I continue to be impressed at just how flexible the general prompting approach is. In the past, one might’ve done something similar by training a machine learning model to map from some representation of the words to some representation of their activation—i.e., a vector of numbers. Here, GPT-4 is simply presented with a sequence of tokens—strings, essentially—and is able to “adapt” to this input on the basis of the prompt.

This is also, largely, how Step 3 works: using this generated explanation, GPT-4 produces a set of predicted activations for that same neuron on another passage of text.

Scoring its performance (Step 4) is relatively straightforward: the authors try out a few different scoring methods, but the basic intuition is that one is looking for the correlation between predicted behavior and actual behavior. A perfect prediction would mean that the neuron was always active when GPT-4 predicted it would be, and that it was never active when GPT-4 predicted it wouldn’t be.
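As a minimal sketch of the basic intuition, here is a plain Pearson correlation between predicted and actual activations. This is just one simple instance of the idea, not necessarily the exact scoring method the authors settled on, and the activation values are invented for illustration.

```python
import numpy as np

def correlation_score(actual, predicted):
    """Pearson correlation between actual and simulated activations."""
    actual = np.asarray(actual, dtype=float)
    predicted = np.asarray(predicted, dtype=float)
    if actual.std() == 0 or predicted.std() == 0:
        # Correlation is undefined for a constant sequence; treating it as
        # uninformative (0.0) is an assumption made for this sketch.
        return 0.0
    return float(np.corrcoef(actual, predicted)[0, 1])

# Made-up activations for six tokens.
actual = [0.0, 0.1, 0.9, 0.0, 0.8, 0.0]
good_prediction = [0.0, 0.0, 1.0, 0.0, 1.0, 0.0]
poor_prediction = [1.0, 0.0, 0.0, 1.0, 0.0, 0.0]

print("good explanation:", round(correlation_score(actual, good_prediction), 3))
print("poor explanation:", round(correlation_score(actual, poor_prediction), 3))
```

A score near 1 means the simulated activations rise and fall with the real ones; a score near 0 (or below) means the explanation tells us little about when the neuron fires.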

Using this approach, GPT-4 identified GPT-2 neurons that respond to concepts like “Marvel comics vibes”, “similes”, “subtle last names”, and more.

But does it work?

Is this a viable approach?

One way to answer that question is to look at its empirical performance. How well do GPT-4’s predictions correlate with the actual behavior of neurons in GPT-2?

The authors write:

We found over 1,000 neurons with explanations that scored at least 0.8, meaning that according to GPT-4 they account for most of the neuron's top-activating behavior.

GPT-2 has 307,200 neurons, so this is about 0.33% of the total number of neurons in the network. That is, the method achieved strong performance for less than 1% of the entire network.
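The arithmetic is easy to check. One assumption is flagged in the code: the total of 307,200 is consistent with 48 layers of 6,400 neurons each, but the text above does not say where the number comes from, so treat that breakdown as a guess.

```python
total_neurons = 307_200   # figure quoted above
well_explained = 1_000    # lower bound ("over 1,000")

fraction = well_explained / total_neurons
print(f"{fraction:.2%} of neurons have an explanation scoring at least 0.8")

# Assumption: 48 layers x 6,400 neurons per layer reproduces the total.
assumed_layers, assumed_neurons_per_layer = 48, 6_400
print(assumed_layers * assumed_neurons_per_layer == total_neurons)
```

Since the quote says “over 1,000”, 0.33% is a floor rather than an exact figure, but the conclusion (well under 1%) holds either way.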

Is that good or bad? I think it depends on where you stand.

Less than 1% is, obviously, a small amount—if that’s the best we can do, it suggests this isn’t really a viable method in practice. Understanding less than 1% of a system is certainly far from complete understanding. It’s also a very expensive approach, so it may represent poor return on investment.

But this is also, importantly, the first implementation of such an approach. As researchers put their heads to the problem, they might identify more effective prompting methods, more effective ways of extracting and presenting neuron activations, and even ways of combining this approach with other LLM-ology methods to unlock additional explanatory power. From that perspective, it could be a promising start.

The more challenging questions, in my view, have to do with the underlying conceptual assumptions of the approach; that’s what I tackle below.

Assumptions and limitations

As I see it, there are at least four potential limitations to this approach as currently implemented—some of which OpenAI acknowledges in its post.

Limitation #1: Maybe neurons aren’t explainable.

It’s possible that the behavior of individual neurons—particularly in larger networks like GPT-4—may simply not be explainable. That is, their behavior may not map onto a natural, human-interpretable partitioning of the space of concepts and functions. Perhaps they’re just the wrong unit of analysis. (This connects to limitation #3 below, which is that perhaps we ought to broaden our lens from individual neurons to circuits of neurons and their function.)

A related problem is that perhaps neurons do encode important features, but those features can’t and won’t even be understood by humans. OpenAI refers to this as the “alien features” problem:

This could happen because language models care about different things, e.g. statistical constructs useful for next-token prediction tasks, or because the model has discovered natural abstractions that humans have yet to discover, e.g. some family of analogous concepts in disparate domains.

This reminds me of an important point I often hear with respect to the brain: the brain is under no obligation to conform to psychological theories. Put another way: our theories of how the mind works may not map cleanly onto how the brain works—the brain, after all, is an ancient biological system whose regions evolved under very different conditions (and for very different purposes) than the lens with which we typically try to understand it would imply.

Limitation #2: Can we infer mechanism from correlation?

This method is essentially a correlational approach. GPT-4 is used to identify potential concepts that co-vary with activation patterns for a given GPT-2 neuron, then predict whether that pattern holds for another set of data.

Correlations are important, but they’re not sufficient for inferring mechanism. A similar issue exists for another LLM-ology technique called “probing”, in which a classifier is trained to identify where in a network certain information (e.g., part-of-speech) can be reliably decoded. The problem with this approach is that even if part-of-speech information can be decoded from, say, layer 3 of BERT, that doesn’t mean that this is what layer 3 is doing. Is the network actually “aware” of part-of-speech information—that is, does this information make a meaningful difference in the behavior of downstream neurons and neural circuits?
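Here is a minimal sketch of what a probe looks like, using synthetic “activations” rather than anything extracted from BERT. The data, the binary tag, and the choice of a logistic regression trained by gradient descent are all assumptions for illustration.

```python
import numpy as np

rng = np.random.default_rng(0)

# Synthetic stand-in for one layer's activations: 400 tokens x 16 units.
labels = rng.integers(0, 2, size=400)   # hypothetical tag, e.g. noun vs. not
activations = rng.normal(size=(400, 16))
activations[:, 3] += 3.0 * (labels - 0.5)   # the tag leaks into unit 3

train, test = slice(0, 300), slice(300, 400)

# The probe: logistic regression fit by plain gradient descent.
w, b = np.zeros(16), 0.0
for _ in range(200):
    p = 1.0 / (1.0 + np.exp(-(activations[train] @ w + b)))
    error = p - labels[train]
    w -= 0.1 * activations[train].T @ error / 300
    b -= 0.1 * error.mean()

predictions = activations[test] @ w + b > 0
accuracy = (predictions == labels[test]).mean()
print(f"held-out probe accuracy: {accuracy:.2f}")
print("unit with the largest probe weight:", int(np.argmax(np.abs(w))))
```

The probe decodes the tag well, but notice what it cannot tell us: nothing in this code checks whether any downstream computation ever reads unit 3. High decoding accuracy shows the information is present, not that it is used.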

Yet another analogy to biological neuroscience can be made here: techniques such as in vivo recording allow researchers to record the behavior of individual neurons while an animal (e.g., a rat) performs some task; this has led to remarkable discoveries such as “place cells”—neurons in the hippocampus that appear to encode the location of a rat in a spatial environment. But this evidence, too, is correlational. To demonstrate that this information is being used by the network, researchers use techniques like optogenetics, which allows them to control the behavior of an individual neuron—e.g., “turning it on or off”—and see how that affects the activity of downstream neurons or even the animal itself.3

Putting this all in context: even if a GPT-2 neuron tends to respond to things that can be described as “Marvel comics vibes”, it’s unclear whether that neuron’s functional role is, in fact, to encode whether something has “Marvel comics vibes”. To test that, researchers would need to manipulate the behavior of the “Marvel comics vibes” neuron and see how it affects the rest of the network.
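The gap between correlation and function shows up clearly in a toy network. In this sketch (entirely synthetic, with made-up weights) two hidden neurons track a feature equally well, but only one of them is wired to the output. Ablating each in turn, a crude software analogue of switching a neuron off, reveals which one actually matters.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical binary feature per input, e.g. "has Marvel comics vibes".
feature = rng.integers(0, 2, size=200).astype(float)

# Two hidden neurons that both track the feature closely...
hidden = np.stack([feature + 0.1 * rng.normal(size=200),
                   feature + 0.1 * rng.normal(size=200)], axis=1)
# ...but only neuron 1 has a nonzero weight into the output.
w_out = np.array([0.0, 2.0])

def output(hidden, ablate=None):
    h = hidden.copy()
    if ablate is not None:
        h[:, ablate] = 0.0   # "turn the neuron off"
    return h @ w_out

baseline = output(hidden)
correlations, effects = [], []
for neuron in (0, 1):
    correlations.append(np.corrcoef(hidden[:, neuron], feature)[0, 1])
    effects.append(np.abs(output(hidden, ablate=neuron) - baseline).mean())
    print(f"neuron {neuron}: correlation with feature = {correlations[-1]:.2f}, "
          f"mean output change when ablated = {effects[-1]:.2f}")
```

A purely correlational method would describe both neurons identically. Only the intervention distinguishes the neuron the network relies on from the one it ignores.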

Limitation #3: Neurons vs. circuits.

A debate in neurophysiology is whether the “right” level of analysis is individual neurons or circuits of neurons. (A neural circuit is a set of neurons sharing some anatomical connectivity and possibly participating in some shared function.) Lots of neurons do indeed seem to do specific, interpretable things (e.g., many cells in primary visual cortex are selective for the “orientation” of inputs). But making claims about higher-order functions, or more complex functions, may require “zooming out” to the level of multiple neurons, i.e., a circuit.

The same question can be raised here.

On the one hand, this can be seen as a hopeful point: if much of what GPT-2 (or GPT-4, etc.) does can only be understood at the level of circuits, then the <1% figure cited above should not be understood as an upper-bound on the interpretability of GPT-2; it just means we’ll need to zoom out to get a better picture of what it does.

But on the other hand, it’s also a serious challenge: identifying “circuits” is no easy task. Even with ~300K neurons (let alone a model with ~175B parameters, like GPT-3), there’s a vast number of ways in which those neurons can be combined and permuted. The problem can be made more tractable by narrowing the search space somewhat, e.g., ruling out the possibility of neurons in layer 1 and layer 12 as being adjacent “pairs” in a circuit; but it’s still very hard. Neuroscientists have spent decades mapping the anatomy of various animal brains to give us an anatomical basis for positing certain functional circuits, and the identification of relevant neural circuits is far from a finished matter.
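To get a feel for how quickly the search space grows, we can count unordered groups of neurons of a given size. This treats a “circuit” as nothing more than a set of k neurons, which is a simplifying assumption; real circuits also depend on connectivity and layer order.

```python
import math

n_neurons = 307_200  # the GPT-2 neuron count quoted earlier

# Number of distinct unordered groups of k neurons.
candidate_counts = {k: math.comb(n_neurons, k) for k in (2, 3, 4)}

for k, count in candidate_counts.items():
    print(f"groups of {k} neurons: {count:.3e}")
```

Even pairs number in the tens of billions, and each additional neuron multiplies the count by several orders of magnitude, which is why constraints on where to look matter so much.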

Limitation #4: Can this approach scale to larger models?

The researchers used GPT-4 to try to explain GPT-2. GPT-2, notably, is much smaller than GPT-4—and the method still explains <1% of neurons in GPT-2. Also notably, most applications of LLMs we hear about these days use larger models, like GPT-3 or even GPT-4.

Is it plausible that this method could be used to explain these larger models, like GPT-4? Does that mean we’d use GPT-4 to explain itself?

The authors suggest that at some point, we may even want to use a smaller model to explain a larger model—particularly if we’re concerned the larger model is capable of deceiving us. For example, perhaps we will need to use GPT-3 to explain a future GPT-6 to avoid the possibility of GPT-6 giving false explanations of its behavior. But is this a realistic proposition?

I think it’s difficult to say.

One possibly encouraging thing is that smaller models can be reliably trained from larger models to approximate essentially the same function. This is the logic behind concepts like “dark knowledge” and “knowledge distillation”: although a big network seems to be important for learning a function from the raw data, a smaller network can often be trained from the big network once that function has been learned. If that principle applies here as well, it suggests that perhaps the functions performed by individual neurons in GPT-4 could be approximated and explained through observation by GPT-2 (or another such network).
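For readers unfamiliar with distillation, here is a minimal sketch of its core ingredient: training a small “student” against the softened output distribution of a large “teacher”. The logits and the three classes are invented, and this shows only the loss, not a training loop.

```python
import numpy as np

def softmax(logits, temperature=1.0):
    z = np.asarray(logits, dtype=float) / temperature
    z = z - z.max()          # subtract the max for numerical stability
    e = np.exp(z)
    return e / e.sum()

# Hypothetical teacher logits for the classes: cat, dog, car.
teacher_logits = [9.0, 5.0, 1.0]

hard = softmax(teacher_logits, temperature=1.0)
soft = softmax(teacher_logits, temperature=4.0)
print("teacher at T=1:", np.round(hard, 3))
print("teacher at T=4:", np.round(soft, 3))

def distillation_loss(student_logits, teacher_logits, temperature):
    """Cross-entropy between softened teacher and student distributions."""
    t = softmax(teacher_logits, temperature)
    s = softmax(student_logits, temperature)
    return float(-(t * np.log(s)).sum())

matching_student = [9.0, 5.0, 1.0]
mismatched_student = [1.0, 5.0, 9.0]
print("loss, matching student:  ", round(distillation_loss(matching_student, teacher_logits, 4.0), 3))
print("loss, mismatched student:", round(distillation_loss(mismatched_student, teacher_logits, 4.0), 3))
```

Raising the temperature exposes the teacher’s relative preferences among the wrong answers (here, that “dog” is a much better guess than “car”). That extra signal is the “dark knowledge” a smaller network can learn from.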

But that’s mostly speculation, and I’d want to see empirical work before I actually felt encouraged by it.

Is the future of LLM-ology done by LLMs?

This all connects to a broader point. Namely, will research in the future—particularly research on LLMs—be conducted by other LLMs? That’s the premise behind some projections regarding rapid improvements in AI. For example, in a recent episode of the 80K podcast, guest Tom Davidson suggests that entire AI labs will be largely run by other AIs, which in turn would accelerate progress in AI development:

By the time that the AIs can do 20% of cognitive tasks in the broader economy, maybe they can already do 40% or 50% of tasks specifically in AI R&D. So they could have already really started accelerating the pace of progress by the time we get to that 20% economic impact threshold.

This recent work by OpenAI feels like a tentative, attempted step in that direction: using LLMs to explain other LLMs.

As I noted above, there are plenty of limitations with this first step. Those limitations may turn out to be insurmountable: perhaps LLM-ology by LLMs—and perhaps LLMs more generally—will be a dead end, research-wise. I think that possibility is always on the table.

But I’ve also learned to be very cautious about underestimating the pace of progress in this domain. I started working in Natural Language Processing (NLP) about 8 years ago, just after graduating from college. In that time, I’ve seen remarkable improvements in language models. We went from static models like word2vec to contextualized models like BERT. As recently as 2019, BERT was the state of the art, and practitioners I spoke with were already frustrated by how quickly it surpassed so many long-standing NLP benchmarks, to the point where many new benchmarks were created to “raise the bar”, so to speak. Then came GPT-3 in 2020, ChatGPT in 2022, and GPT-4 in 2023. Now the discussion is not so much about individual NLP benchmarks but about metrics like the bar exam: metrics we use to determine whether individual human beings are certified to perform a particular occupation.

Of course, the path from these improvements to replacing jobs, accelerating research, and more is a long one: the real world is complex and messy and it also depends on things like regulations and government policies. The writer Timothy Lee (FullStackEconomics, UnderstandingAI) has written some excellent pieces recently connecting this basic research to the question of economic and policy impact (e.g., employment, regulation, etc.).

But in terms of the narrower question of whether improvements to LLMs can be used to generate insights into how LLMs work, and whether these insights can in turn generate more powerful LLMs? It seems to me very much possible; what I hope is that humans, collectively, can manage somehow to stay not just in the loop but in the driver’s seat.

The ImageNet challenge involves training a model to classify images in the ImageNet database. For example, such a model should be able to output a label, tag, or caption for a given image (e.g., “a squirrel” or “a cat”).

The neuroscientist Steve Ramirez has done some excellent work in this vein, using optogenetics to inject “false memories” into a mouse.