Humans, LLMs, and the symbol grounding problem (pt. 1)

Examining evidence in favor of an oft-cited criterion for true language understanding.

In this series of posts, I’m exploring the debate around whether Large Language Models (LLMs), such as GPT-3, can truly be said to understand language.

The first post (here) outlined two opposing perspectives on the question, which I call the duck camp view and the axiomatic rejection view. The duck camp view argues that if LLMs behave like they understand language, then they probably understand; the axiomatic rejection view argues that true understanding involves more than simply behavior––in particular, there are certain foundational properties or mechanisms that underlie human language understanding.

The second post (here) argued that for the axiomatic rejection view to have a case, the criteria its proponents cite as prerequisites for language understanding need to apply not only to LLMs but to humans as well. After all, humans are the implicit bar against which we’re measuring LLMs––so if humans don’t meet these criteria, it doesn’t seem particularly fair to require them of LLMs.

This post considers the first criterion: grounding.

The embodied simulation hypothesis.

Where do linguistic symbols (e.g., words, phrases, etc.) get their meaning?

If we define symbols purely in terms of other symbols––as in a dictionary––we quickly run into an infinite regress. This is most plainly illustrated by imagining that you are trying to learn the words of another language using only a dictionary written in that language. Those definitions point only to other words, which themselves have definitions that point only to other words, and so on. Because any dictionary is finite, at some point we’ll end up defining a word in terms of itself, either directly or through a chain of other words. This puzzle is called the symbol grounding problem, and it is often used to support the argument that our understanding of linguistic symbols must be grounded in something more than other linguistic symbols.
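The circularity can be made concrete with a minimal Python sketch. The toy dictionary is entirely hypothetical, and `find_definition_cycle` is an illustrative helper of my own, not a standard tool. The helper follows definitions depth-first from a starting word and reports the first loop it finds. In a closed dictionary, where every entry is defined using other entries, some loop is unavoidable.

```python
def find_definition_cycle(dictionary, start):
    """Return a list of words forming a definitional loop reachable from `start`,
    or None if every chain ends at a word with no (or an empty) definition."""
    path = []          # the chain of definitions currently being followed
    on_path = set()    # the same words, for fast "have we looped back?" checks
    finished = set()   # words already fully explored without finding a loop

    def visit(word):
        if word in on_path:
            # We've come back to a word already in the chain: that's the loop.
            return path[path.index(word):] + [word]
        if word in finished or word not in dictionary:
            return None
        path.append(word)
        on_path.add(word)
        for defining_word in dictionary[word]:
            cycle = visit(defining_word)
            if cycle:
                return cycle
        path.pop()
        on_path.remove(word)
        finished.add(word)
        return None

    return visit(start)


# A hypothetical toy dictionary: every definition uses only other entries.
toy_dictionary = {
    "big": ["large"],
    "large": ["great", "size"],
    "great": ["big"],
    "size": ["large"],
}

print(find_definition_cycle(toy_dictionary, "big"))
```

Starting from "big", the search runs big → large → great → big. Only an entry that leads outside the dictionary, to something other than words, would end the chain without a loop.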

One popular proposal is that our understanding of language is somehow embodied in our experience of the world. The idea that knowledge is rooted in sensorimotor experience is quite old, and has its origins in the philosophical tradition of Empiricism. One of the clearest articulations of its application to language specifically comes from Carl Wernicke:

The concept of the word “bell,” for example, is formed by the associated memory images of visual, tactual and auditory perceptions. These memory images represent the essential characteristic features of the object, bell. (Wernicke, 1874)

A more modern formulation comes from this 2015 chapter by Ben Bergen, which summarizes the state of scientific knowledge regarding the embodied simulation hypothesis. Bergen describes this hypothesis as follows:

The same neural tissue that people use to perceive in a particular modality or to move particular effectors would also be used in moments not of perception or action but of conception, including language use. (Bergen, 2015, pg. 143).

This embodied simulation hypothesis seems like a natural solution to the symbol grounding problem: “meaning”, here, is simply the process (or result) of simulation.

The hypothesis has some other desirable qualities. For example, it feels quite parsimonious. We already know that the human brain contains regions dedicated to sensorimotor processing, and further, that these regions are in all likelihood evolutionarily older than human language. Embodied simulation, then, reflects a kind of neural exaptation––the brain excels at “recycling” regions that evolved for one purpose to serve other, newer purposes. As the scientist Elizabeth Bates once put it: “language is a new machine built out of old parts”.

It also feels true to our own experience. Introspection isn’t particularly in vogue as a psychological method these days, but many people report subjective experiences consistent with embodied simulation: when we encounter language, many of us construct vivid images of the entities and events it describes.

Consider this excerpt from Ben Bergen’s 2012 book, Louder than Words:

Polar bears have a taste for seal meat, and they like it fresh. So if you’re a polar bear, you’re going to have to figure out how to catch a seal…The polar bear mostly blends in with its icy, snowy surroundings, so it’s already at an advantage over the seal…But seals are quick. Sailors who encountered polar bears in the nineteenth century reported seeing polar bears do something quite clever to increase their chances of a hot meal. According to these early reports, as the bear sneaks up on its prey, it sometimes covers its muzzle with its paw, which allows it to go more or less undetected. Apparently, the polar bear hides its nose.

Now, apparently this “fact” isn’t actually true.

But if you’re anything like me, you probably imagined a vivid scene of a polar bear sliding around the snow, covering its muzzle in the hope of blending in. And further, the fact that a polar bear has to do this seems quite obvious––after all, snow is white, and a polar bear’s nose is black. Yet these properties aren’t themselves mentioned at all in the passage! Your brain filled in the gaps, so to speak––suggesting that you were imagining (or “simulating”) sensorimotor properties of the scene.[1]

Grounding and LLMs

By now, hopefully it’s clear why this hypothesis is a powerful argument against the idea that LLMs understand language.

Most LLMs, after all, are exposed to linguistic input alone. Their representation of a word boils down to a bunch of numbers that reflect that word’s statistical distribution in a large corpus of text (e.g., Wikipedia)––which words it co-occurs with, which other words occur in similar contexts and which don’t, and so on. In fact, this is pretty close to the canonical puzzle presented by the symbol grounding problem: LLMs “define” symbols in terms of which other symbols they co-occur with.
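Here is a minimal sketch of the distributional idea. It assumes a tiny hypothetical corpus and a simple count-based approach, which modern LLMs do not use: each word is represented by counts of the words appearing within a fixed window around it, and words used in similar contexts end up with similar vectors. LLMs such as GPT-3 learn dense vectors with neural networks rather than raw counts, so this illustrates the principle only.

```python
from collections import Counter
from math import sqrt

def cooccurrence_vectors(sentences, window=2):
    """Map each word to a Counter of the words appearing within `window` positions of it."""
    vectors = {}
    for sentence in sentences:
        tokens = sentence.lower().split()
        for i, word in enumerate(tokens):
            context = tokens[max(0, i - window):i] + tokens[i + 1:i + 1 + window]
            vectors.setdefault(word, Counter()).update(context)
    return vectors

def cosine(u, v):
    """Cosine similarity between two sparse count vectors (Counters)."""
    dot = sum(count * v[w] for w, count in u.items())  # missing keys count as 0
    norm_u = sqrt(sum(c * c for c in u.values()))
    norm_v = sqrt(sum(c * c for c in v.values()))
    return dot / (norm_u * norm_v) if norm_u and norm_v else 0.0

# Hypothetical toy corpus: the vectors are built from nothing but other words.
corpus = [
    "the bear hunts the seal on the ice",
    "the wolf hunts the deer in the forest",
    "the seal swims under the ice",
    "the deer runs through the forest",
]
vecs = cooccurrence_vectors(corpus)
print(cosine(vecs["bear"], vecs["wolf"]))
print(cosine(vecs["bear"], vecs["ice"]))
```

In this toy corpus, "bear" and "wolf" get identical context vectors (a cosine similarity of 1) because both appear as "the ___ hunts the". Nothing in either vector connects the words to fur, teeth, or snow.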

(For now, I’m ignoring developments in so-called multi-modal LLMs: these are models that learn not only from a corpus of text, but also from non-linguistic input, such as images, video, or even a simulated physics environment.)

So if embodied simulation is a necessary component of human language comprehension, then it seems fair to reject a priori the claim that LLMs understand language––or at least, that whatever they’re doing is in any way equivalent to what humans are doing.

Evidence for embodied simulation in humans.

What would constitute evidence in support of this hypothesis?

Weak vs. strong embodiment

Before I discuss specific experiments or results, I want to distinguish between weak and strong versions of the hypothesis.

The weak version posits that embodied simulation happens to some extent during language comprehension––perhaps not always, but enough that we can reliably detect and measure it. However, it does not require this simulation to be necessary for comprehension: it might be “epiphenomenal”, in much the same way as exhaust fumes are not required for a car engine to run but occur as a byproduct or “side effect” of other critical processes. This perspective will be important to keep in mind (and it’s one that’s well-articulated in this 2008 article by Mahon & Caramazza).

The strong version, in contrast, claims that simulation is necessary for language comprehension––i.e., it plays a functional role in how we understand language.

Of course, as with every oppositional contrast, the truth might lie somewhere in the middle. For example, perhaps embodied simulation plays a functional role in how children understand language, but as our brains mature, this simulation can be short-circuited by more efficient processes; any “simulation” we observe in adults is thus a kind of epiphenomenal echo.

But the distinction between these hypotheses matters a lot for how we think about LLMs. If the weak version is true, then it seems more plausible that humanlike language comprehension could be achieved by LLMs; but if the strong version is true, then it suggests that LLMs are missing a critical piece of the comprehension puzzle.

There’s evidence that simulation happens.

By now, there are at least two to three decades’ worth of evidence supporting the weak version of the embodied simulation hypothesis––simulation seems to happen, at least to some degree, under some conditions, during language comprehension.

As Bergen (2015) notes, this evidence falls into two broad categories: some is based on behavioral experiments, and some involves neuro-imaging (e.g., fMRI).

Behavioral evidence.

Much behavioral research on embodied simulation uses a similar paradigm with similar assumptions.

The basic idea is as follows. First, participants are exposed to some linguistic stimulus (e.g., a sentence like He hammered the nail into the floor). Then, at some point––either immediately after, or after a longer delay––they are exposed to a non-linguistic stimulus (e.g., a picture of a nail) about which they must make a judgment. For example, they might be asked: was this object mentioned in the sentence you read before?

The critical manipulation is that some sensorimotor feature of the non-linguistic stimulus (e.g., whether the nail is horizontal or vertical) is either congruent or incongruent with what the sentence implies. Thus, in critical trials––where the correct answer is “yes”, i.e., the object was mentioned previously[2]––we can ask whether this experimental manipulation predicts how participants respond. For example, if participants are slower or less accurate on incongruent trials, it suggests that these trials were harder for them in some way. And if those trials were harder, it suggests that participants had activated some kind of sensorimotor feature of the object in question––which, crucially, was not explicitly mentioned in the linguistic stimulus.

Put plainly: are people faster (or more accurate) to verify that a horizontal nail was mentioned in “He hammered the nail into the wall” (vs. “He hammered the nail into the floor”), and faster (or more accurate) to verify that a vertical nail was mentioned in “He hammered the nail into the floor” (vs. “He hammered the nail into the wall”)?[3]

The short answer is, for the most part, yes. There’s now a large body of experimental evidence suggesting that people simulate various implied features of objects and events, such as their orientation, their shape, their volume, and more. There’s also evidence that the degree of mental simulation interacts with other linguistic features, such as grammatical aspect.
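The congruency comparison behind these findings can be sketched in a few lines of Python. This is a simplified illustration under stated assumptions:

- The trial records and field names (`critical`, `correct`, `condition`, `rt_ms`) are hypothetical.
- The numbers are invented only to show the data layout.
- Published studies often analyze trial-level data with mixed-effects models rather than comparing raw condition means.

```python
from statistics import mean

def congruency_effect(trials):
    """Mean RT on incongruent minus mean RT on congruent trials (in ms).

    Only correct responses on critical ("yes") trials are included, a common
    but not universal preprocessing choice. A positive value means that
    pictures mismatching the sentence's implied features slowed people down.
    """
    def rts(condition):
        return [t["rt_ms"] for t in trials
                if t["critical"] and t["correct"] and t["condition"] == condition]
    return mean(rts("incongruent")) - mean(rts("congruent"))

# Hypothetical trials, invented purely to show the data layout.
trials = [
    {"critical": True, "correct": True, "condition": "congruent", "rt_ms": 610},
    {"critical": True, "correct": True, "condition": "congruent", "rt_ms": 640},
    {"critical": True, "correct": True, "condition": "incongruent", "rt_ms": 660},
    {"critical": True, "correct": True, "condition": "incongruent", "rt_ms": 700},
    {"critical": True, "correct": False, "condition": "incongruent", "rt_ms": 900},  # error: excluded
    {"critical": False, "correct": True, "condition": "filler", "rt_ms": 580},       # filler: excluded
]
print(congruency_effect(trials))
```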

There are a few caveats to mention.

As is to be expected in any large body of research, not every experiment successfully replicates (see, for example, this attempted multi-lab replication of the ACE, or action-sentence compatibility, effect), but many do; for example, a couple of years ago, I successfully replicated the results from this 2006 article showing that comprehenders simulate implied shape and orientation.

There’s (as far as I know) still no positive evidence suggesting that comprehenders simulate the smell of objects or events; one attempt to find such evidence yielded null results. (In general, senses like touch, taste, and smell tend to be neglected in much psycholinguistic research, though this is changing.)

Most of the research on this topic (though not all) focuses on language about relatively concrete scenarios––hammers and nails, not truth and justice.

But broadly, my takeaway from the state of research on this topic is: there’s good behavioral evidence that simulation happens, at least for many of the sensory domains researchers have tested. If you’re interested in more details, I recommend Ben Bergen’s book, which provides a great summary of the evidence.

Neuro-imaging evidence.

Another category of evidence comes from research using neuro-imaging tools, such as fMRI.

fMRI allows researchers to identify which regions of the brain show significant changes in blood oxygenation (the blood-oxygen-level-dependent, or BOLD, response), which are assumed to be correlated with significant changes in neural activity in those regions. Although fMRI doesn’t give researchers the same degree of granularity as invasive tools like electrocorticography (ECoG), it allows them to identify broad “regions of interest”.

Importantly, there’s also good evidence for some localization of function in the human brain. Distinct cortical regions process different kinds of sensory input (visual cortex, auditory cortex, etc.), and others control movement (motor cortex). Further, many of these regions appear to be organized into sensorimotor “maps”: for example, our motor cortex has sub-regions that correspond to different parts of our body, such as our hands, arms, tongue, feet, and so on.

These two facts put us in a good position to use fMRI to study the embodied simulation hypothesis. Specifically, the embodied simulation hypothesis predicts: encountering language about certain sensorimotor experiences (e.g., kicking vs. licking) will be correlated with changes in brain activity in regions of sensorimotor cortex (e.g., the “feet” part of motor cortex vs. the “tongue” part). Is this true?

Again, the short answer appears to be largely yes. For example, this 2004 article found that reading words about movements involving different body parts (e.g., pick vs. kick vs. lick) involved brain activity that was––while not identical––relatively similar to brain activity during actually executing those movements. There’s more recent evidence as well, such as this 2018 article finding that maintaining action words in working memory was correlated with greater activation in corresponding regions of premotor and supplementary motor cortex.

Also again, there are caveats. For one, the extent to which this sensorimotor activation is observed may be pretty context-dependent. I’m also just personally less familiar with this literature than the behavioral literature, so I’m less aware of any potential holes or replicability issues––but that’s always something to keep in mind.

Regardless, the biggest issue of all is that fMRI––like the behavioral evidence cited above––fundamentally cannot provide evidence that simulation plays a causal, functional role in comprehension.

Simulation: functional or epiphenomenal?

Both types of evidence cited above suggest that simulation happens (at least to some extent) during language comprehension––this supports the weak version of the hypothesis.

This evidence is also consistent with the strong version of the hypothesis: i.e., it’s what the strong version would predict would happen in those tasks.

But importantly, these pieces of evidence are also consistent with the view that simulation is entirely epiphenomenal. Consider the behavioral paradigm of sentence-picture verification I described: it shows that some degree of simulation happened (e.g., of the nail’s implied orientation), but it doesn’t show that this simulation was necessary to understand the sentence “He hammered the nail into the wall”. Maybe simulation is part of some optional, elaborative process that comprehenders engage some of the time––like, say, when they’re asked to make strange judgments about pictures of nails––but it’s not part of comprehension itself.[4]

The evidence, as presented, cannot adjudicate between these two interpretations: is simulation necessary or is it epiphenomenal?

Weaker evidence for a causal role?

For grounding to be a definitive blow against LLMs understanding language, we need to demonstrate that simulation is actually a necessary component of human language understanding.

There’s some evidence consistent with this claim, but––at least to my knowledge––it’s a little spottier.[5]

It’s fundamentally a harder hypothesis to test: to demonstrate a causal role, we’d need to show that interfering with (or “knocking out”) neural machinery involved in sensorimotor experience also interferes with language comprehension. That is, we should be able to selectively impair how well people understand sentences involving kicking vs. licking by interfering with different sub-regions of motor cortex. This is hard to do, because we can’t ethically just go around inflicting brain damage on people.

Evidence from neuropsychology

One way to demonstrate this is by running psycholinguistic experiments on populations of patients with different kinds of brain damage. It’s still correlational––in that patients are not randomly assigned to different kinds of brain damage––but it provides more convincing evidence of a causal role than fMRI does.

The approach follows the basic logic of dissociation studies. If one person has damage to their motor cortex and another person has damage to their visual cortex, the embodied simulation hypothesis predicts that they should have selective impairments: specifically, the person with motor cortex damage should struggle to comprehend[6] language about motor actions, and the person with visual cortex damage should struggle to comprehend language about vision or visual events. It's critical that these impairments be selective––if the person struggles to understand any language input, that might simply indicate more general language or even cognitive impairments.

One 2003 paper used this paradigm. The authors considered three different groups: patients with right frontal lobe damage (resulting in motor deficits), patients with right occipital lobe lesions (resulting in visual deficits), and healthy controls. Participants in each group then performed a lexical decision task. In this kind of task, participants are presented with different sequences of letters, some of which are words and some of which are not; researchers measure how quickly and accurately participants respond to the actual words. Importantly, of the critical words, some were predominantly visual-related nouns (e.g., tree), and some were action verbs (e.g., kick).[7]

The researchers found evidence consistent with embodied simulation:

Patients with motor deficits were less accurate when responding to action verbs (but not visual-related nouns).

Patients with visual deficits were less accurate when responding to visual-related nouns (but not action verbs).

Healthy controls didn’t show any selective impairments.

In other words, the location and type of brain damage were correlated with impairments in understanding specific kinds of language.

This suggests that these brain regions (and the functions they perform) play a causal role in understanding certain kinds of language––exactly what the embodied simulation hypothesis predicts.

Evidence from TMS

Of course, lesion studies are still fundamentally correlational: people are not “randomly assigned” to different kinds of brain damage, so there might be other, uncontrolled differences between patients with motor deficits and those with visual deficits––despite researchers trying their best to “match” characteristics across the groups.

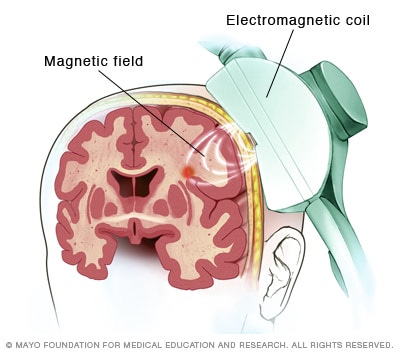

If we could induce a kind of “temporary lesion”, we could then ask whether the site of that lesion predicts specific language understanding deficits. This is exactly what transcranial magnetic stimulation (TMS) purports to do: with TMS, a magnetic pulse is applied to the surface of the scalp (so no invasive surgery is required). This has the effect of disrupting the activity of neural populations in the corresponding cortical area.

I should note that––as far as I know––the mechanics of TMS are still not fully understood. It’s supposedly quite safe, and it’s even used as a treatment for depression in some cases; but it’s unclear (again, at least as far as I know) exactly how this pulse either excites or inhibits neural activity in a particular region. (According to this paper, there’s some evidence that TMS results in transient excitation followed by reduced activity.)

Regardless, the core idea is similar to the lesion studies described above––if disrupting a brain region associated with specific aspects of sensorimotor experience (e.g., the leg vs. arm area of motor cortex) selectively impairs one’s ability to understand language about those aspects of sensorimotor experience as well, it suggests a causal role for those brain regions in language comprehension itself.

That’s the logic underlying this 2005 study. For each participant, the authors applied TMS to several different sub-regions of motor cortex, corresponding to movements involving hands/arms and movements involving feet/legs. Participants then performed a lexical decision task, where the critical words were either about hand/arm movements (e.g., pick) or foot/leg movements (e.g., kick). Here’s what they found (from the abstract):

TMS of hand and leg areas influenced the processing of arm and leg words differentially, as documented by a significant interaction of the factors Stimulation site and Word category. Arm area TMS led to faster arm than leg word responses and the reverse effect, faster lexical decisions on leg than arm words, was present when TMS was applied to leg areas.

That is, the authors observed a dissociation: in this case, applying TMS to a particular region of cortex seems to have facilitated responses to words about specific kinds of actions and not others.[8] Because this is an experiment involving random assignment––as opposed to an observational study––we can infer that this is a causal effect.
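The “significant interaction” in the quoted abstract can be written as a difference of differences. In my own notation (not the paper’s), let RT(s, w) be the mean lexical-decision time when TMS is applied to site s and the word belongs to category w, where both s and w range over arm and leg:

```latex
I = \big[\mathrm{RT}(\text{arm site}, \text{leg words}) - \mathrm{RT}(\text{arm site}, \text{arm words})\big]
  - \big[\mathrm{RT}(\text{leg site}, \text{leg words}) - \mathrm{RT}(\text{leg site}, \text{arm words})\big]
```

The reported pattern makes the first bracket positive (arm words faster under arm-area TMS) and the second negative (leg words faster under leg-area TMS), so I > 0. Now suppose instead that leg words were simply slower than arm words by the same amount at both sites. Then the two brackets would be equal and I would be 0. That crossover is what makes the result a dissociation rather than a general difference between word categories.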

Setting aside the typical caveats (e.g., whether this replicates), let’s focus on the finding itself. Importantly, this finding is consistent with what the strong version of embodied simulation predicts; it also seems inconsistent with a purely epiphenomenal role for simulation. Interfering with modality-specific parts of the brain also seems to have an effect on language comprehension: concretely, TMS makes participants faster at recognizing some kinds of words than others.

Behavioral interference

A final category of causal evidence comes from what I’ll call “behavioral interference” studies.

The idea here is basically to simulate the effects of TMS without doing TMS. If you make a participant engage in some kind of repetitive motor action (e.g., moving their hand in one direction again and again), that should temporarily “exhaust” the motor circuits responsible for controlling that action. If the strong embodied simulation hypothesis is correct, then understanding language about those actions should be selectively impaired. (This is an example of interference, and it’s a very widely used paradigm in cognitive psychology.)

There are a few studies in this vein. For example, Shebani & Pulvermüller (2013) asked participants to rhythmically tap either their hands or their feet; simultaneously, participants were presented with action words involving either hands or feet, and had to maintain those words in working memory. The authors found that the type of movement selectively interfered with word recall. Specifically:

We here report that rhythmic movements of either the hands or the feet lead to a differential impairment of working memory for concordant arm- and leg-related action words, with hand/arm movements predominantly impairing working memory for words used to speak about arm actions and foot/leg movements primarily impairing leg-related word memory.

Again, this is what you’d expect if the strong embodied simulation hypothesis were true. It’s also not what you’d expect if simulation were only epiphenomenal: if simulation plays no role in successful language comprehension, then “exhausting” motor circuits should have no effect on how well or easily people process and remember words about motor actions.

Embodied simulation: where do we stand?

To sum up the evidence reviewed thus far:

1. There’s good evidence from both behavioral and fMRI studies that processing language about concrete events activates “sensorimotor traces”, which may or may not play a functional role in comprehension.

2. There’s evidence from neuropsychology that damage to specific brain regions has selective effects on language comprehension, suggesting (though not proving) a causal role for embodied simulation.

3. There’s also some evidence using both TMS and behavioral interference that different sub-regions of cortex play differential functional roles in processing different kinds of words (e.g., hand words like pick vs. leg words like kick).

Importantly, (3) is the most convincing category of evidence that simulation plays a functional role in language comprehension. (2) has issues with uncontrolled differences across patient populations, and (1) is also consistent with simulation being epiphenomenal.

Does this mean we’ve demonstrated a causal role for embodied simulation? Not quite––here come the caveats.

Counterpoints: issues with the evidence thus far

First, there’s the issue of replication. I don’t know how many of the studies demonstrating a functional role have successfully replicated. For example, this 2022 study failed to replicate the 2013 study I described above using behavioral interference. Further, this 2022 review conducted a p-curve analysis, which is one way to evaluate the strength of evidence for a hypothesis across a body of published studies. Their results suggest that many of the positive findings are under-powered––which increases the likelihood that any given significant result is a false positive––and also that the literature is subject to publication bias, i.e., “significant” findings get published while null findings never see the light of day.[9]
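For readers unfamiliar with p-curve, here is a minimal sketch of its core logic. It is a simplification, not the full procedure used in the p-curve literature or in the review cited above.

- **The idea:** among statistically significant results (p < .05), p-values should be roughly uniform if there is no true effect, but right-skewed (piled up near zero) if there is one.
- **The test used here:** each significant p-value is rescaled to a "pp-value" (p / .05), and these are combined with Stouffer's method; a strongly negative Z suggests evidential value.
- **Assumptions:** the function names are my own, and the example p-values are invented.

```python
from statistics import NormalDist

def pcurve_bins(p_values, alpha=0.05, n_bins=5):
    """Count significant p-values in equal-width bins (.00-.01, ..., .04-.05 by default)."""
    width = alpha / n_bins
    counts = [0] * n_bins
    for p in p_values:
        if 0 < p < alpha:
            counts[min(int(p / width), n_bins - 1)] += 1
    return counts

def pcurve_right_skew(p_values, alpha=0.05):
    """Simplified p-curve right-skew test using Stouffer's method.

    Under the null of no true effect, significant p-values are uniform on
    (0, alpha), so p / alpha is uniform on (0, 1). Returns (Z, one-sided p);
    a strongly negative Z means significant p-values are bunched near zero.
    """
    significant = [p for p in p_values if 0 < p < alpha]
    if not significant:
        raise ValueError("p-curve needs at least one significant p-value")
    std_normal = NormalDist()
    pp_values = [p / alpha for p in significant]
    z = sum(std_normal.inv_cdf(pp) for pp in pp_values) / len(pp_values) ** 0.5
    return z, std_normal.cdf(z)

# Hypothetical p-values, invented for illustration (not from any real literature).
reported = [0.001, 0.002, 0.004, 0.011, 0.032, 0.2]
print(pcurve_bins(reported))        # 0.2 is ignored: it isn't significant
print(pcurve_right_skew(reported))
```

A flat curve, or one with many p-values just under .05, is what you'd expect from a mix of null effects and selective reporting. The published method also includes further tests that this sketch omits, such as one for whether the curve is flatter than expected at 33% power.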

Second, even if the experiments are replicable, the stimuli in these experiments are usually fairly constrained. As the authors of this 2018 paper point out, the stimuli often involve concrete scenarios, e.g., someone kicking a ball. But this is actually a fairly biased and unrepresentative sample of how language is used. As those same authors argue, many of the words we use are extremely abstract––they don’t refer to objects or events we can directly perceive or interact with. How could we possibly “simulate” sentences about abstract concepts? Proponents of embodied simulation have their own answers to this (e.g., that we understand those sentences through grounded metaphors), but those answers have their limitations too.[10]

Third, what exactly does it mean for someone to “comprehend” something? Perhaps this seems like a silly question––they either understand it or they don’t. But consider the 2005 TMS study I referenced above: concretely, the finding is that people are faster to recognize words about hand/arm actions than words about foot/leg actions when they receive TMS to the hand/arm region of motor cortex. This is a relatively narrow task; using it to make an argument about language comprehension more generally relies on at least two assumptions:

The longer it takes someone to recognize a stimulus as a word, the harder it is for them to do that.

The ease of word recognition is an important and interesting component of language comprehension more generally.

Putting these together, we conclude that embodied simulation plays some causal role in language comprehension. But it’s not all-or-none: people, after all, can still recognize foot/leg words as words––it just takes them ~50ms longer. So although word recognition is selectively facilitated or impaired, it’s not obliterated altogether. What does that mean about the degree to which simulation is necessary for comprehension?

This is actually a deep philosophical question I’ll be returning to throughout these posts: what exactly is language comprehension? It’s quite challenging to point to some clear set of criteria––some “end state” of a process with clearly articulated stages and sub-processes––that indicates whether comprehension has occurred or not.

Some of this might have to do with our tendency to conflate language comprehension with consciousness: “understanding” as our subjective experience of interpreting linguistic input.

But perhaps more importantly, it seems to me to be very much a matter of degree. Sometimes, we understand some of the words in a sentence but not others; we understand the literal meaning of an utterance but not its sarcastic implication; we understand the content of a paragraph but not how it fits into the bigger picture of an essay or our world knowledge more generally. It’s hard to draw a line in the sand that differentiates True Understanding™ from everything else.

Counter-counterpoints: a quick rejoinder

Those caveats are all about the quality of positive evidence we have for a specific claim. Namely:

Embodied simulation plays a functional role in language comprehension.

It could be that the positive evidence for this claim is very weak. But importantly, that’s distinct from presenting evidence against the claim. As one of the footnotes here also points out: an absence of evidence does not entail evidence of absence. Weak or null evidence for a claim does not mean the claim is false; it means we haven’t rejected whatever the null hypothesis happens to be.
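A quick power calculation shows why null results from small studies say little. This is a minimal sketch built on three assumptions:

- a simple two-group comparison;
- a normal approximation to the test;
- a hypothetical standardized effect size of d = 0.3, chosen for illustration and not estimated from the embodiment literature.

`approx_power` is my own helper, not a library function.

```python
from statistics import NormalDist

def approx_power(effect_size_d, n_per_group, alpha=0.05):
    """Approximate power of a two-sided, two-sample z-test for a standardized effect d.

    Uses the normal approximation and ignores the (negligible) chance of
    a significant result in the wrong direction.
    """
    z_crit = NormalDist().inv_cdf(1 - alpha / 2)
    noncentrality = effect_size_d * (n_per_group / 2) ** 0.5
    return NormalDist().cdf(noncentrality - z_crit)

# Hypothetical effect size d = 0.3 at a few sample sizes per group.
for n in (20, 50, 200):
    print(n, round(approx_power(0.3, n), 2))
```

Under these assumptions, a study with 20 participants per group would detect a real effect of this size only about 16% of the time. A null result from such a study is therefore weak evidence that the effect is absent.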

And here, a proponent of embodied simulation might point out: what evidence is there for some alternative theory of language comprehension? And how does that alternative theory address fundamental issues like the symbol grounding problem?

At this point, though, we find ourselves arguing over priors: a priori, how likely do you think it is that the embodied simulation hypothesis is true, as opposed to some alternative theory? The point of scientific experiments is to provide evidence with which we can update those priors. So if we really don’t have much evidence either way, it’s just a matter of opinion.
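The point about updating priors can be written out with Bayes’ rule in odds form. Here H stands for the strong embodied simulation hypothesis, H′ for some alternative theory, and D for the data:

```latex
\frac{P(H \mid D)}{P(H' \mid D)}
  = \underbrace{\frac{P(D \mid H)}{P(D \mid H')}}_{\text{Bayes factor}}
    \times
    \underbrace{\frac{P(H)}{P(H')}}_{\text{prior odds}}
```

The Bayes factor measures how much better one hypothesis predicts the data than the other; when it is close to 1, the data barely move anyone’s beliefs. Take a hypothetical Bayes factor of 1.2:

- someone who starts at 3:1 odds in favor of H ends at 3.6:1 (about 78%);
- someone who starts at 1:3 ends at 0.4:1 (about 29%).

They still disagree, mostly because of where they started.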

What about LLMs?

The point of these posts is ultimately to arrive at some kind of conclusion about whether or not LLMs can be said to understand language.

Embodied simulation is one of the “axiomatic” criteria often given to support the claim that LLMs are a priori incapable of True Understanding™. For this criterion to make sense, embodied simulation must play a functional role in human language comprehension. Based on what I’ve presented here, I think the evidence in favor of this claim is fairly weak. That doesn’t mean it’s wrong, or that some version of it isn’t true (e.g., maybe simulation is necessary when we’re young, but eventually we can bypass it). It just means that the claim has to rely more on introspection and theoretical arguments––e.g., the symbol grounding problem––than on empirical evidence.

And what about evidence to the contrary? Is there evidence that language understanding or world knowledge more generally can emerge in the absence of sensorimotor input or simulation? I’ll explore that topic in the next post.

Notes

[1] It’s worth noting that this notion of “simulation” goes even deeper than simply inferring that snow is white and a polar bear’s nose is black. When we comprehend that passage, we’re also constructing an explanation for why this incongruity matters. That is, a wary seal might observe the black nose of a polar bear in an expanse of white snow, and take that opportunity to slip away––depriving the polar bear of its meal.

[2] Typically these experiments have filler trials, in which the correct answer is “no”. Most experiments will have as many filler trials as critical trials, and will also usually ensure that the sentences/objects they mention don’t differ systematically from those in the critical trials. (The easiest way to do this is to use the same sentences/objects in different versions of the experiment; in some versions, no sentence will reference a nail at all, such that the picture of a nail is a filler trial.)

[3] Keep in mind, of course, that participants are not explicitly instructed to attend to things like the orientation of the pictures or objects.

[4] There’s a separate, deeper question of what comprehension is. I’ll return to this issue later in the series of posts.

[5] I should also note, however, that spotty evidence does not entail that a claim is false. There’s a difference between an absence of positive evidence and evidence of absence, after all.

[6] “Struggle to comprehend” is a somewhat thorny notion; this is discussed later in the post.

[7] As far as I can tell, the paper doesn’t actually list examples of its stimuli, so these are just guesses.

[8] Technically, the authors also control for a few other possibilities, including a “sham stimulation” condition (which has no effect). They also find that TMS to the right hemisphere doesn’t produce the same effects––which makes sense, because for most people, language is left-lateralized.

[9] To be fair, this is not at all unique to this particular literature.

[10] This will be discussed more in the rejoinder to this post, which covers counter-evidence.