On reading the "classics"

The value of academic archaeology

Every scientific field has its foundational texts: pieces of scholarship that shaped a discipline or even created new paradigms. Those same fields also often have a forgotten detritus of sorts: research programs—sometimes proposed by those same foundational authors—lost to the sands of time. Is there any value in revisiting these texts, forgotten or otherwise?

A recent online debate centered around a version of this question. Namely, are contemporary economists and psychologists deficient in their scholarship if they haven’t read Marx or Freud, respectively? I’m not really interested in answering this specific question.[1] But the debate it provoked did remind me that I’ve been meaning to write an essay about this broader topic for a while.

I’ll state the problem plainly. If you’re a scientist, your primary job is to produce knowledge. Doing this requires staying abreast of recent empirical findings and research questions, which means there are generally many papers to read and too little time to read them. In this context, what is the role of foundational texts—or forgotten ones—in the education and daily practice of a scientist?

The case against reading the classics

One case against reading the classics begins by conceptualizing a scientific field as, essentially, a matrix of propositional claims about the phenomena of interest. That is, what really matters are the ideas themselves and how they relate to each other: not who originally expressed them, or how or when they expressed them. It follows that there might be more or less effective ways of conveying an idea, and you might also think that an idea can be “refined” over decades of theoretical and empirical research. If this is true, then it’s not clear why one would benefit from reading the original formulation of the idea. Instead, one should read the best formulation of the idea, which might well be in a paper published just last year.

It might help to be specific. If you want to learn calculus, or even contribute to it, it’s not clear that you really need to read the work of Newton or Leibniz. The same is probably true of astronomy and Galileo or Kepler. The assumption here is that modern scholarship has effectively distilled the findings of these thinkers and that you’re better off building your knowledge base with those distilled versions—and then making sure you stay up to date with the latest theoretical and empirical “updates” in the literature. There’s a sense in which this assumption is almost Platonic in nature: there are Ideas, and there are better and worse ways to package them; the best course of action is to learn those Ideas through the best packaging, and then contribute new Ideas.[2]

That doesn’t mean it’s useless to revisit those older works, of course. It might be enjoyable and interesting to read Newton, and a historian of math or physics would obviously find it essential for their scholarship. But the core argument is that it may not be the most effective use of time when it comes to producing knowledge. It’s more important to stay up to date on the latest findings and theories, which probably means reading contemporary articles published in field-specific journals.

There’s value in the past

In this context, why should a practicing scholar revisit foundational texts—much less forgotten research programs?

I think there are a few potential arguments one could make here. I’ll list them out briefly, and then I’ll focus on the one I find most persuasive and meaningful.

One argument is that reading these older works might actually make you more efficient at producing knowledge. Among psychologists, there’s a running joke that if you need an experiment idea, you should crack open something by William James, as you’re bound to find some inspiration there. Beyond finding new ideas, you might also discover that a research question you’re interested in has already been investigated. Perhaps it’s a dead-end: this can be disappointing, but as the saying goes, it’s much better to spend a week in the library than a year in the lab. Or perhaps the findings were promising, but the lead researcher abandoned the project: that’s a potential lead you could follow up on. A version of this argument is presented in a Works in Progress article on “sleeping beauties” and how to find them.

Whether we’re discussing a foundational or forgotten text, the argument here is roughly the same: there’s some piece of knowledge contained within that text that might help make you a more productive scientist.

I find this argument somewhat persuasive. But if I’m honest, the reason I’m personally attracted to reading older texts isn’t that I expect them to make me more productive—at least not in a direct, measurable way. Rather, I derive real pleasure from reading those pieces of scholarship. In part that’s because older scientific writing tends to be more interesting to read: there were fewer formal constraints on how to write, how many papers you needed to cite, and so on. Thus, you’re more likely to come across interesting, novel turns of phrase like this:

I am a man who is half and half. Half of me is half distressed and half confused. Half of me is quite content and clear on where we are going. (Newell, 1973)[3]

But it’s not just their relative stylistic freedom that appeals to me. Older texts—at least in my field—are more likely to build an argument from “first principles” that’s simultaneously exhaustive and comprehensible, and there’s something exhilarating about reading that on the page.

Above all, I think reading older texts contributes to a feeling of epistemic humility. It’s easy to feel like your own ideas are original, but in my experience that’s actually rarely the case. There really is nothing new under the sun—and while some find that discouraging, it’s also a wonderful feeling to know that the contents of your own mind are in some sense an echo of all that came before. Further, the arguments presented in those texts are almost always more thoughtful than the versions of them presented today, especially by their opponents. It’s tempting to look back and laugh at the conceptual follies of the past, but observing the care with which those writers crafted their arguments inevitably draws one’s attention to the likelihood that one is making analogous errors.

What I learned from reading Jerry Fodor

Jerry Fodor was an influential philosopher and cognitive scientist. He’s probably best known for his work on modularity, which he introduced and popularized in The Modularity of Mind. The basic notion of a “module” is that we can understand the mind as consisting of a collection of distinct operations or functions, each of which is “encapsulated”: that is, while modules might interact with other modules (much like functions or objects in computer programming can interact with each other), each module is also self-contained. While this idea was by no means new—it goes back at least to the theory of faculty psychology—Fodor cast it in computational terms that aligned better with the dominant Cognitive Science paradigm of the time (1980s). The idea is also an important assumption for evolutionary psychology: if modules are informationally and neurally distinct, it is more plausible that they have each been selected for and can be understood in evolutionary terms.

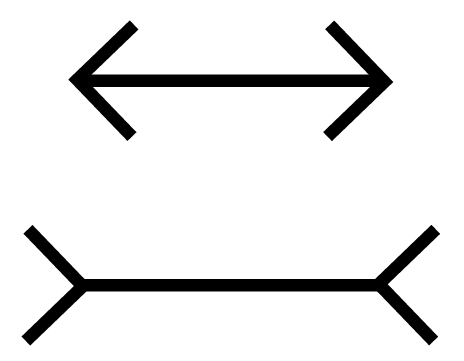

A common argument for modularity in visual perception is the existence of optical illusions. Take the Müller-Lyer illusion depicted above: even knowing that the lines (apart from the arrows) are the same length, it’s virtually impossible to perceive them as such. This supports the idea that at least these components of visual perception are modular—or as Fodor might say: impenetrable.[4]
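To make the programming analogy concrete, here is a toy sketch in Python of what “informational encapsulation” amounts to in the Müller-Lyer case. Everything in it is an illustrative assumption, not Fodor’s formalism: the function names are hypothetical, and the distortion factors are invented numbers chosen only so that one fin configuration inflates apparent length and the other shrinks it. The point is structural: the perceptual function takes only the stimulus as input, so there is no channel through which the belief that the lines are equal could reach it.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Line:
    length: float  # physical length, arbitrary units
    fins: str      # "fins_out" or "fins_in" (hypothetical labels)


# Invented distortion factors for illustration only; not empirical estimates.
FIN_DISTORTION = {"fins_out": 1.1, "fins_in": 0.9}


def visual_length_module(line: Line) -> float:
    """Encapsulated 'module': its only input is the stimulus itself.
    No argument exists through which beliefs could alter its output."""
    return line.length * FIN_DISTORTION[line.fins]


def central_system(a: Line, b: Line, believes_equal: bool) -> dict:
    """Integrative 'central' process: it sees the module's outputs and
    background beliefs, but it cannot change what the module reports."""
    looks_equal = abs(visual_length_module(a) - visual_length_module(b)) < 1e-9
    return {
        "looks_equal": looks_equal,                     # what perception delivers
        "judged_equal": believes_equal or looks_equal,  # what we conclude
    }


a = Line(10.0, "fins_out")
b = Line(10.0, "fins_in")
print(central_system(a, b, believes_equal=True))
# {'looks_equal': False, 'judged_equal': True}
```

In this sketch, knowing the lines are equal changes the judgment but not the percept, which is the pattern the illusion is usually taken to show.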

When I entered graduate school in 2016, I was generally familiar with the notion of modularity—but primarily as a foil to an alternative (and favored) account of the mind and its structure, which emphasized the distributed, integrated nature of mental functions. For example, while a modular view of the mind might treat “language” and “vision” as distinct modules, a more distributed view might claim it’s harder than you’d think to draw a firm boundary—as evidenced by top-down effects of language, context, or attention on visual perception.

I mention all this to set the stage: from an epistemic standpoint, my orientation towards Fodor and the notion of modularity was as a kind of “competing account” to the account I favored. That is, I had a bias. This is obviously not ideal from the standpoint of science, though I’d say it’s (unfortunately) quite common in many entrenched debates in Cognitive Science.

In this case, my bias was almost aesthetic in nature: it was less about the evidential basis for modularity—I was aware there was strong, rigorously collected evidence in favor of it—and more about what I took to be a kind of hubris. It seemed arrogant to me to assume that the way we carved up the mind into distinct “modules” would correspond to the reality of the thing itself. In contrast, the more integrated and distributed view felt like the epistemically humble starting assumption. I think this is important to emphasize because I suspect it’s more common than many researchers let on. We have temperamental or aesthetic biases towards or against different accounts of the phenomenon we’re interested in, and this tends to entrench us in our respective camps. That means that even when we encounter empirical data for or against different hypotheses, these aesthetic biases make us slower to “update” our worldviews than we might otherwise be.[5]

Throughout graduate school, I grew frustrated by this bias in myself, but I wasn’t sure how to counteract it. It was hard to overcome the assumption that the non-modular view was the humbler starting place. Then, in my fourth year, I decided somewhat spontaneously to read Fodor’s The Modularity of Mind. It’s very short and very well-written, so it didn’t take long. Reading it was probably one of the most impactful intellectual experiences I had in graduate school: not because I changed my mind about the underlying debate, but because it changed my aesthetic orientation. In short, I realized that Fodor’s account can also be understood as beginning from a place of epistemic humility.

Fodor makes the case for modules from first principles. Basically, there are likely certain mental functions for which rapid and efficient computation is especially important. Suppose successful visual perception required “checking in” with every other sensory modality and all conceptual and procedural knowledge we have about the world: that seems like it’d slow things down considerably. Instead, partitioning mental functions into domain-specific modules allows those operations to be carried out efficiently, and in a manner that doesn’t depend on every other aspect of the mind. These modules would have been subject to distinct, specific evolutionary pressures.

Yet crucially, Fodor doesn’t claim all of cognition is modular. He acknowledges that a great deal of cognition is domain-general and impossible to partition into distinct functions; he calls this integrative part of the mind the “central processor” or “central system”. Many aspects of cognition do depend on successful integration of information across modalities or modules—that’s the job of the central processor. It’s even possible that most of cognition is like this. Before reading Fodor, I didn’t realize that he emphasized this point, and it changed how I thought of the argument for modularity. It always seemed a little preposterous to assume that everything could be described as a module, and so it was reassuring to see that Fodor didn’t, in fact, assume that. That was an error on my part that influenced my aesthetic orientation towards the account.

This is where the epistemic humility comes in. Fodor suggests that it’s virtually impossible for us to get a handle on what’s going on in the central processor. If everything is integrated with everything else, it’s not really tractable to design experiments that isolate discrete variables and measure their impact on each other. Even if we did design such an experiment, it would simply not be representative of reality: we’ve artificially partitioned off the space—created modules of our own—and so any “understanding” we got from such an experiment would reflect only the map we’ve created, not the territory itself. Summarizing this claim, Fodor writes (p. 117):

If we assume that central processes are Quineian and isotropic[6], then we ought to predict that certain kinds of problems will emerge when we try to construct psychological theories which simulate such processes or otherwise explain them; specifically, we should predict problems that involve the characterization of nonlocal computational mechanisms. By contrast, such problems should not loom large for theories of psychological modules.

From that perspective, the notion of modularity is a kind of epistemic hope. If the mind isn’t modular at all, the argument is that it might be too hard to understand how it works. If parts of the mind are modular, we should focus our attention on those and try to understand them as best we can.
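A rough back-of-the-envelope count suggests why an isotropic system resists the isolate-one-variable style of experiment. The sketch below is my own illustration under heavy simplifying assumptions, not anything Fodor computes: treat each mental process as a node, count a “link” as a potential direct dependency, and compare a system where any process may depend on any other with an idealized one split into fully encapsulated modules of a fixed, arbitrarily chosen size.

```python
from math import comb


def isotropic_links(n: int) -> int:
    """Potential direct dependencies when any process may touch any other."""
    return comb(n, 2)


def modular_links(n_modules: int, module_size: int) -> int:
    """Potential dependencies when processes interact only within their own
    module (an idealized, fully encapsulated case with no cross-module links)."""
    return n_modules * comb(module_size, 2)


MODULE_SIZE = 5  # arbitrary illustrative choice
for n_modules in (2, 4, 8):
    n = n_modules * MODULE_SIZE
    print(n, isotropic_links(n), modular_links(n_modules, MODULE_SIZE))
# 10 45 20
# 20 190 40
# 40 780 80
```

Under these assumptions, the isotropic count grows roughly with the square of the number of processes, while the modular count grows only linearly with the number of modules. Since each potential link may or may not be active, the number of candidate wiring patterns is 2 raised to that count, so even a modest isotropic system quickly outruns what a handful of controlled experiments could discriminate.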

As I mentioned above, thinking about modularity this way was a frameshift for me. Before, I thought it seemed arrogant to assume the mind worked according to the typology of functions we devised. After reading Fodor, I realized that there’s a similar arrogance in assuming we could understand an integrated, distributed system like the so-called “central processor”. To be clear, I still tend to think of mental functions as pretty integrated, and temperamentally I tend to be most interested in the most plausibly integrated things—like the relationship between social cognition, grounding, and language use—but I know that such a system may not prove comprehensible to the empirical tools I will nonetheless employ.

Why read the classics?

There’s only so much time in the day. Why spend it reading old things, especially if the ideas those texts contained have become more “refined” over time?

I’m not here to convince you to do anything—if you don’t want to read foundational texts, I think that’s fine. But speaking personally, I’ve found that there are often subtle insights that you get from reading those older works. It’s true that time often works to refine those ideas and distill them into what might be called a “purer” form. But sometimes what we call distillation is actually a kind of flattening of those ideas into symbols that lose some of their initial nuance; we know the map but not the territory. And in my limited experience, it’s worth occasionally taking the time to tread that older ground.

Notes

[1] I am not an economist, so the question of whether economists ought to read Marx is not really mine to answer. I am, however, a cognitive scientist, and Freud has clearly been incredibly influential in shaping the trajectory of academic and clinical psychology. Opinions of Freud’s ideas range widely, but I think it makes sense to credit him with at least popularizing the notion of the unconscious. While Freudian psychology is generally less relevant to modern cognitive psychology as typically practiced than, say, the work of William James—or the “cognitivists” of the ~1950s—the notion that there’s a vast ocean of cognitive stuff happening outside the realm of our conscious awareness has remained a centerpiece of pretty much all theories of cognition.

[2] It’s worth noting here that I used examples from math and physics, the two scientific disciplines perhaps most associated with a kind of “scientific realist” view, i.e., that we are progressively building up more and more true statements about the nature of the universe or reality.

[3] That’s not to say modern papers are entirely lacking in conceptual flair. As I’ve mentioned before, I’m a big fan of Raji et al. (2021), which opens this way:

In the 1974 Sesame Street children’s storybook Grover and the Everything in the Whole Wide World Museum [Stiles and Wilcox, 1974], the Muppet monster Grover visits a museum claiming to showcase “everything in the whole wide world”. Example objects representing certain categories fill each room. Several categories are arbitrary and subjective, including showrooms for “Things You Find On a Wall” and “The Things that Can Tickle You Room”. Some are oddly specific, such as “The Carrot Room”, while others unhelpfully vague like “The Tall Hall”. When he thinks that he has seen all that is there, Grover comes to a door that is labeled “Everything Else”. He opens the door, only to find himself in the outside world.

[4] Since my focus here is not on optical illusions per se, I’m going to leave aside the longstanding debate around the variance in perception of the illusion and its origins—but it’s an interesting discussion!

[5] My contention here isn’t even that these aesthetic biases are bad. But they are biases, and they reduce the relative influence of empirical data on our worldview.

[6] By “Quineian”, Fodor means the holistic character of the central processor, alluding to the philosopher Quine’s notion of an interconnected “web of belief”. By “isotropic”, Fodor borrows a geometric property (a space is isotropic if it is uniform in every direction) to describe a situation in which every process or function is potentially connected to every other process or function.
