Using neuro-imaging and language models to decode thoughts

Advances, limitations, and implications.

When I was an undergraduate at UC Berkeley, I took a Cognitive Neuroscience course with Psychology Professor Jack Gallant. On the first day of class, Dr. Gallant shared some of the latest research from his lab. Using functional MRI (or “fMRI”), a way to measure patterns of blood flow in the brain, his lab was able to “decode” images and videos they showed to people in the scanner.

You can watch this video on YouTube for an illustration, but the basic idea was this: subjects would watch a video (or see an image) they’d never seen before, while researchers scanned patterns of blood flow in their brains using fMRI. Using this information about what was happening in a subject’s brain, researchers tried to reconstruct, pixel by pixel, each frame of the videos they were watching. The reconstructions were by no means perfect, but they were pretty good.

To put it another way: without knowing which video a subject was watching, researchers had a decent chance of figuring it out from that subject’s brain activity.

This felt remarkable and even futuristic to me at the time. And yet there was nothing mystical about this finding. It relied on concrete advances in neuro-imaging (fMRI) and improvements in machine learning models. The researchers used these models to learn the mapping between video and brain activity (an “encoder”), and then the reverse mapping from brain activity to video (a “decoder”). It also relied, of course, on the assumption that the activity of a person’s visual system contains information about what they’re looking at—an assumption that virtually all cognitive scientists would readily accept.

My own research ended up focusing on language, which took me in a different direction from Dr. Gallant’s work on vision, but I’ve thought about this finding at various times over the years. Then, in May of this year, a new paper was published in Nature Neuroscience with the title:

Semantic reconstruction of continuous language from non-invasive brain recordings

The paper’s senior author was Alex Huth, a former student of Dr. Gallant’s (and now a professor). The other authors were Jerry Tang, Amanda LeBel, and Shailee Jain.

The idea here was similar in spirit, but broadened its focus from vision to language. Specifically, the researchers asked whether they could use an analogous “encoder” and “decoder” pipeline to figure out what their subjects were listening to—and in some cases, what they were thinking.

My goal with this post is to explain the basics of what those researchers actually did, then discuss the potential applications and implications.

Tooling: the basics

In order to understand what the researchers did, we need to know more about the tools they used and how they work. There were a few pieces of crucial technology that the researchers put together: 1) fMRI; 2) language models; and 3) statistics and machine learning. If you’re already familiar with each of those, feel free to skip to the next section.

fMRI tells us (roughly) what’s happening, where

The human brain is very complex. Precise estimates vary, but the human brain contains approximately 100 billion neurons, with many more connections between those neurons (called “synapses”).

Most neuroscientists agree that understanding what these neurons are doing is crucial to understanding cognition. But mapping out the activity of each neuron would require invasive surgery. As I’ve written before, this is why fine-grained neurophysiology relies on “model organisms” like rodents.

When it comes to human subjects, researchers often have to be content with coarser measures of brain activity. These measures all come with trade-offs. Some measures, like electroencephalography (or “EEG”), have pretty good temporal resolution but very poor spatial resolution: EEG tells you when there are changes in brain activity, but not where[1]. Other measures, like fMRI, have better spatial resolution but worse temporal resolution: they tell you (roughly) where there are changes in brain activity, but on a relatively slow temporal scale.

The reason that “where” something happens in the brain might matter is that many neuroscientists believe the brain involves some degree of localization of function. According to this theory, different parts of the brain are differentially involved in different cognitive functions—thus, names like “visual cortex” and “auditory cortex” actually refer to different regions of the brain. The actual extent to which functions are localized in this way is still a matter of debate, but it’s generally accepted that there’s some localization of function.

But fMRI can’t measure brain activity directly. Instead, it measures changes in blood flow and blood oxygenation within and across brain regions. Specifically, oxygenated and deoxygenated hemoglobin have different magnetic properties, and those differences can be measured with magnetic resonance imaging (or “MRI”). In turn, researchers believe that changes in blood oxygenation reflect changes in neural activity: when neurons fire, they consume energy, and the local blood supply responds by delivering more oxygenated blood to that region, which changes the oxygenation of blood there. This is why the fMRI signal is called the blood-oxygen-level-dependent (or “BOLD”) response.

The BOLD response, then, is a proxy for what researchers really want to know. Any proxy measure comes with issues of construct validity, so one major limitation of fMRI is uncertainty about whether we’re really measuring what we want to measure.

Another limitation is fMRI’s poor temporal resolution: typically, fMRI measures changes that happen at the timescale of 2-5 seconds. This sounds fast, but it’s quite slow compared to the speed of the brain: a single neuron’s firing (an action potential) is incredibly rapid, usually lasting about 1-2 ms. (In contrast, EEG can measure changes in brain activity on the millisecond scale.)

And finally, although fMRI has better spatial resolution than measures like EEG, it’s still quite coarse. The fMRI signal consists of “voxels” (3-dimensional pixels), with each voxel representing a small part of the brain (around 3 × 3 × 3 mm). The activity within a given voxel reflects the combined activity of all the neurons within that region, which can number in the hundreds of thousands.

Nevertheless, fMRI is still incredibly useful for researchers. The activity in each voxel can be represented as a single number, and the numbers for all voxels at a given point in time can be collected into a big list called a vector. Like word vectors (see the section below, and also my recent explainer piece on LLMs with Timothy Lee), these vectors are easy to use in a machine learning pipeline.
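As a rough illustration of that step, here is a minimal sketch that turns one scan into a list of voxel vectors. It assumes a hypothetical scan stored as a 4-D NumPy array (x, y, z, time) plus a boolean mask marking which voxels fall inside the brain; the array sizes and the helper name `volume_to_vector` are made up for illustration, and real pipelines load data with dedicated neuroimaging tools and apply many preprocessing steps first.

```python
import numpy as np

# Hypothetical fMRI run: a 10 x 12 x 8 grid of voxels sampled at 5 time points.
rng = np.random.default_rng(0)
scan = rng.normal(size=(10, 12, 8, 5))

# Hypothetical brain mask: True for voxels inside the brain, False outside it.
mask = np.zeros((10, 12, 8), dtype=bool)
mask[2:8, 3:9, 1:7] = True  # a 6 x 6 x 6 block, i.e. 216 "in-brain" voxels

def volume_to_vector(scan, mask, t):
    """Return the activity of every in-brain voxel at time point t as a 1-D vector."""
    return scan[..., t][mask]

# Stack one vector per time point into a (time points x voxels) matrix.
responses = np.stack([volume_to_vector(scan, mask, t) for t in range(scan.shape[-1])])
print(responses.shape)  # (5, 216)
```

Each row of `responses` is one moment in time, and each column is one voxel, which is the shape a regression model expects.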

Language models help figure out which words are likely

A language model is a tool for representing which words are most likely in a given context.

There are lots of ways to build a language model, but recent years have seen the rise of “large” language models, or LLMs: neural networks with millions or even billions of parameters, trained on billions of word tokens. If you’d like to know how LLMs work in detail, I’d recommend reading this explainer piece.

For our purposes here, there are two things you need to know about LLMs.

First, LLMs can be used to predict the next word in a sequence of words (e.g., “salt and __”). That’s what they’re trained to do, and that’s also how they can be used to generate words in response to a prompt: the LLM calculates which word is most likely to come next, and you can either choose that word or sample from the set of possible words weighted by their respective probability.
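Here is a minimal sketch of those two options, using a made-up probability distribution for the context “salt and __” (the numbers are illustrative, not taken from any real model). Greedy selection always takes the single most likely word, while sampling draws words in proportion to their probabilities.

```python
import random

# Hypothetical next-word probabilities for the context "salt and __".
next_word_probs = {"pepper": 0.85, "vinegar": 0.08, "water": 0.05, "sugar": 0.02}

def greedy_choice(probs):
    """Pick the single most likely next word."""
    return max(probs, key=probs.get)

def sample_choice(probs, rng):
    """Sample a next word, weighting each candidate by its probability."""
    words = list(probs)
    weights = [probs[w] for w in words]
    return rng.choices(words, weights=weights, k=1)[0]

rng = random.Random(42)
print(greedy_choice(next_word_probs))  # pepper
print([sample_choice(next_word_probs, rng) for _ in range(5)])
```

Sampling will usually return “pepper” too, but occasionally picks a less likely word, which is why sampled text tends to be more varied than greedy text.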

Thus, you can think of an LLM as a tool for narrowing the search space of possible upcoming tokens. Suppose you see a sentence like:

I walked to the …

Without knowing anything about the structure of language, it’s hard to know how to select among possible words. And if you know lots of words (e.g., tens of thousands), then selecting among them randomly will probably result in some strange continuations.

A language model works as a filter on that search space. Rather than selecting among words randomly, we can select the words that a language model predicts are most likely in that context. And a language model that’s learned something about the structure of English will “know” that the words above are most likely to be followed by a noun (or an adjective and then a noun), which in turn is likely to be a place or location. You can also imagine how providing more context would give you even more information.
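One simple way to picture this “filter” is to keep only the k candidates a model rates as most likely and renormalize their probabilities. The sketch below uses invented probabilities for “I walked to the …”, and top-k filtering is just one illustrative choice, not necessarily what any particular system does.

```python
def top_k_filter(probs, k):
    """Keep the k most probable words and renormalize so they sum to 1."""
    top = sorted(probs.items(), key=lambda item: item[1], reverse=True)[:k]
    total = sum(p for _, p in top)
    return {word: p / total for word, p in top}

# Hypothetical probabilities a language model might assign after "I walked to the ..."
probs = {"store": 0.30, "park": 0.25, "bank": 0.20, "door": 0.15,
         "purple": 0.06, "quickly": 0.04}

filtered = top_k_filter(probs, k=3)
print(filtered)  # only "store", "park", and "bank" survive, rescaled to sum to 1
```

Odd continuations like “purple” or “quickly” are removed from consideration entirely, shrinking the search space from six words to three.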

Consider these two sentences:

I needed to cash a check so I walked to the …

I needed to buy some groceries so I walked to the …

In the first sentence, the word “bank” is probably the most likely continuation. In the second, a word like “store” or “market” is probably the most likely.

The authors used an older language model called GPT-1. This was the precursor to the more widely known GPT-4. GPT-1 is smaller and less powerful than GPT-4, but it’s still useful for what the authors needed: a way to narrow down the space of possible next words.

The second thing you need to know about LLMs is that they have multiple layers, and each layer consists of a large vector of numbers representing the input word. Again, I recommend reading our explainer piece for more details on this. But the key insight is that any given word (e.g., “walked”) can be represented as a list of numbers called a vector. The values of those numbers will depend on the position of the word in the sentence, which words came before the word, and of course, the word itself. Those numbers are also sometimes called semantic features.
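To make the idea of context-dependent vectors concrete, here is a deliberately simplified toy; it is not how GPT-1 actually computes its layers. Each word’s vector combines a (hypothetical, randomly generated) static embedding for the word, a decaying summary of the words before it, and a small position-dependent term. The function name `toy_contextual_features` and all numbers are illustrative assumptions.

```python
import numpy as np

rng = np.random.default_rng(0)
vocab = ["i", "needed", "cash", "walked", "to", "the", "bank"]
DIM = 8
# Hypothetical static embeddings: one random vector per word (a real LLM learns these).
static = {word: rng.normal(size=DIM) for word in vocab}

def toy_contextual_features(words, decay=0.5):
    """Toy stand-in for an LLM layer, returning one row per word.
    Each row mixes the word's own embedding, a decaying summary of the
    preceding words, and a small term that depends on the word's position."""
    rows = []
    context = np.zeros(DIM)  # summary of the words seen so far
    for position, word in enumerate(words):
        rows.append(static[word] + context + 0.01 * position)
        context = decay * context + static[word]
    return np.stack(rows)

a = toy_contextual_features(["i", "walked", "to", "the", "bank"])
b = toy_contextual_features(["i", "needed", "cash", "i", "walked"])
print(a.shape)                  # (5, 8)
print(np.allclose(a[1], b[4]))  # False: same word "walked", different context
```

The same word ends up with different numbers depending on what came before it and where it sits, which is the property that makes these vectors useful as semantic features.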

Encoder/decoder pipelines translate between modalities

The third critical component of this research is what’s called an encoder/decoder pipeline.

It might be helpful to think of this as a tool for “translating” between two different modalities: brain activity (as measured by fMRI) and language. Here, an encoder maps from a vector representing the words a person is listening to (the semantic features) to a vector representing their brain activity (the voxels).

The best way to illustrate this is probably the authors’ original figure showing what they did. Here’s part of Figure 1 from the original article, which shows how:

A language model is used to extract features from the linguistic input.

A mapping is learned from those features to the BOLD representation (i.e., the fMRI voxels).

You might be wondering what it means to “map” from the semantic features to the BOLD space. The mapping is defined by that matrix of weights labeled “Encoding model” in the figure above. The weights themselves are learned by fitting a statistical model to the data: in this case, a variant of linear regression called ridge regression (linear regression with an L2 penalty on the size of the weights). Each of those weights has been optimized so as to best predict the BOLD response from the semantic features.
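For readers who want to see what “fitting” means here, below is a minimal ridge-regression encoder on synthetic data, using the standard closed-form solution W = (XᵀX + αI)⁻¹XᵀY. It assumes the semantic features and BOLD responses have already been aligned to the same time points and centered (so no intercept is needed), and it omits details a real pipeline would need, such as accounting for the delay of the BOLD response and choosing the penalty strength by cross-validation. The name `fit_ridge` and all sizes are hypothetical.

```python
import numpy as np

def fit_ridge(X, Y, alpha=1.0):
    """Closed-form ridge (L2-regularized) regression.
    X: (time points x semantic features), Y: (time points x voxels).
    Returns W: (features x voxels), one column of weights per voxel."""
    n_features = X.shape[1]
    return np.linalg.solve(X.T @ X + alpha * np.eye(n_features), X.T @ Y)

# Synthetic data standing in for real stimuli and recordings (hypothetical sizes).
rng = np.random.default_rng(0)
X_train = rng.normal(size=(500, 20))   # semantic features per time point
true_W = rng.normal(size=(20, 50))     # unknown "true" mapping to 50 voxels
Y_train = X_train @ true_W + rng.normal(scale=0.5, size=(500, 50))  # noisy "BOLD"

W = fit_ridge(X_train, Y_train, alpha=10.0)

# Evaluate on held-out data: correlate predicted and observed activity per voxel.
X_test = rng.normal(size=(100, 20))
Y_test = X_test @ true_W + rng.normal(scale=0.5, size=(100, 50))
Y_pred = X_test @ W
voxel_r = [np.corrcoef(Y_pred[:, v], Y_test[:, v])[0, 1] for v in range(Y_test.shape[1])]
print(f"mean held-out correlation: {np.mean(voxel_r):.2f}")
```

The L2 penalty (`alpha`) shrinks the weights toward zero, which helps when there are many features and noisy data; with real fMRI, held-out correlations are far lower than on this clean synthetic example.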

In the language of probability theory, the encoder represents a probability distribution over neural responses “R”, given the word sequence “S”: P(R|S). (The “|” notation typically means “given” when representing conditional probabilities.)

The decoder has the opposite goal—figuring out a probability distribution over word sequences “S” given a brain response “R”: P(S|R). Using Bayes’ theorem, P(S|R) could in principle be calculated in the following way:

$P(S \mid R) \propto P(S)\,P(R \mid S)$ [2]

That is, the probability over upcoming word sequences, given a particular neural state, is proportional to the product of two terms:

P(S): the probability distribution over upcoming word sequences in general, i.e., independent of the neural state.

P(R|S): the probability distribution over neural responses, given different word sequences.

We’ve already figured out how to calculate P(R|S): that’s the encoder’s job. And P(S) can be calculated using the language model; after all, the whole point of a language model is figuring out a probability distribution over upcoming words. Thus, the decoder is built by combining the encoder with the language model.
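To make the arithmetic concrete, here is a sketch that ranks a few candidate word sequences by adding log P(S), from a language model, to log P(R|S), from the encoder. Working in log space turns the product P(S) × P(R|S) into a sum. It assumes, purely for illustration, that the observed response R equals the encoder’s prediction plus independent Gaussian noise, so log P(R|S) can be computed from the squared prediction error; the candidates, prior values, predicted responses, and noise level are all hypothetical.

```python
import numpy as np

def log_posterior(log_prior, predicted_response, observed_response, noise_sd=1.0):
    """Unnormalized log P(S|R) = log P(S) + log P(R|S), assuming R = prediction + Gaussian noise.
    Constant terms that don't depend on S are dropped, since only the ranking matters."""
    sq_error = np.sum((observed_response - predicted_response) ** 2)
    return log_prior - sq_error / (2 * noise_sd**2)

observed = np.array([0.9, -0.2, 0.4])  # hypothetical voxel responses R

# Hypothetical candidates: (language-model log prior, encoder's predicted response)
candidates = {
    "I walked to the bank": (np.log(0.2), np.array([1.0, -0.1, 0.5])),
    "I walked to the store": (np.log(0.5), np.array([-0.8, 0.6, 0.0])),
    "I walked to the moon": (np.log(0.01), np.array([0.9, -0.2, 0.4])),
}

scores = {s: log_posterior(lp, pred, observed) for s, (lp, pred) in candidates.items()}
best = max(scores, key=scores.get)
print(best)  # I walked to the bank
```

Neither term wins on its own: “store” has the highest prior but fits the brain data poorly, and “moon” fits perfectly but is very unlikely under the language model, so the candidate that balances both comes out on top.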

Technically, the authors’ implementation is a little more complicated than this. Even with the language model, the space of possible upcoming words—represented by P(S)—is still far too vast. So the authors used a technique called “beam search” to further simplify the search over upcoming words. The details of how beam search works aren’t crucial to understanding the paper, but this was an important step for simplifying the search process.
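For the curious, here is a generic beam-search sketch (not the authors’ implementation). At each step it extends every sequence in the “beam” with possible next words, scores each extension by its language-model log-probability plus how well it fits the brain data, and keeps only the best few. The toy language model `TOY_LM` and the scoring function `toy_fit` are hypothetical stand-ins for a real LM and encoder.

```python
import math

def beam_search(start, next_words, fit_score, beam_width=2, steps=3):
    """Return the best word sequence found, keeping `beam_width` candidates per step.

    next_words(seq) -> dict mapping candidate next words to LM probabilities.
    fit_score(seq)  -> how well seq explains the brain data (higher is better).
    """
    beam = [(start, 0.0)]  # (word sequence, cumulative LM log-probability)
    for _ in range(steps):
        expanded = [
            (seq + [word], lm_logp + math.log(p))
            for seq, lm_logp in beam
            for word, p in next_words(seq).items()
        ]
        if not expanded:
            break
        # Rank every extension by LM prior plus fit to the brain data, then prune.
        # Sequences with no possible continuation simply don't appear in `expanded`.
        expanded.sort(key=lambda item: item[1] + fit_score(item[0]), reverse=True)
        beam = expanded[:beam_width]
    return beam[0][0]

# Hypothetical toy language model: next-word probabilities given the last word.
TOY_LM = {
    "i": {"walked": 0.6, "drove": 0.4},
    "walked": {"to": 0.9, "home": 0.1},
    "drove": {"to": 0.8, "away": 0.2},
    "to": {"the": 1.0},
}

def toy_next_words(seq):
    return TOY_LM.get(seq[-1], {})

def toy_fit(seq):
    # Hypothetical stand-in for the encoder: pretend the recording matches "drove" best.
    return 2.0 if "drove" in seq else 0.0

print(beam_search(["i"], toy_next_words, toy_fit))        # ['i', 'drove', 'to', 'the']
print(beam_search(["i"], toy_next_words, lambda s: 0.0))  # ['i', 'walked', 'to', 'the']
```

Without brain data, the language model alone prefers “walked”; adding the fit score steers the search toward “drove”, while the beam keeps the number of sequences under consideration small at every step.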

The full pipeline is illustrated in the figure below, again from the paper. You’ll note that part (a) is the encoder model, i.e., what I already showed above; part (b) represents how the language model (LM) and BOLD responses are used to produce candidate interpretations or “decodings”.

Just to reiterate: the idea behind this pipeline is to create a system for “translating”—first from language to neural activity, and then back from neural activity. The success of that pipeline in turn depends on:

How well the language model represents relevant semantic features.

The fidelity of the neural recordings.

The empirical relationship between the semantic features and the BOLD response, i.e., to what extent one can be predicted from the other.

What the researchers did—and what they found

The researchers invited several[3] subjects into the lab. In the fMRI scanner, each subject listened to 82 fifteen-minute stories from two radio shows: The Moth Radio Hour and Modern Love. Note that this wasn’t all done in a single session: along with a preliminary session in which a “localizer”[4] task was performed, the experiment took place over 16 separate sessions for each subject. The subjects also took part in a few different conditions, including passive listening (just listening to the audio), imagined speech (trying to recall what they’d heard), and more; I’ll discuss those in more detail when we come to that part of the results.

Does the decoder work?

The first major question was: how well could the researchers decode what words a given subject was listening to? Using the encoder/decoder pipeline above, researchers could compare the target words (what the subject heard) to the decoded words (what the decoder predicted the subject heard).

Measuring whether the decoder “got it right” can be a little tricky. One way to do it is just to count the number of words in common—but this wouldn’t capture scenarios where the decoder captured the “gist” of the input but not the precise words used. Thus, the authors used a few different measures of similarity, which were designed to capture not just lexical overlap but semantic similarity. For all subjects and all measures, the decoder performed better than you’d expect by chance when using data from the entire brain.
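To see why purely lexical measures can miss the “gist”, here is a sketch of word error rate, a standard lexical measure: the minimum number of word substitutions, insertions, and deletions needed to turn one passage into the other, divided by the length of the reference. This is an illustration of the general problem, not a reproduction of the paper’s evaluation, and the example sentences are invented.

```python
def word_error_rate(reference, hypothesis):
    """Word-level edit distance divided by the number of reference words.
    Lower is better; assumes the reference is non-empty."""
    ref, hyp = reference.split(), hypothesis.split()
    # dist[i][j] = edits needed to turn the first i reference words into the first j hypothesis words
    dist = [[0] * (len(hyp) + 1) for _ in range(len(ref) + 1)]
    for i in range(len(ref) + 1):
        dist[i][0] = i
    for j in range(len(hyp) + 1):
        dist[0][j] = j
    for i in range(1, len(ref) + 1):
        for j in range(1, len(hyp) + 1):
            substitution = 0 if ref[i - 1] == hyp[j - 1] else 1
            dist[i][j] = min(dist[i - 1][j] + 1,                  # deletion
                             dist[i][j - 1] + 1,                  # insertion
                             dist[i - 1][j - 1] + substitution)   # match or substitution
    return dist[len(ref)][len(hyp)] / len(ref)

reference = "i needed to cash a check so i walked to the bank"
print(word_error_rate(reference, reference))  # 0.0
print(word_error_rate(reference, "i had to get money so i went to the bank"))
```

The paraphrase conveys essentially the same meaning but still gets a high error rate, which is why measures that capture semantic similarity are useful alongside lexical ones.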

The researchers also asked whether the decoder still worked when using data from subsets of the brain, e.g., regions typically associated with speech and language vs. regions typically associated with sensorimotor experience. The decoder (mostly) still performed above chance for these regions, but less well than when using the entire brain. Interestingly, data from areas of the brain typically associated with language and speech didn’t always outperform areas of the brain associated with sensorimotor experience or planning (i.e., prefrontal cortex).

Does the decoder work for “imagined speech”?

One potential application of this technology would be in something called a “brain-computer interface” (or BCI). Such a BCI could potentially help patients who are still conscious but can’t produce speech, such as some people who’ve suffered a stroke or people with locked-in syndrome. If a decoder could reliably figure out what they wanted to say, it would likely provide a lot of relief and comfort to those patients, and also inform the actions of their caregivers.

The success of such a tool hinges on several things. One important factor is whether a decoder can decode imagined speech, as opposed to speech that a person is simply listening to. If it can’t, then it wouldn’t work very well in the BCI context.

To test this, the researchers ran another condition in which subjects had to mentally rehearse the story they’d just heard. They then used the decoder (which was trained on the listening condition) to predict which words the subjects were thinking about at any given point in time. Note that the “ground truth” here is a little harder to measure: for example, subjects may not have perfectly remembered which words appeared in a story. Nonetheless, the authors could still compare the original version of a story to the version they’d decoded from the subject’s brain activity.

Here are some examples from Figure 3 of their paper. The “Reference” column contains excerpts of the original story; the “Decoded (covert)” column contains the best guess of the words the subject was imagining at that time.

Qualitatively, I notice a couple things. First, the decoding is by no means perfect, and at times seems to actively reflect a distortion in the original context (“he stopped just in time” vs. “he drove straight up into my lane and tried to ram me”). Again, it’s unclear whether that distortion was caused by an issue with the decoder, or because the subject actually construed the original story that way. But second, the decoder is certainly capturing something like the “gist” of the original context. This is obvious simply by comparing the different stories: each reference story is much closer in its meaning to the decoded version than to the other reference stories. Thus, the decoder seems pretty good at capturing the ballpark meaning of the original story—even when subjects were just imagining the words.
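The informal comparison above (each decoded passage is closer to its own reference than to the others) can be made quantitative as an identification test: compute a similarity between every decoded passage and every reference passage, then check whether each decoded passage scores highest against its own reference. The sketch below uses simple bag-of-words cosine similarity as a stand-in for a proper semantic similarity measure, and the passages are invented for illustration.

```python
import math
from collections import Counter

def cosine_bow(a, b):
    """Cosine similarity between the bag-of-words count vectors of two strings."""
    ca, cb = Counter(a.lower().split()), Counter(b.lower().split())
    dot = sum(ca[w] * cb[w] for w in ca)
    norm = math.sqrt(sum(v * v for v in ca.values())) * math.sqrt(sum(v * v for v in cb.values()))
    return dot / norm if norm else 0.0

def identification_accuracy(decoded, references):
    """Fraction of decoded passages whose most similar reference is their own (same index)."""
    hits = 0
    for i, dec in enumerate(decoded):
        sims = [cosine_bow(dec, ref) for ref in references]
        if max(range(len(sims)), key=sims.__getitem__) == i:
            hits += 1
    return hits / len(decoded)

# Invented example passages (not from the paper).
references = [
    "a car came toward me in my lane and i had to swerve",
    "we went shopping for groceries at the market on sunday",
    "my sister called to tell me she got the new job",
]
decoded = [
    "he drove into my lane and tried to hit my car",
    "we bought food at the store over the weekend",
    "she told me on the phone that she got a job",
]
print(identification_accuracy(decoded, references))  # 1.0 (chance would be about 1/3)
```

With three passages, a decoder that carried no information would pick its own reference about a third of the time, so consistently beating that baseline is evidence the decoder is capturing something real.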

Does the decoder work across modalities?

Another interesting question is whether such a decoder would work, and how well, if the subjects received input in a modality other than language. For example, if subjects watched a movie, would the decoder still be able to “read out” their brain activity in terms of language?

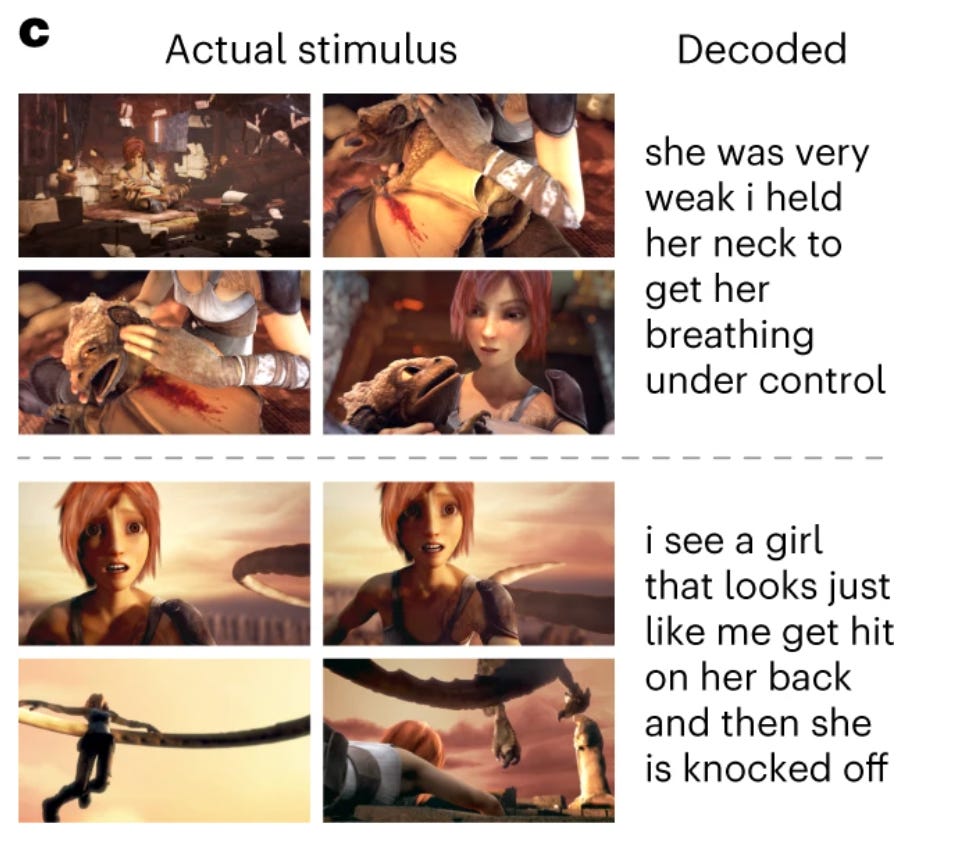

To test this, the researchers showed a movie to the participants, and recorded their brain activity. Then, again using the same decoder model they’d trained before, they produced predictions of the words the subject was thinking about.

Here’s an example of how the decoder did:

It’s harder to quantitatively assess the model’s performance. But qualitatively, once again, it looks like it’s capturing something about the “ballpark” meaning of the movie’s content—quite impressive for a system that was originally trained to map from language to brain activity, and then from brain activity back to language. It also suggests that the encoder/decoder pipeline might be extracting features that are somewhat independent of the specific modality tested—or at least correlated across modalities.

Can subjects resist decoding?

Another, more concerning, application of this technology would be to read someone’s thoughts against their will. The technology obviously isn’t at that point yet—and I’ll discuss more limitations later on—but it does raise the potential for massive invasions of privacy. This is something the authors thought about, and they wanted to address it head-on.

The authors tested this in two ways. First, they asked whether a decoder trained on one person’s brain could be used to predict or decode another person’s brain data; they found that cross-subject performance was very poor. This is crucial because it suggests that accurate performance for a given subject depends on having a decoder model already trained for that subject.[5]

Second, the authors asked whether subjects could overtly resist decoding. That is, if you try really hard to occupy your thoughts with something else, can you “shut out” the ability of a decoder to figure out what you’re thinking about? The authors tried out three resistance methods: counting (e.g., “count by sevens”), semantic memory (e.g., “name as many animals as you can”), and imagined speech (e.g., “tell a different story”). Meanwhile, the researchers played recorded speech to the participants and asked how well they could decode that speech from their brain activity. Resistance was strongest for semantic memory and imagined speech; counting didn’t really help compared to a passive listening condition.

As the authors write:

This demonstrates that semantic decoding can be consciously resisted in an adversarial scenario

Again, it’s unlikely that this technology will see widespread use anytime soon (if ever), but it’s important to know that at least at its current levels, decoding success can be thwarted by conscious resistance.

Remaining issues, and what this means

Clearly, this approach has come a long way in the past 10 years. Researchers have moved from relatively noisy reconstructions of video frames to predicting which words someone is listening to or even imagining. This technology could have important applications—some of which are unambiguously positive (e.g., helping stroke patients communicate with their loved ones), and others which are much more concerning (e.g., a new form of “enhanced” interrogation).

Yet there are a number of roadblocks lying between here and there.

The first major obstacle—for both applications, in fact—is that human brains are just very different from each other. Brains differ in their gross anatomy, and they also vary in terms of how exactly they respond to various stimuli. This means that (as the authors showed) an encoder/decoder trained on one person’s brain wouldn’t work very well for another person’s brain. That’s a big limitation, though it’s possible that advances in cognitive and neural “phenotyping”—clustering brains into discrete categories as a function of their structure or genetics—could help with this.

Another major obstacle is that fMRI scanners are very big. You can’t exactly lug them around with you, and setting one up for a session is time-consuming and expensive. That limits the practicality of this technology as a communicative aid.

All that being said, I also think it’s worthwhile taking a step back and noting how remarkable this research is. As a researcher, it’s sometimes easier and less vulnerable to feel cynical or pessimistic about the state of the field. But when I read this paper, I felt the same excitement I felt back as an undergraduate in Professor Gallant’s class. Despite the limitations I’ve mentioned, I think that what the authors of this paper have achieved is genuinely amazing—and the approach might well become more viable if we see improvements in language models and portable neuro-imaging technology over the years to come.

[1] Though there is extensive work trying to identify the “neural generators” of particular patterns in the EEG signal.

[2] Note that the “∝” symbol means “is proportional to”: the two sides differ only by a constant factor (here, 1/P(R)) that doesn’t depend on S.

[3] Technically, they recruited seven subjects total, but four of those subjects were used only for evaluating the cross-subject decoders, so the bulk of the empirical data come from the first three subjects.

[4] People all have different brains. Localizer tasks are used to identify which parts of a particular person’s brain respond to which kinds of stimuli.

[5] Notably, this is also a big issue for BCI applications.