About a month ago, I was working on February’s poll for paying subscribers and I decided I wanted some artwork to accompany the post.

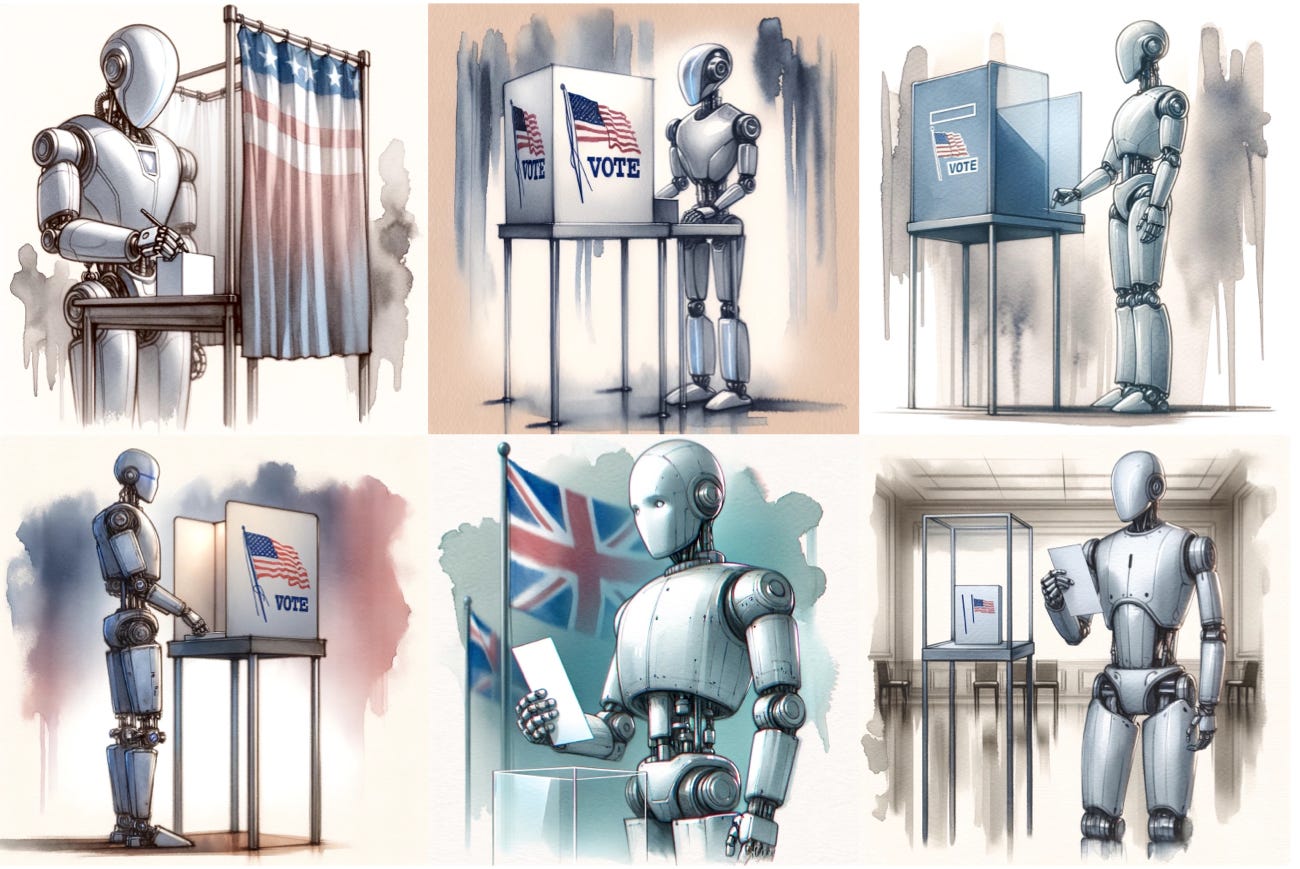

Specifically, I thought it’d be fun to feature an AI-generated image of a robot voting at the polls. I asked ChatGPT to do this, and it produced a nice-looking image that met my specifications. The only problem was that the post featured an American flag, and I had a mild preference for an image that was not specific to the United States. I told ChatGPT that I liked the image, but instructed it to take away the flag (please).

ChatGPT dutifully responded with an image, along with this message:

ChatGPT: Here's the updated watercolor sketch of a robot at the voting booth, now without the flag.

Unfortunately, despite ChatGPT’s claims otherwise, the image still featured an American flag!

Thereafter followed a series of repeated requests to take out the flag (pictured in the multi-panel figure below). In each case, ChatGPT appeared to “recognize” that the previously generated image contained a flag, and insisted that the new image complied with my request to remove said flag. But also in each case, the new image contained a flag (which at one point switched to the flag of the United Kingdom).

Interestingly, ChatGPT could give plausible explanations for its repeated failures, such as difficulties understanding negation. I even asked ChatGPT to generate its own prompt, and it responded as follows (bolding mine):

ChatGPT: To effectively prompt AI image generation tools while avoiding the use of negation, it's beneficial to focus on what you do want in the image rather than what you don't want. This approach helps by providing clear, positive instructions that guide the AI towards the desired elements and away from undesired ones. Here’s an example of how to reframe your request:

Instead of saying, "Generate a watercolor sketch of a robot voting, no flag," you might say, "Generate a watercolor sketch focused solely on a robot holding a ballot paper. The robot should be standing in a plain room with minimalist details. Ensure the scene includes only the robot, the ballot paper, and a simple, unadorned background."

In this revised prompt, the instructions are clear and direct about what should be included, and by specifying the content (robot, ballot, simple background), it implicitly excludes other elements like flags without directly stating it. This method tends to be more effective with current AI capabilities.

Hopeful, I tried this prompt—and ended up with the bottom-right panel of the image above. The flag was smaller but very persistent. It felt a bit like ChatGPT was trolling me.

(In case you’re interested: I eventually tried switching to a new chat window altogether, and this time ChatGPT’s prompt suggestion did work (as you can see in my original post). But it sure took a while to get there!)

Of course, this is just one anecdote and shouldn’t really be considered evidence of much. But the issue of LLMs and negation is not a new one. Other researchers, such as Allyson Ettinger and Gary Marcus, have argued that LLMs consistently fail to understand negation in a humanlike way. So this experience—along with the fact that I was teaching a class on LLMs and cognitive science—made me curious to learn more about the state of the empirical evidence.

“Not”, briefly considered

In language, negation is a mechanism for asserting that some proposition is not the case. For example, the sentence “The cat is on the mat” represents a positive claim about the state of the world, whereas “The cat is not on the mat” represents the opposite of that claim. The latter (negated) sentence doesn’t specify where the cat actually is, but it’s definitely not on the mat.

English has a few ways of expressing negation directly. Sometimes this is done through words (e.g., “not” or “never”), and sometimes it’s accomplished through affixes that attach to a word (e.g., “un-” or “in-”). One traditional way of viewing these words and affixes is as operators, which invert the truth conditions that would otherwise satisfy the following expression.

To make that a little more explicit: “A robin is a bird” would be a true statement about a world in which robins are types of birds, while “A robin is not a bird” would be false in such a world—since the latter statement should have the opposite truth conditions as the former statement.

There are a few things to point out here:

Note the connection to logic or programming. This view of meaning has its roots in truth-conditional semantics, in which the meaning of an utterance is in some sense reducible to its truth conditions. Not everyone subscribes to this view.

There’s an interesting asymmetry between the positive and negative examples I’ve listed. “The cat is on the mat” represents a specific claim about the cat’s location, while “The cat is not on the mat” feels much more open-ended about the actual location of the cat. We’ll return to that asymmetry later.

Related to above: a sentence like “The cat is not on the mat” might still make you think—just for a second—about a cat sitting on a mat. We’ll come back to that too.

The types of negation discussed here are mostly direct. There are more indirect forms of negation too, as this paper makes clear (e.g., the word “infamous” doesn’t mean “not famous”, but rather “famous for the wrong reasons”).

With all that in mind, let’s turn to the evidence on negation in large language models.

Of LLMs, elephants, and robins

About a month ago, Gary Marcus wrote about how the continued inability of image generation tools like DALL-E to understand negation reflects more foundational problems in how those systems work. That post, along with an older one, has some comical examples from Marcus and others involving attempts to generate an image of a room without an elephant in it. As Marcus notes, there’s probably some prompt that will do this successfully—but the point is that ChatGPT reliably fails to follow the user’s instructions, and (he argues) this indicates a lack of deep understanding.

But it’s not just image generators and elephants that pose a problem. A 2020 study by Allyson Ettinger found that BERT, an older language model, also struggled with negation. Ettinger presented BERT with sentences from a 1983 psycholinguistics study that looked something like this:

A robin is a _____.

A robin is not a _____.

Because BERT is a language model, Ettinger could ask about the probability BERT assigned to various completions of those sentences. In the first case, “bird” would be a reasonable (and correct) completion; in the second case, anything other than “bird” would be correct. However, this isn’t what she found—in both cases, BERT assigned a high probability to words that should complete the “true” statement (e.g., “bird”).

For example, the most likely words (as predicted by BERT) for “A robin is not a ____” were: robin, bird, penguin, man, fly. The most likely words for “A hammer is not a ____” were: hammer, weapon, tool, gun, rock. Some of these are correct (a robin is not, indeed, a penguin or a man!), but the highest-probability words were either the subject itself (what is a hammer if not a hammer?) or a taxonomic category to which that word belongs to (e.g., “bird”).

This pattern held for most of the sentences. In general, BERT was pretty good at predicting the correct (true) completion for affirmative sentences (e.g., “A robin is a ____”), but pretty bad at predicting the correct (false) completion for negated sentences (e.g., “A robin is not a ____”).

Notably, Ettinger interprets these results as supporting a view in which LLM predictions are distinct from the mechanisms underlying human language comprehension (bolding mine):

Whereas the function of language processing for humans is to compute meaning and make judgments of truth, language models are trained as predictive models—they will simply leverage the most reliable cues in order to optimize their predictive capacity.

So what about humans?

Earlier, I mentioned that hearing a sentence like “The cat is not on the mat” might—at least for a brief moment—make you imagine a cat sitting on a mat. This raises the question: how do humans respond to sentences like the one Ettinger presented to BERT?

Fortunately, those sentences were adapted from a 1983 study on humans, so comparing them is pretty straightforward. In this study, humans read sentences like “A robin is (not) a bird” while electrical activity from their brain was recorded using EEG. Some sentences were affirmative, and some were negative; additionally, some ended with the associated category (e.g., “bird”), and some with a different category altogether (e.g., “rock”).

Importantly, while techniques like EEG don’t always tell us much about where activity is happening in the brain, they do provide pretty good temporal precision. And in research using EEG on humans, there’s a well-established finding called the “N400”, in which more unexpected or more surprising words evoke a more negative electrical amplitude about 300-500 milliseconds after presentation. Thus, the N400 can be used as a window into what things people find surprising, when.

In this study, the authors looked at the relative size of the N400 for a few different kinds of sentences:

Affirmative + True: A robin is a bird.

Negated + False: A robin is not a bird.

Affirmative + False: A robin is a rock.

Negated + True: A robin is not a rock.

Unsurprisingly, for affirmative sentences, they found a larger N400 (more “surprise”) when the sentence was false (“A robin is a rock”) than when it was true (“A robin is a bird”). But for negative sentences, they found the opposite pattern. This time, the participants had a larger N400 for the true statements (“A robin is not a rock”) than the false statements (“A robin is not a bird”).

As the authors note, this suggests that initial stages of processing for negated propositions doesn’t necessarily engage deeply with the truth conditions of the proposition. Rather, people seem to rely more on associative relationships (“robin” and “bird” are more related than “robin” and “rock”), which themselves could be rooted in world knowledge or even the statistics of language itself.

This is also consistent with the intuition I described earlier. When I tell you not to think of an elephant, you probably think—if only for a moment—about an elephant. Does this mean BERT’s acting like a human after all?

Two modes?

On the one hand, humans seem to rely on “cheap tricks” during language comprehension, which could be compared to BERT’s reliance on statistical cues. On the other hand, humans are clearly capable of understanding the truth conditions of a sentence like “A robin is not a bird”. After all, when given the time to reflect on whether a sentence is true or not, humans reliably indicate that “A robin is not a bird” is false and “A robin is not a rock” is true. Can these seemingly contradictory observations be reconciled? Are LLMs like humans or not?

One perspective, popularized by Daniel Kahneman, is that humans have multiple modes of processing: a faster mode driven by heuristics (“System 1”) and a slower mode that’s capable of more deliberation and reflection (“System 2”). (Or, if you’re not a fan of binary categories, cognition lies along a “spectrum” with those descriptions at the poles of that spectrum.) Under this interpretation, the initial insensitivity to negation reflects the faster, heuristic-driven mode (System 1), whereas the ability of humans to make correct judgments upon reflection reflects the slower, deliberate mode (System 2). Since BERT’s behavior is more like those initial stages of processing, one could draw the conclusion that BERT (and maybe other LLMs) resemble “System 1” processing but not “System 2” (and indeed, I’m not the first to make this comparison).

However, while I do think this comparison is illuminating, I wouldn’t jump to conclusions about the overall capacities of the systems being compared (i.e., humans vs. LLMs). Instead, I think it’s more helpful to think of this as a question about which processes are engaged in which tasks or at which times. Under time pressure, humans rely on cheap tricks; given time to reflect, humans can think more deeply. To me, predicting the next word in a sentence like “A robin is not a ____” feels more like the former. Is there a sense in which LLMs could be given “time to reflect” as well?

Time to think

The answer, so far, seems to be yes.

Asking LLMs to spell out their “reasoning” (as in chain-of-thought prompting) step-by-step generally improves their performance.1

Similarly, asking an LLM to output intermediate “reasoning” steps onto a separate scratchpad results in a boost to performance.

Training LLMs with a “pause token” again seems to yield performance improvements.2

Clearly, none of these are exactly the same as the processes involved in human reflection and deliberation. Indeed, one could even view them as “hacks” or “workarounds”, which are only necessary because we started from something completely unlike human cognition.

But that’s not exactly my view. I think they’re interesting examples of trying to approximate the remarkable complexity of human cognition with architectural innovations that—and I do think this is important—correlate with measured improvements on benchmarks designed to assess linguistic or mathematical abilities. They’re part of a broader trend towards embedding the base LLM mechanism (what we think of as “auto-complete”) into something like a cognitive architecture.

As I wrote above: I’m not sure how productive it is to assert that LLMs are or are not capable of doing something (e.g., understanding negation). It’s a convenient shorthand, but LLMs—and humans—are not just one thing. Rather, it might be more helpful (and certainly more precise) to ask which kinds of contexts or inputs facilitate which kinds of behaviors. I fall short of that terminological precision pretty often myself, but it’s something I’ll try to keep in mind moving forward.

Related posts:

There’s plenty of debate about whether this process is faithful to the underlying “reasoning” process, but in general there’s a reliable boost to performance on tasks like word problems. This paper, and a follow-up with the same first author, are a great pair to start with.

For a moment there, I thought the post would go in a different direction, i.e, that you were going to connect this phenomenon with the literature on how negation is also very difficult for (a) iconic depiction (Barwise, Abell, Aguilera, etc.), (b) mental models (Johnson and Laird, etc.) and (c) non-human cognition (J. L. Bermúdez, Jorge Morales Ladrón de Guevara, etc.). In other words, it is very hard to represent negative information but in symbolic format. However, it is usually taken for granted that most cognition is not symbolic. This seems paradoxical.

My own take on this is that negation is not a single phenomenon and that different sort of information that we may call 'negative' are cognitively processed in different ways. Some of it will be symbolic, but other will not. For example, some 'negative information' is actually architectural, i.e., it results from constraints in how information flows among modules. Thus, for example, we 'know' that today is not a place because temporal information is processed in relative isolation from spatial information. However, of course, this is not going to work for all sorts of negations.