The year is 1770, and an alleged artificial intelligence is on display in Schönbrunn Palace, Vienna. Its creator, Wolfgang von Kempelen1, announces that this chess-playing automaton is open for challengers. He also invites skeptics to inspect the contraption for evidence of tricks or deceit—opening the doors and cabinets to highlight that nothing, or no one, hides inside.

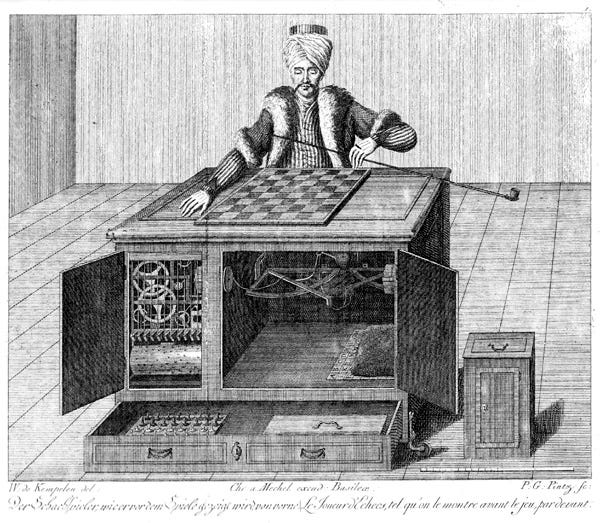

The contraption is called the “Turk”. Its design is very elaborate, featuring a life-sized model of a human head and torso, clad in robes and a turban (see the image below), along with elaborate networks of gears and clockwork that suggest a complex set of machinations lay “under the hood”, so to speak. It can even “converse” with spectators using a rudimentary letter board in English, French, and German.

The Turk’s first opponent is quickly defeated, as are its other challengers. In the following decades, it—along with Kempelen—enjoys a somewhat haphazard tour of Europe, where it encounters (and often defeats) other challengers. It also meets with skeptics, who insist that somewhere within its network of gears must be a human intelligence—some homunculus pulling the puppet springs.

Yet despite these skeptics, the Turk continues to impress opponents and spectators—including Napoleon Bonaparte—after Kempelen’s death. The machine continues changing hands, eventually ending up in the Peale Museum in Baltimore. In 1854, at the age of 84, the Turk is destroyed in a fire.

The year is 1920, and Czech writer Karel Čapek has just released his play Rossum’s Universal Robots. The play features a storyline that’s now commonplace. A group of artificial agents (“robots”) are created to perform factory work more efficiently; although they are initially happy to perform their labor, they eventually revolt and extinguish the human race.

The play also contributes to the popularization of the word “robot”—the precursor to the now-common “bot”—which is derived from a Slavic word meaning forced labor.

The year is 2005, and a new website called Amazon Mechanical Turk (or “MTurk”) has just launched. The conceit is simple: there are many tasks that a human can perform easily, but that are quite difficult for a machine. These “human intelligence tasks” (or HITs) include jobs like transcribing recorded speech, tagging various images, and filling out surveys. In turn, there are many humans looking for an extra buck or two, who are perfect candidates to perform these HITs.

MTurk is a service for matching “Requesters” (potential employers) with “Workers” (potential employees). It grows quickly in the first couple of years. By 2007, there are over 100K workers; by 2011, that number has grown to 500K.

In true gig economy fashion, enterprising Requesters construct novel tasks, and clever Workers engineer novel solutions with which to solve them. The website has a plethora of use cases. But over time, two modes of production become particularly dominant: human-subjects research (e.g., online psychology experiments and surveys) and dataset creation (e.g., tagging sentences and images for supervised machine learning).

The former use case (human-subjects research) stirs up some controversy at first—psychologists are used to conducting their studies on undergraduate students at major R1 universities, after all—but evidence grows that MTurk Workers are at least as attentive as undergraduate psychology students, and also considerably more representative of the broader USA population. A new participant pool has been unlocked.

The latter use case (annotation for supervised machine learning) seems at first like a free lunch, or at least a very cheap one. Supervised machine learning involves training a model to predict some kind of output from a set of features. For example, the “output” might be a label for an image (is this a cat or a dog?). The main challenge in this research is that training such a model requires a labeled dataset, and acquiring those labels is time-consuming and expensive. MTurk, it seems, provides an endless population of Workers ready to deploy their labor at a low cost. These labels are used to train increasingly sophisticated machine learning systems, some of which are deployed in commercial applications.

Meanwhile, concerns about fair practices arise; some researchers build tools to empower Workers; time marches on.

The year is 2018, and the term “Potemkin AI” is coined to refer to the automated systems enabled in part through MTurk’s web of Requesters and Workers. Author Jathan Sadowski writes:

Given that the name Mechanical Turk explicitly references the 18th century hoax, it appears that there is no intention to deceive users about the flesh-and-blood foundations of the system. MTurk is up front about how work is outsourced to real live humans…In this case, Potemkin AI provides a convenient way to rationalize exploitation while calling it progress.

An important thread of this critique is that the defects of Artificial Intelligence systems can be masked by offloading much of the “intelligence” to humans, hence the term “artificial artificial intelligence”:

In addition to outsourcing menial tasks, Irani explains how Potemkin AI like MTurk has helped compensate both technically and ideologically for the shortcomings of actual AI in completing cognitive tasks “by simulating AI’s promise of computational intelligence with actual people.”

There is, of course, something extremely strange about this scenario: the point of AI, ostensibly, is to replace the need for humans to perform particular tasks; thus, to the extent that a given automated system relies on human judgments for its training—or even, more drastically, for its moment-to-moment performance—one wonders where, in fact, the intelligence in such a system lies.

And also—and yet—this kind of distribution of responsibility and credit is by no means a new issue: any industrial system that seeks to automate or, at the very least, standardize the mode by which some output is produced almost by definition reduces that production process to something mechanical, narrows the ways in which humans can contribute their own labor to that process—such that it is no surprise to watch that aperture of human involvement shrink smaller still.

The year is 2022, and a team of psychologists publish the following paper in Perspectives on Psychological Science:

Webb, M. A., & Tangney, J. P. (2022). Too Good to Be True: Bots and Bad Data From Mechanical Turk. Perspectives on Psychological Science, 17456916221120027.

Apparently, this new pool of research participants is not without its problems. The authors present evidence that a non-trivial proportion of their sample—indeed, the majority—is “bad data”, i.e., observations contributed either by inattentive humans speeding through a survey or automated computer programs (“bots”).

The authors employ various exclusion criteria to identify how much of their sample falls into this category, and conclude:

This is a summary of how I determined that, at best, I had gathered valid data from 14 human beings—2.6% of my participant sample (N = 529).

In other words, approximately 97.4% of the sample is classified—according to the authors’ criteria—as “bad data”.

Debates can and will be had about whether this number overestimates the degree of fraud; some of the exclusion criteria are arguably overly strict. But regardless of the precise value, the number is likely quite large. To make it personal: in recent work, we used “attention checks” to identify problematic participants, and ended up excluding approximately half the initial sample.

As of June 14, 2023, the most recent entry in this saga is a paper with the title:

Artificial Artificial Artificial Intelligence: Crowd Workers Widely Use Large Language Models for Text Production Tasks

A Tweet thread on the topic can be found here, but the gist is also nicely summarized by an excerpt from the abstract:

We reran an abstract summarization task from the literature on Amazon Mechanical Turk and, through a combination of keystroke detection and synthetic text classification, estimate that 33-46% of crowd workers used LLMs when completing the task. Although generalization to other, less LLM-friendly tasks is unclear, our results call for platforms, researchers, and crowd workers to find new ways to ensure that human data remain human, perhaps using the methodology proposed here as a stepping stone.

In other words: about 40% of crowd work is already produced using Large Language Models (LLMs) like ChatGPT.

Hence the title: if MTurk “HITs” were “artificial artificial intelligence”, then bot-completed HITs are artificial artificial artificial intelligence.

The year is 2023, a number of papers are written and disseminated on the topic of replacing human participants with LLMs on topics as diverse as sentiment analysis, bot detection2, and even moral norms. I’m working on a paper of my own, exploring a topic I’ve written about recently: augmenting psycholinguistic datasets with LLM-generated norms.

There are a number of reasons why researchers might turn to such methods, such as cost and ease of use.

But another argument might go something like this: almost 50% of what researchers are paying humans to do on platforms like MTurk is already LLM-generated; why not go one step further and pay less to acquire data that’s explicitly LLM-generated—particularly if that data is generally aligned with human data?

Counterarguments exist, and so do counter-counterarguments.

LLMs are trained on a biased sample of mostly written English, so have limited generalizability (but human samples lack external validity too).

LLMs don’t do a good job approximating human behavior (but sometimes they’re better-correlated with human averages than the average human).

LLMs are too different from humans at a mechanistic level, so it simply doesn’t make sense to study humans by studying LLMs (but nearly all biologists rely on model organisms that may or may not be fully representative of the target population; plus, if the LLM quacks like a duck, why can’t we use it to study ducks?).

For now, it might still be possible to distinguish LLM-generated data from human-generated data. But I’m not confident about how long this equilibrium will last. And as LLM-generated data gets closer and closer to approximate human data, researchers interested in human behavior and cognition will have to ask hard and potentially unanswerable questions about what it means to shift our focus from the thing itself to a model of the thing.

Kempelen also created a speech synthesis machine, which, unlike the “Mechanical Turk”, actually did represent a technological advancement in its field.

Here, one can’t help but imagine a kind of automated panopticon of bots watching bots, applying their own inscrutable classification thresholds and sorting what they see into one pile and another.