Results from poll #5

Another tie—plus some updates.

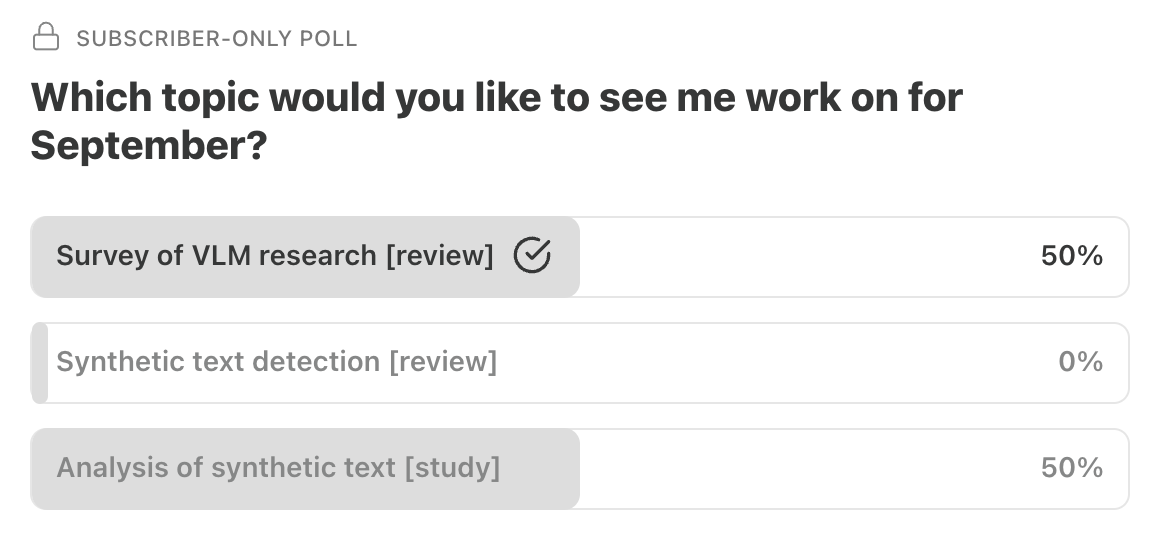

This month’s poll was between three options:

An explainer on vision-language models (VLMs).

A survey of methods for synthetic (i.e., LLM-generated) text detection.

An empirical study assessing properties of embeddings for both synthetic and natural (i.e., human-generated) text.

The results were tied between the VLMs explainer and the empirical study.

As with poll #4, I think the most reasonable course of action here is just to do both, since there’s clearly interest in both. I’m still deciding which one to start with, but over the next few months, you can expect an explainer on VLMs and a write-up reporting the results of my empirical analysis of synthetic (and natural) text.

Additional updates

I like to use these posts as an opportunity for general updates. In this case, I’ve published a few new papers that might be of interest to readers of this newsletter.

The first (“Do Multimodal Large Language Models and Humans Ground Language Similarly?”) is a collaboration with Cameron Jones and Ben Bergen. It’s a follow-up to a conference paper I published with Cameron earlier in the year, and addresses a similar question but in considerably more depth. You’ll likely read more about it in the VLMs explainer!

The second (“Large Language Models and the Wisdom of Small Crowds”) was published in Open Mind, and asks whether LLM-generated judgments about words and their meanings can be used to approximate human judgments. Specifically, I’m interested in whether LLM-generated judgments capture the “wisdom of the crowd”. Put another way: in terms of judgment quality, how many human judgments is a single LLM judgment “worth”? The short answer is that LLM-generated judgments are in general of higher quality than a single human judgment, and the “crowd” they seem to reflect ranges in size from about 3 to 10 (so rather small).

Third, I have another collaboration with Cameron and Ben, this one published in TACL and tackling the question of whether LLMs have a “Theory of Mind” (something I’ve written about before). We brought together several different tasks used to assess Theory of Mind in humans, and we found that the LLMs we tested showed mixed performance across those tasks. (Note that we were interested in “vanilla” LLMs, i.e., those trained without RLHF; I expect that newer models with more complex training protocols would do better.)

Interesting reading

I tend to enjoy it when writers aggregate links to some of what they’ve been reading recently (e.g., Tyler Cowen’s daily links on Marginal Revolution). So just in case anyone is interested, here are some blog posts and papers I’ve especially appreciated in the last week or so:

Cosma Shalizi on “Attention”, “Transformers”, in Neural Network “Large Language Models”. Shalizi is a statistician at CMU and it was fun to read a statistician’s perspective on mechanisms like self-attention and how they relate to older methods in machine learning, like the “kernel trick”.

Excerpts from Darwin’s observations on his children. There’s something really touching about Darwin’s clear dedication to, and interest in, the development of his kids, and the almost clinical level of detail with which he reports it feels like true devotion.

This 1998 paper from Virginia de Sa (one of my colleagues) and Dana Ballard on “Category Learning Through Multimodality Sensing”. They suggest that humans may exploit temporal correlations across input modalities (e.g., vision and audition) to construct categories. I’ve discussed something similar in posts here, but it’s always humbling to see that an idea was already formalized and proposed decades ago.

The Wikipedia page about Marie Curie. I didn’t realize her daughter Irène Joliot-Curie also won a Nobel Prize (shared with her husband, Frédéric Joliot-Curie).

The Wikipedia page on Eumaeus, from The Odyssey. Eumaeus was Odysseus’s swineherd, and he helps Odysseus when Odysseus returns to Ithaca. What intrigued me was noticing that Homer directly addresses Eumaeus several times in the text (e.g., “You answered him, Eumaeus”), which I found pretty surprising. I didn’t really find a great explanation for it, but I liked reading more about Eumaeus nonetheless.

Thanks for reading!