What if we ran ChatGPT "by hand"?

A thought experiment to probe the boundaries of our intuitions.

Note: While drafting this piece, a great paper by Harvey Lederman and Kyle Mahowald came out in Transactions of the Association for Computational Linguistics (TACL) that relates to the question considered here of how exactly to construe LLMs. I’m planning to cover that paper, as well as a few other recent philosophical papers on LLMs and what (if anything) they “mean” (e.g., this one), in a post soon.

In a recent post, I wrote about the metaphors we use to characterize LLMs, our tendency to view them in human terms, and how that might affect our sense of what they “know” or “understand”.

Here, I want to explore this question from a slightly different perspective. I’m working on a thought experiment about LLMs, and I want to present it in this newsletter first. The point of the thought experiment isn’t to definitively demonstrate that LLMs do or don’t know things—rather, the goal is to refine our own sense of what it means to “know” in the first place.

I should also emphasize that this thought experiment is very much a work in progress. It’s more a metaphor than a proper thought experiment at this point, and I’m not sure what (if anything) it convincingly demonstrates. But I still think it’s interesting, and hopefully you do too.

What’s the point of thought experiments?

A “thought experiment” isn’t really an experiment in the typical sense. We don’t collect empirical data or analyze it with statistical tests, and we certainly don’t randomly assign individuals to different experimental conditions—the hallmark of a true experiment. In my view, even the best thought experiment can’t uncover empirical truths about the world. What, then, is the point? What kind of knowledge does a thought experiment give us? Are thought experiments valuable at all?

Personally, my view is that to the extent that thought experiments do have value, it lies in probing (and helping form) our intuition. A good thought experiment often starts with some shared assumption or belief about a concept, then describes a hypothetical scenario in which that assumption is called into question.

For example, John Searle’s “Chinese Room Argument” starts with the assumption that behavior indistinguishable from the behavior we’d expect from a person is itself evidence of humanlike intelligence (i.e., the Turing Test). If you haven’t read the article, I highly recommend it: it’s quite short.

In the article, Searle invites us to imagine a person communicating with someone inside a room through a series of notes, written in Chinese; the person inside the room doesn’t know Chinese, but does have a large “rule book” instructing them how to respond to various strings of symbols that they encounter. To the person outside the room, it appears that the person inside understands Chinese, but according to Searle, that’s not true: they’re just manipulating symbols without knowing what they mean. Similarly, he argues, a computer program that interacts with a human operator through written language doesn’t really understand the language it’s producing in the way that a person would.

Much has been written about the Chinese Room, and Searle himself considers a range of possible counterarguments in his original article. (Not everyone agrees, for instance, with Searle’s intuition that the person in the room doesn’t understand Chinese; some argue instead that the room understands Chinese, even if the person doesn’t.) My point here isn’t that this intuition is right or wrong. Rather, the point is that setting up this scenario encourages us to think a bit more carefully about what we mean by “understanding”.

It’s not lost on me—or on many others—that LLMs are an interesting modern test case of the Chinese Room thought experiment, even though it was conceived before LLMs were as impressive as they are now. Here, I want to explore an alternative perspective on LLMs that holds constant their performance but systematically manipulates some parameters of their behavior, in the hope that this will pump our intuition for what they are and whether they understand.

Setting the stage

What happens when we interact with ChatGPT?

A large language model is, at its core, a bunch of matrices of numbers (weights) that takes some text as input (“I like my coffee with cream and ___”) and produces a prediction about the next word (e.g., “sugar”). The weights themselves are learned by training the model to make these predictions on a very large (trillions of words) corpus of text (see my explainer with Timothy Lee for a more detailed description).

This generative process can also be applied iteratively: once a high-probability token (e.g., “sugar”) is sampled from the model, we can add that token to the context (“I like my coffee with cream and sugar”) and sample yet another token (perhaps a comma: “,”). We can continue this process again and again until it looks like the model has been used to generate an entire sentence or even a paragraph. Maybe something like below, where the bolded text represents everything hypothetically generated by an LLM:

I like my coffee with cream and **sugar, but sometimes I take honey instead.**
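The iterative process described above can be sketched in a few lines of Python. This is a toy illustration, not how ChatGPT is implemented: the `TOY_MODEL` table, its probabilities, and the function names are all made up for the example, and this "model" looks only at the last token, whereas a real LLM computes its probabilities from the whole context using its learned weights.

```python
import random

END = "<END>"

# Hypothetical toy "model": maps the last token of the context to a
# probability distribution over the next token. These numbers are invented;
# a real LLM derives them from billions of learned weights.
TOY_MODEL = {
    "and": {"sugar": 0.8, "honey": 0.2},
    "sugar": {",": 1.0},
    ",": {"but": 1.0},
    "but": {"sometimes": 1.0},
    "sometimes": {"I": 1.0},
    "I": {"take": 1.0},
    "take": {"honey": 1.0},
    "honey": {"instead": 1.0},
    "instead": {".": 1.0},
    ".": {END: 1.0},
}


def next_token_distribution(context):
    """Return P(next token | context); here only the last token matters."""
    return TOY_MODEL.get(context[-1], {END: 1.0})


def generate(context, rng, max_tokens=20):
    """Sample a token, append it to the context, and repeat until <END>."""
    tokens = list(context)
    for _ in range(max_tokens):
        dist = next_token_distribution(tokens)
        choices, probs = zip(*dist.items())
        token = rng.choices(choices, weights=probs, k=1)[0]
        if token == END:
            break
        tokens.append(token)  # the sampled token becomes part of the context
    return tokens


prompt = "I like my coffee with cream and".split()
print(" ".join(generate(prompt, random.Random(0))))
```

Each pass through the loop corresponds to one sampled token. The only thing that changes from one step to the next is the context the model is conditioned on.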

ChatGPT incorporates lots of other bells and whistles, like reinforcement learning from human feedback (RLHF). But fundamentally, the description above is a fair account of what’s going on when ChatGPT “replies” to something we’ve said. Because the language model’s output is conditioned on the context (e.g., a question we asked), this reply often sounds pretty sensible. It’s also worth noting that this process all occurs very quickly, often within seconds. Under the hood, a huge number of calculations are being performed, but that’s all hidden from the user.

What if that weren’t true?

BabelGPT

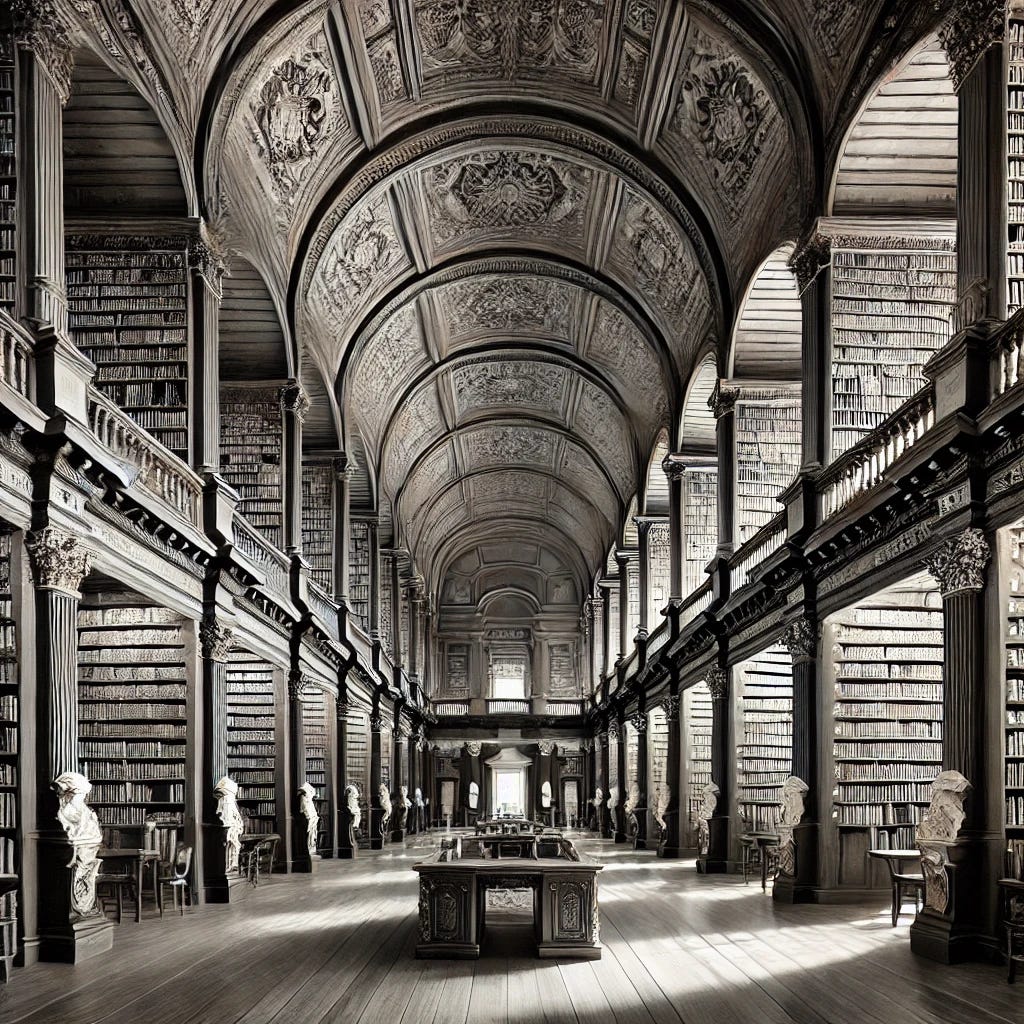

One of my favorite short stories is The Library of Babel by Jorge Luis Borges.1 Just in case you’re not familiar with the story, the key premise is that the narrator’s reality is constituted by a vast library of books. These books allegedly contain every possible combination of characters in every possible order. That includes absolute nonsense, but it also includes, for example:

…the detailed history of the future, the autobiographies of the archangels, the faithful catalog of the Library, thousands and thousands of false catalogs, the proof of the falsity of those false catalogs, a proof of the falsity of the true catalog, the gnostic gospel of Basilides, the commentary upon that gospel, the commentary on the commentary on that gospel, the true story of your death, the translation of every book into every language, the interpolations of every book into all books, the treatise Bede could have written (but did not) on the mythology of the Saxon people, the lost books of Tacitus.

In other words: if you’re looking for a particular book—if you can imagine that book existing—then it surely exists, in some form, within the expanse of the library. But the library’s sheer size2 makes it unlikely you’ll find the book you’re searching for. You might find what appears to be the book, but it’s more likely than not one of many false copies that will merely lead you astray.

As with the Chinese room, I really do recommend reading the actual story—it’s quite short, Borges is an incredible writer, and it’s a truly deep account of the nature of knowledge and of life itself.

It’s also a convenient metaphor for LLMs. In this article, Isaac Wink (a Research Data Librarian) points out that the unpredictability and unreliability of LLMs have a natural counterpart in the search for the “correct book” in the library, and that this is a more appropriate analogy than, say, a calculator or a search engine:

ChatGPT’s predictive responses based on training data certainly prove more accurate than random combinations of letters, but they both lack a firm relationship to truth.

This post isn’t about the Library of Babel. But I think it’s a useful starting point for what I do want to focus on: a series of thought experiments revolving around alternative construals of ChatGPT.

ChatGPT “by hand”

In the Library of Babel, the chance of finding what you’re looking for is practically nil. But let’s suppose we’ve invented a series of operations that allows us to effectively narrow our search:

1. First, we translate our question (the thing we’re looking for) into numbers.
2. Next, we multiply those numbers by a bunch of other pre-defined numbers.
3. We then translate the output back into language.

Steps 1-3 are a single “forward pass” of our system. We might decide to repeat those steps any number of times until the process is complete—perhaps signaled when the process generates something like an <END> token.
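To make the three steps concrete, here is a minimal sketch of a "forward pass" written the way one might do it by hand, one multiplication at a time. Everything in it is an assumption for illustration: the four-word vocabulary, the one-hot vectors, the `WEIGHTS` table, and the function names are invented, and the system only looks at the most recent word rather than the whole query.

```python
VOCAB = ["coffee", "with", "cream", "<END>"]
N = len(VOCAB)

# Step 1 lookup: each word becomes a list of numbers. One-hot vectors are
# used here; a real model uses thousands of learned numbers per word.
EMBED = {w: [1.0 if i == j else 0.0 for j in range(N)]
         for i, w in enumerate(VOCAB)}

# Step 2 "pre-defined numbers" (made up): row i holds the scores for
# whichever word follows VOCAB[i].
WEIGHTS = [
    [0.1, 0.8, 0.1, 0.0],  # after "coffee": mostly "with"
    [0.1, 0.0, 0.7, 0.2],  # after "with": mostly "cream"
    [0.0, 0.1, 0.1, 0.8],  # after "cream": mostly "<END>"
    [0.0, 0.0, 0.0, 1.0],  # after "<END>": stay at "<END>"
]


def forward_pass(word):
    """Run steps 1-3 once; return the next word and the multiplication count."""
    x = EMBED[word]                      # step 1: language -> numbers
    n_mult = 0
    scores = []
    for j in range(N):                   # step 2: multiply and sum by hand
        total = 0.0
        for i in range(N):
            total += x[i] * WEIGHTS[i][j]
            n_mult += 1
        scores.append(total)
    best = max(range(N), key=lambda j: scores[j])
    return VOCAB[best], n_mult           # step 3: numbers -> language


def respond(word, max_passes=10):
    """Repeat forward passes until the system produces <END>."""
    output, total_mult = [], 0
    for _ in range(max_passes):
        word, n_mult = forward_pass(word)
        total_mult += n_mult
        if word == "<END>":
            break
        output.append(word)
    return output, total_mult


words, multiplications = respond("coffee")
print(words, multiplications)
```

Even this four-word toy needs 16 multiplications per pass. The count grows with the size of the weight table, which is what makes the by-hand version of a full-size model so daunting.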

Now, the output of this process isn’t necessarily the book we’re looking for. In fact, these are operations for generating text—not retrieving it. But if we take the hypothetical Library of Babel’s vastness seriously, then the distance between generation and retrieval doesn’t seem so great: after all, the hypothetical library contains books with every possible combination of characters, so “generating” new strings is tantamount to “retrieving” them (as if from some Platonic realm). So depending on your perspective, we’re either constructing or retrieving text that’s somehow relevant to our initial query. (Though crucially, as Isaac Wink points out, that response may not be true or accurate!)

Having established our framing, we can begin to play with its parameters.

In our first configuration, let’s suppose the operations described in steps 1-3 are all defined on pieces of paper. There are tons of these operations, unfortunately—each “word” is represented by thousands of numbers, and then there are upwards of one trillion multiplicands that we’ve got to multiply those representations by—enough to fill billions of hand-written pages.3

It gets worse: in order to execute just a single “forward pass” of our system, we’ve got to perform all these calculations by hand. Even if we assume you’re working around the clock, I’m pretty sure doing this by hand will take you thousands of years.4 And that’s just for a single forward pass—to get a whole response, we’ll need to do hundreds or thousands of those forward passes. So we’re looking at many lifetimes of work here: maybe this isn’t so different from Borges’s story after all!

But my interest here isn’t whether or not this is an efficient or even feasible process. Rather, I’m curious how describing ChatGPT this way changes (if it does) our intuition about its underlying abilities. This scenario has a few key differences from our customary interactions with ChatGPT, despite consisting of the same operations. First, it’s clearly much slower: ChatGPT responds within seconds. Second, we’re calculating everything by hand, rather than letting a system calculate it for us. And third—a prerequisite to the second point—the parameters themselves are completely transparent, rather than being proprietary.

Personally, I have a couple of immediate reactions. On the one hand, this thought experiment is a remarkable demonstration of how efficient modern computing has gotten. Matrix multiplication really can be done very quickly! On the other hand, it also seems to demystify (or perhaps “disenchant”) the process. Even though we’ve likely heard LLMs described as “matrix multipliers”, there’s something different about actually imagining yourself multiplying the matrices, one by one.

It’s even reminiscent of the Chinese Room argument: in both cases, a person dutifully follows a bunch of complicated operations to derive a sensible-sounding response to some input. The difference, to my mind, is that I’m not really interested in making an argument about whether the person “understands” what’s going on. I’m explicitly tackling the question of whether the process itself is constitutive of understanding.

I should note that there’s still a sleight of hand in how I set this up: after all, where did the weights themselves come from? I’ll return to this later—but before that, I’ll discuss another possible configuration.

An army of librarians

Above, we had to do everything ourselves. Now, let’s suppose there are a bunch of people specifically employed to speed the process up (let’s call them Librarians). Each Librarian works at the same pace we did, but they can also work in parallel, at least to some extent, and we have enough of them working 24-hour days that a single forward pass can be done in about one hour.5 Finally, executing the operations requires a special kind of arcane, esoteric knowledge that only the Librarians possess.

From our perspective, then: we enter the library with a question, which we pose to the head Librarian in natural language; this kicks off a process whereby a veritable army of Librarians begins working on our query; and then in about an hour, the original Librarian returns with our response.

We know, on some level, what’s going on behind the scenes: each of those Librarians is multiplying a bunch of numbers together on paper. But now it’s much faster, and we’re not doing it ourselves—in part because the knowledge of how to do so is a well-kept secret. This scenario is maybe a little closer to how it feels to use ChatGPT: we might have a rough sketch of what the system is doing when it produces a response, but we couldn’t reproduce that process ourselves.

But where did the weights come from?

ChatGPT “replies” to our questions by performing a series of mathematical operations. In the scenarios above, I analogized this process to searching a vast library (like the Library of Babel), and asked whether our intuition about ChatGPT’s ability to “understand” language changed depending on whether:

1. We performed those operations ourselves.
2. The operations were performed by an army of librarians.
3. The operations were performed by a computer.

In each case, the operations and output are the same—all that’s changed is how quickly and transparently they are implemented, and who (or what) implements them. I’m still undecided with respect to how these different parameterizations affect my intuition, or whether they should. I’d love to hear what you think!

But as I mentioned earlier, there’s a sleight of hand going on here: I haven’t explained where the operations themselves came from. I wrote:

But let’s suppose we’ve invented a series of operations that allows us to effectively narrow our search…

Thought experiments always involve some presuppositions. Yet I wonder whether this particular presupposition might make a difference to our intuitions about the resulting system. How exactly were these operations “invented”? Were they handed down from on high? Did a well-funded team of graduate students code them all by hand, as the philosopher Ned Block describes in his famous “Blockhead” thought experiment? Were they learned through experience? Or did we just roll the dice and happen to produce a winning roll on our first try?

I suspect some people are more likely to attribute “understanding” to a system whose operations were acquired through some kind of learning and experience. That is, it’s not just the operations themselves (or the behavior they produce): there’s something important about how the system arrived at those operations. Some “modes of arrival” seem to be more plausible than others. For instance, maybe it matters to us whether the way an LLM learns about words is somehow grounded in the same (or similar) causal-historical relations that underlie actual human usages of those words.6

To be clear, I don’t know whether this is the right intuition. It’s a more subtle version of what I’ve previously described as the “axiomatic rejection” view, which might also be called an “anti-behaviorist” or “anti-functionalist” view. According to this view, it’s not just what a system does that matters: it’s also how it does it, and how it came to do it that way. But I do think a non-trivial number of people hold some version of this view, or would at least defend its viability, so it’s important to acknowledge as a factor that might be affecting our intuition here.

On the difficulty of building intuition about LLMs and what they do

The goal of thought experiments is to “pump our intuition” about a concept. Often the philosopher’s goal is to convince us that a certain intuition is right or wrong—but in this case, I was primarily just trying to explore the territory, and I’m not sure where we should land. That might mean my thought experiment isn’t a very good one, but I hope it’s led readers down some interesting rabbit holes. I continue to think there’s something potentially useful about considering the parameters I laid out: how fast the system responds; how much we know about how the system works; and whether we’re implementing the operations ourselves.

The reason this matters is that there’s still widespread disagreement about the underlying capacities (both potential and actualized) of LLMs, and I think that disagreement is rooted in part in different intuitions about the kind of thing LLMs are. My hope is that a combination of both empirical and theoretical work—like thought experiments—can take us closer to developing intuitions that are useful and (ideally) more accurate than the ones we have now.


1. I’ve written about Borges before, in my article on learning and forgetting.

2. Along with the fact that in Borges’s story, the book might have been destroyed by “Purifiers” who travel through the library burning books they deem to be nonsense.

3. Spelling it out: if we assume the system (LLM) in question has about 1 trillion weights, and we assume each piece of paper can represent a few hundred weights (let’s say 400), that means we’ll need at least a few billion pieces of paper. We might go further and say each layer of the LLM should get its own binder, and within that binder there’s a section for all the feed-forward network weights and each of the attention heads’ weights.

4. Some back-of-the-napkin math, aided (ironically) by ChatGPT: if we assume you can perform about 6 of these operations per minute, then you can perform a little over 8K per day (6 × 60 × 24 = 8,640). At this rate, 1 trillion operations will take you about 115M days to complete, or a little over 300K years. Of course, I’m making some big assumptions here, e.g., that you’re multiplying an input word vector by each weight independently instead of doing a more efficient matrix multiplication. But since we’re doing it all by hand, that assumption doesn’t seem unreasonable to me (i.e., an individual person still needs to calculate all the row-wise multiplications and summations independently). Further, these estimates were obtained assuming a single forward pass with a single input word vector. In reality, the number of operations will increase with the number of inputs (quadratically, in the case of self-attention). Nonetheless, if anyone can spot an obvious error here that radically changes the order of magnitude estimation, please let me know! (Though I’m not sure the intuition depends too much on whether it’s thousands or hundreds of thousands of years.)

5. The actual number of Librarians you’d need depends on some of the other assumptions I’ve already made, as well as exactly how easily everything can be parallelized. But I’m going to guess that we’d need at least millions, if not hundreds of millions or even a couple billion, in order to produce a response within the hour.

6. This is the argument made by Linzen and Mandelkern in their recent paper on whether LLMs can “refer”, which I’ll be discussing at greater length in an upcoming post.
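The back-of-the-napkin estimates in the footnotes above (pages of paper, years of solo work, number of Librarians) can be reproduced with a short script. It is only a sketch under the same rough assumptions stated there: about 1 trillion weights, one hand calculation per weight, 400 weights per page, and 6 operations per minute around the clock. The Librarian count adds one further assumption that the footnote explicitly leaves open, namely that the work parallelizes perfectly. The variable names are just for illustration.

```python
# All inputs are the rough assumptions from the footnotes, not measurements.
N_WEIGHTS = 10**12        # about 1 trillion weights, one operation per weight
WEIGHTS_PER_PAGE = 400    # weights written on each sheet of paper
OPS_PER_MINUTE = 6        # hand calculations per person per minute

# Paper needed just to write the weights down.
pages = N_WEIGHTS // WEIGHTS_PER_PAGE

# One person working around the clock on a single forward pass.
ops_per_day = OPS_PER_MINUTE * 60 * 24
days_alone = N_WEIGHTS / ops_per_day
years_alone = days_alone / 365

# Added assumption: the work splits perfectly across Librarians, so we only
# need enough of them to cover every operation within one hour.
ops_per_hour = OPS_PER_MINUTE * 60
librarians_for_one_hour = -(-N_WEIGHTS // ops_per_hour)  # ceiling division

print(f"pages of weights:        {pages:,}")
print(f"operations per day:      {ops_per_day:,}")
print(f"days for one pass alone: {days_alone:,.0f}")
print(f"years for one pass:      {years_alone:,.0f}")
print(f"Librarians for one hour: {librarians_for_one_hour:,}")
```

Under these assumptions the script gives roughly 2.5 billion pages, a little over 300 thousand years for one person, and on the order of a few billion Librarians for a one-hour forward pass. Changing any input (for example, a faster calculator or imperfect parallelism) shifts the totals proportionally, but not the basic picture.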
