Language models and language change: recent evidence

A review of emerging empirical data.

Back in July of 2022, I wrote a post speculating about whether the introduction of large language models (LLMs) into communications technology could end up shaping language itself.

The reasoning was straightforward: the more we allow our linguistic choices to be determined (or at least influenced) by an LLM, the more we might start to internalize those choices, such that they become the “default” words or grammatical constructions we turn to in a given context. This hypothetical scenario is importantly distinct from a scenario in which a higher proportion of the linguistic content we encounter is LLM-generated. I’m interested in that as well (and its relationship to ideas like Dead Internet theory), but I’m particularly drawn to the question of whether our internal sense of language will change. At that point, we can refine the question further: will LLMs homogenize our language or fracture it into billions of tiny idiolects?

Since I wrote that post, we’ve seen more than a few technological shifts: ChatGPT was released in late 2022, followed by other chat-based models like Claude; we’ve also seen the rise of “LLM agents”, practices like “vibe coding” and warnings about students cheating their way through college with ChatGPT; and finally, I can report that at least anecdotally, I encounter a great deal of content that has the “flavor” of being LLM-generated.

In the meantime, I’ve maintained an interest in that original question. I’m planning to write a longer academic piece about this soon, so I’ve tried to stay abreast of the literature on LLM-generated text, and I’m still working on connecting it to longstanding theories of technology-induced language change. In this post, I wanted to provide a brief review of some of that relevant empirical evidence, as I imagine it’s a topic of interest to many readers of this newsletter.

Refining the research question

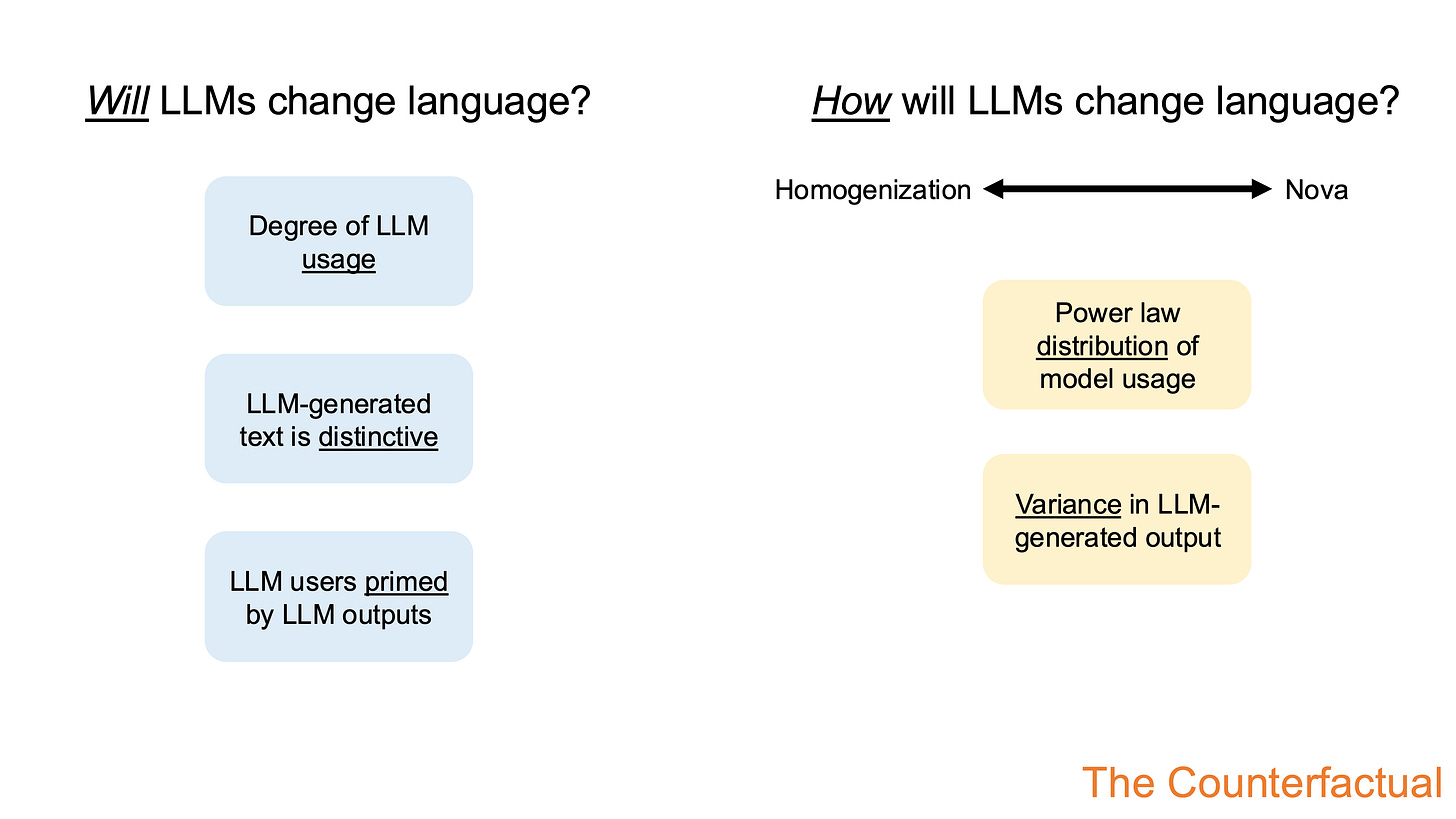

In order to figure out what evidence is relevant, it’s important to be clear about our research question(s). I’m interested in two broad questions here:

(1) Will language models change language in some way?

(2) How might language models change language?

Of course, no single study is capable of fully addressing either question.

For (1), the best we can hope for is to enumerate various preconditions of influence and ask which of them are satisfied. Briefly, we might expect an affirmative answer here to depend on at least three factors: first, the degree to which LLM usage permeates a particular sociolinguistic domain or multiple domains; second, the degree to which LLM-generated text is measurably distinct from human-generated text; and third, the extent to which LLM users are “primed” by the linguistic outputs of that LLM. That is, language change (at least in a particular domain of communication) depends on lots of people using LLMs in the first place, on LLMs producing content that’s somehow different from what someone might have produced in a counterfactual scenario without LLMs, and on users being primed by recent linguistic encounters (particularly those with LLMs).

For (2), we can start by formulating different hypotheses. I suggested two competing hypotheses in a previous post: the homogenization hypothesis proposes that widespread LLM usage will ossify certain linguistic practices in place, collapsing variance and preventing further change; the nova hypothesis proposes the opposite—namely, that widespread LLM usage will lead to a kind of “Cambrian explosion” of micro-languages, each finely tuned to the styles and practices of a given user (or a given LLM).1 Here we might look to a few sources of evidence to adjudicate between these hypotheses: first, whether most people use the same (non-customized) LLM or whether there are a multiplicity of models; and second, the extent of variance in LLM-generated content (particularly relative to analogous measures of human writing).

None of these pieces of evidence are easy to come by, but they’re critical for informing the bigger-picture questions about whether and how LLMs might change language. Measuring them also requires making certain assumptions about the relevant constructs, e.g., about how to assess “variance” in writing and what counts as an appropriate sample of human-generated and LLM-generated text.

In this post, I’m going to narrow my focus further to a subset of these pieces of evidence: first, on the alleged distinctiveness of LLM-generated text; and second, on the relative variance in LLM-generated output. There is actually good evidence for priming in human communication, but I think that’s a topic for another post, as are the factors relating to actual usage of LLMs.

As I discuss the evidence, there’s a big caveat I need to point out up front: any conclusions we draw from empirical results depend on the representativeness of both the human-written text and LLM-generated text in question. I’m increasingly skeptical of the idea that either of these is a helpful unitary construct: each human contains multitudes, and there are also lots of different humans. Similarly, there are many “LLMs” on the market, and each LLM can be prompted in numerous ways that might elicit different linguistic behaviors. I’m still not sure how to square these issues with the broader goal of, say, identifying signatures of LLM-generated text; it’s possible the best we can do is keep them in mind and limit our conclusions accordingly. I’ll do my best to be clear about what sample is being compared in each study and how constructs like variance are being assessed.

With that in mind, let’s turn to the evidence.

LLM-generated output is (somewhat) distinctive

The rise in LLM usage has, for a number of reasons, led to considerable interest in developing methods to detect LLM-generated text. As I’ve written before, I think this is a hard problem—there’s no reason in principle that LLM-generated text has to be distinguishable from human-written text. But even though I’m concerned about the actual application of these methods, this work has been helpful from the perspective of basic research: it turns out there are various signatures of LLM-generated text, some more interpretable than others.

I described some of these potential signatures in a recent post. Systems like DetectGPT rely on a notion called curvature, which the authors motivate as follows:

This paper poses a simple hypothesis: minor rewrites of model-generated text tend to have lower log probability under the model than the original sample, while minor rewrites of human-written text may have higher or lower log probability than the original sample.

That is, because of the way applications sample tokens from LLMs, LLM-generated text will generally reflect something like a local maximum in probability-space: the observed sequence is more probable than other counterfactual sequences. The claim is that this is more true—at least in the samples used—for LLM-generated text than human-written text. Focusing on fake news articles generated by GPT-NeoX, the authors find that their method distinguished these articles from the original human-written articles with relatively high success.2
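To make the idea concrete, here is a minimal Python sketch of a perturbation-based score. It is not the DetectGPT implementation: the toy unigram `log_prob` stands in for a real language model, and the hand-written `rewrites` stand in for automatically generated rewrites. Both are assumptions made purely for illustration.

```python
import math
from statistics import mean

# Toy unigram "model" (an assumption for illustration): word -> probability.
TOY_PROBS = {"the": 0.2, "cat": 0.1, "sat": 0.1, "on": 0.15, "mat": 0.1,
             "feline": 0.01, "perched": 0.01, "rug": 0.02}
UNKNOWN_PROB = 0.001  # probability assigned to any word not listed above


def log_prob(text):
    """Log probability of a text under the toy unigram model."""
    return sum(math.log(TOY_PROBS.get(w, UNKNOWN_PROB))
               for w in text.lower().split())


def perturbation_discrepancy(text, rewrites, score=log_prob):
    """Original log probability minus the mean log probability of its rewrites.

    A large positive value means the original sits near a local maximum:
    its rewrites tend to be less probable than the original.
    """
    return score(text) - mean([score(r) for r in rewrites])


original = "the cat sat on the mat"
rewrites = ["the feline sat on the mat",
            "the cat perched on the mat",
            "the cat sat on the rug"]
print(perturbation_discrepancy(original, rewrites))
```

In this toy case every rewrite swaps a common word for a rarer one, so the score comes out positive. The hypothesis quoted above is that model-generated text behaves this way more consistently than human-written text does.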

GPT-NeoX isn’t exactly state-of-the-art at this point. A more instructive example might come from recent work by some of my colleagues applying the curvature method to text generated by various LLMs in the Turing Test. Here, accuracy was about 69%, and all of the LLMs compared (including GPT-3.5 and GPT-4, both of which were prompted in multiple ways) had higher curvature on average than humans in the sample. Notably, this is better performance than the human judges in the original paper, as well as a separate pool of humans judging the conversations in an “offline” task.

Most importantly for our purposes, these results are consistent with the idea that LLM-generated text is “distinctive”: there exists at least one measure that distinguishes LLM-generated text from human-written text—in at least two different communicative settings (news articles and chat messages).

It’s also consistent with the original empirical analyses I presented in that same post. I found that words in LLM-generated passages were more predictable (as measured by independent open-source language models) than words in human-written passages. Words in LLM-generated passages also exhibited less variance in their predictability.

That said, in the Turing Test setting, average predictability was less effective at identifying LLM-generated messages (about 62% accuracy); and perhaps more crucially, messages generated by GPT-4 with the best-performing prompt were statistically indistinguishable from human-generated messages in terms of average predictability. That suggests that curvature might be a better indicator of synthetic text than outright predictability.
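For readers who want to see what “average predictability” and “variance in predictability” amount to, here is a small Python sketch. The per-token probabilities are hypothetical numbers of my own choosing; in a real analysis they would come from a language model scoring each word in context.

```python
import math
from statistics import mean, pvariance


def surprisals(token_probs):
    """Convert per-token probabilities into surprisal values, in bits."""
    return [-math.log2(p) for p in token_probs]


def predictability_summary(token_probs):
    """Return (mean surprisal, variance of surprisal) for one passage."""
    s = surprisals(token_probs)
    return mean(s), pvariance(s)


# Hypothetical per-token probabilities, chosen only for illustration.
passage_a = [0.5, 0.5, 0.25, 0.5]            # evenly predictable
passage_b = [0.5, 0.0625, 0.25, 0.015625]    # less predictable, more uneven

print(predictability_summary(passage_a))
print(predictability_summary(passage_b))
```

Lower mean surprisal means more predictable words; lower variance means the predictability is more uniform across the passage.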

Another interesting piece of evidence comes from searching for specific linguistic features: beyond the average predictability of words, do LLMs choose certain kinds of words or certain kinds of grammatical constructions? One 2024 paper measured the different “syntactic templates” used by multiple LLMs, including closed models like GPT-4o and open models like OLMo. Text was generated from each model using multiple approaches, from entirely open-ended elicitation (e.g., prompting the model with a start token and sampling subsequent tokens) to more targeted tasks (e.g., summarization); the authors used human-written summaries as a baseline.

A “syntactic template” can be thought of as a higher-order abstraction describing some sentence. While two sentences might contain totally different words, they might correspond to the same parts of speech in the same order—and thus make use of the same “template”. That would be true, for instance, of the following two phrases:

a romantic comedy about a corporate executive

a humorous insight into the perceived class

According to a standard syntactic parser, both phrases use the “DT JJ NN IN DT JJ NN” template, which stands for (roughly) “determiner + adjective + noun + preposition + determiner + adjective + noun”. The authors used a parser to identify different templates in LLM-generated (and human-written) text; a template was defined as a sequence of n part-of-speech tags that occurred some minimum number of times in a corpus.3 Then, they calculated the template rate for each model/task (the proportion of documents with one of those top templates), as well as something called the compression ratio: a measure of how much the set of part-of-speech sequences in a given corpus could be compressed. (The intuition here was that more compressible corpora entailed greater redundancy between sequences.)
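Here is a rough Python sketch of these two measures. It is not the authors’ code: the documents are hypothetical and already tagged (a real pipeline would run a tagger or parser first), and defining the compression ratio as raw size divided by zlib-compressed size is my assumption about one reasonable way to operationalize it.

```python
import zlib
from collections import Counter

# Hypothetical pre-tagged documents: each is a list of part-of-speech tags.
docs = [
    "DT JJ NN IN DT JJ NN VBZ RB".split(),
    "PRP VBZ DT JJ NN IN DT JJ NN".split(),
    "NNS VBP RB JJ CC JJ".split(),
]


def pos_ngrams(tags, n):
    """All contiguous tag sequences of length n in one document."""
    return [tuple(tags[i:i + n]) for i in range(len(tags) - n + 1)]


def find_templates(docs, n, min_count):
    """Tag n-grams that occur at least min_count times across the corpus."""
    counts = Counter(g for tags in docs for g in pos_ngrams(tags, n))
    return {g for g, c in counts.items() if c >= min_count}


def template_rate(docs, templates, n):
    """Proportion of documents containing at least one template."""
    hits = sum(any(g in templates for g in pos_ngrams(tags, n)) for tags in docs)
    return hits / len(docs)


def compression_ratio(docs):
    """Raw size over compressed size of the tag sequences (higher = more redundant)."""
    raw = "\n".join(" ".join(tags) for tags in docs).encode("utf-8")
    return len(raw) / len(zlib.compress(raw))


templates = find_templates(docs, n=4, min_count=2)
print(sorted(templates))
print(template_rate(docs, templates, n=4))
```

In this toy corpus the first two documents share several four-tag sequences and the third shares none, so two of the three documents count as containing a template.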

The authors report a number of results, but two are most relevant to the discussion here. First, models generating open-ended text produced a lower rate of templates overall than models generating summaries: that is, the task mattered. Second, focusing specifically on the summarization task, models produced a much higher rate of templates than humans when summarizing movies—independent of the value of n used to define templates; this gap was reduced when analyzing biomedical summaries (the Cochrane dataset), possibly because the human-written summaries involved more templatic language in general.4

Another recent paper asked whether certain words have increased in frequency since the release of ChatGPT, above and beyond what you’d expect from extrapolating their pre-ChatGPT trends. Focusing on scientific abstracts in the PubMed database, they calculated a measure called “excess words”, which reflects the difference between the actual number of times a given word appeared in a given year and the expected number of times that word “should” have appeared that year; the latter measure was derived by extrapolating a linear trend from previous years. This allowed the authors to determine which words occurred more times post-ChatGPT than we would’ve expected in a world without LLMs.5 Some of the words they found are often cited as ChatGPT “tells”, such as delves or underscores; other examples included potential and crucial. Beyond suggesting that LLM-generated text might be distinctive, these results are also consistent with the idea that the introduction of a new technology (ChatGPT) correlates with a change in the composition of words we encounter. Moreover, other recent work suggests that this fact isn’t limited to scientific abstracts: a study of podcast transcripts and YouTube academic talks points at similar trends in scientific communication.
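The “excess” logic is simple enough to sketch in a few lines of Python. This follows my description above rather than the paper’s code, and the yearly counts are made up for illustration.

```python
def linear_fit(xs, ys):
    """Ordinary least-squares slope and intercept for y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def excess_count(history, target_year, observed):
    """Observed count minus the count extrapolated from earlier years.

    history maps year -> count for the years before the event of interest.
    """
    years = sorted(history)
    slope, intercept = linear_fit(years, [history[y] for y in years])
    expected = slope * target_year + intercept
    return observed - expected


# Made-up counts for a single word, purely for illustration.
history = {2019: 100, 2020: 110, 2021: 120, 2022: 130}
print(excess_count(history, target_year=2024, observed=400))
```

Here the pre-event trend adds ten occurrences per year, so the extrapolated count for 2024 is 150 and anything above that counts as excess.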

A final piece of evidence regarding the distinctiveness of LLM-generated text comes from this very recent (May 2025) paper exploring whether people can accurately distinguish human-authored vs. synthetic text. The authors recruited 9 annotators and asked them to read 30 human-written and 30 LLM-generated articles, indicating for each text whether they believed it was written by a human or LLM.6 Of these 9 annotators, five frequently used LLMs for writing tasks, while the other four had only limited experience. The authors found that the four with limited experience achieved an average accuracy of 56.7%—roughly random chance—though they nonetheless reported high confidence in their decisions. The five that frequently used LLMs achieved an average accuracy of 92.7%; moreover, the majority vote of these five achieved almost perfect performance (99.9%), which was considerably better than any of the automatic detection methods tested. Expert annotators also retained high accuracy in the face of various adversarial methods, including paraphrasing the LLM-generated text and attempting to “humanize” it (which involved explicitly instructing an LLM to avoid certain “tells”).

This is actually consistent with work from the Turing Test paper I mentioned earlier: although humans performed quite poorly on average, humans with more knowledge about LLMs and more experience playing the game performed better. Both results point to the conclusion that there’s something about interacting with LLMs over time that hones one’s detection ability. This suggests that LLM-generated text really might have its own signature, but it’s hard to identify it without extensive experience.

So what is it that those expert annotators are doing? Fortunately, the authors also asked each annotator to explain how they made their decision. These explanations are helpfully summarized in Table 3 of the paper. Here, two things stood out to me: first, expert annotators (unlike non-experts) seemed aware of which words in particular were overused by LLMs (like “testament” or “crucial”), and used those words as clues; and second, they pointed to consistent grammatical clues, i.e., predictable structural patterns (e.g., “not only … but also …”).7

We started this section by asking whether LLM-generated text is truly distinctive. For me, this evidence points to a tentative “yes”: at least right now, text generated by an LLM using a range of elicitation methods can be distinguished from human-authored text at a reasonably reliable rate; distinguishing factors include the relative predictability of LLM-generated passages, as well as the use of specific words (like “delves”) and syntactic templates. It is entirely possible that future LLMs or different ways of prompting current LLMs will produce text that is impossible to distinguish from human-authored text. It is also worth noting (again) that the very construct of “humanlike text” is a fraught one: there is, after all, no average human. But in the context of speculating about the practical and societal consequences of LLM usage, I think the evidence suggests at least one of the preconditions I mentioned earlier could be met.

Homogenization: a thought experiment

There are at least two ways that widespread usage of LLMs could change language: first, people might converge on a more similar set of expressions (i.e., the homogenization hypothesis); alternatively, each individual person might “drift” further and further from other people in terms of their linguistic idiosyncrasies, relying increasingly on LLMs to “translate” what they mean to interlocutors (i.e., the nova hypothesis8).

One way to get traction on this question is to ask whether LLM-generated text exhibits more or less variance (or diversity) than human-authored text. There are all sorts of ways one could operationalize “variance”, but I imagine most readers will have some sort of intuition for the underlying construct: different humans write in different ways, and an (arguably) important part of becoming a good writer is “finding your voice”; LLM-generated text, by contrast, is sometimes described as “slop” or “filler”, implying a kind of contentless homogeneity. But is it actually the case that LLM-generated text as a general category necessarily exhibits less variance than human-authored text?

Before I turn to the evidence, I’ll start with a thought experiment meant to complicate our intuitions.

Suppose that we compared a text corpus authored by millions of individuals to a text corpus (of the same size) authored by a single person: our intuition is that the million-person corpus probably contains more variance—both in the breadth of topics and the diversity of linguistic styles. At first glance, this might seem analogous to the case of comparing a bunch of documents written by different humans to a bunch of documents written by the same LLM. Clearly, we might say, the LLM-generated text will exhibit less variance.

The first complication concerns the scope of this inference. The intuition above rests on the assumption that the million-person corpus was produced by a greater variety of generative processes, whereas the LLM-generated corpus was produced by a single process. That means the intuition doesn’t necessarily extend to comparing LLM-generated text to documents written by a single person: the inference only holds when comparing text produced by different people to text produced by the same LLM.

Second, and more importantly, it’s not actually clear that there’s a direct correspondence between a single-person corpus and an LLM-generated corpus. An LLM has ingested many more words than a single person; although these words are a biased sample of the remarkable variety of human languages, an LLM trained on billions or trillions of words has probably still encountered a greater variety of writing styles and perhaps languages than a random person. Further, there are many ways one could prompt an LLM, and also many ways one could sample tokens from the probability distribution (e.g., adjusting the temperature, using beam search, etc.). Once we take these additional factors into account, our intuition might be less clear.

Third, it’s conceivable that even a million-person corpus would be relatively constrained in terms of its topics and linguistic styles. Users of a specific forum, for instance (like Reddit), might converge on similar world models, similar interests, and similar ways of using language. My intuition is still that there’d be more variability between two posts sampled from different users than between two posts generated by the same LLM, but it also feels like there could be many exceptions to this.

All this is to say: our intuitions about LLM-generated text and homogeneity might not necessarily be valid, and we should do our best to ground our beliefs in the evidence—while still acknowledging the real limitations in empirically measuring these sorts of things.

Homogenization: the evidence

Based on my read of the emerging research literature, most (but not all) of the research seems to support the homogenization hypothesis (as opposed to the nova hypothesis).

One relevant piece of evidence comes from a 2024 paper comparing writing produced with and without assistance from LLMs. Specifically, the authors looked at GPT-3 and InstructGPT: relatively “early” models, and less performant than GPT-4 or GPT-5, but state-of-the-art as of 2023 (when this work was done). They recruited participants online and asked them to write argumentative essays on different topics; subjects wrote the essays on the CoAuthor platform, which allows users to obtain text continuations contingent on text they’ve already written (which they can accept or reject). Subjects were assigned either to a control group (where they were not given LLM assistance) or one of two treatment groups, which allowed them to receive suggestions from either GPT-3 (a “base” model) or InstructGPT (a model fine-tuned on human feedback).

The authors then calculated a “homogenization score” for each condition, which was based on the textual similarity between all essays produced for a given condition/topic by different subjects.9 On average, essays written with assistance from InstructGPT showed significantly more homogenization than essays written without any assistance or, interestingly, essays written with assistance from GPT-3 (the “base” model). Further, essays written with assistance from InstructGPT also involved more repetition of the same sequences: that is, two randomly sampled essays from the InstructGPT condition were more likely to contain the same 5-word sequence than were two randomly sampled essays written without LLM assistance.
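As a rough illustration of what a score like this involves, here is a Python sketch that averages pairwise similarity across a set of essays. It is a simplification rather than the authors’ implementation: I use a longest-common-subsequence F1 in the spirit of Rouge-L, whitespace tokenization, and function names of my own invention.

```python
from itertools import combinations


def lcs_length(a, b):
    """Length of the longest common subsequence of two token lists."""
    prev = [0] * (len(b) + 1)
    for x in a:
        curr = [0]
        for j, y in enumerate(b, start=1):
            curr.append(prev[j - 1] + 1 if x == y else max(prev[j], curr[j - 1]))
        prev = curr
    return prev[-1]


def lcs_f1(a, b):
    """F1-style similarity based on the longest common subsequence."""
    lcs = lcs_length(a, b)
    if lcs == 0:
        return 0.0
    precision, recall = lcs / len(a), lcs / len(b)
    return 2 * precision * recall / (precision + recall)


def homogenization_score(essays):
    """Mean pairwise similarity across all essays in one condition."""
    tokenized = [e.lower().split() for e in essays]
    pairs = list(combinations(tokenized, 2))
    return sum(lcs_f1(a, b) for a, b in pairs) / len(pairs)


def share_ngram(a, b, n=5):
    """True if two texts contain at least one identical n-word sequence."""
    def grams(text):
        words = text.lower().split()
        return {tuple(words[i:i + n]) for i in range(len(words) - n + 1)}
    return bool(grams(a) & grams(b))


# Made-up one-line "essays", purely for illustration.
similar = ["remote work is good for productivity and morale",
           "remote work is good for productivity and focus",
           "remote work is good for morale and productivity"]
varied = ["remote work is good for productivity and morale",
          "offices foster chance encounters between colleagues",
          "my commute was the only time I read novels"]
print(homogenization_score(similar), homogenization_score(varied))
print(share_ngram(similar[0], similar[1]))
```

A higher score means the essays in a condition resemble one another more; the 5-word check mirrors the repeated-sequence comparison described above.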

There are some clear limitations to this work (like the fact that the models used are now outdated, and the fact that the CoAuthor interface seems pretty different from contemporary LLM usage), but one interesting conclusion is that there’s something about fine-tuning the model specifically that leads towards homogenization.

In fact, there’s a growing body of evidence that techniques like reinforcement learning from human feedback (RLHF) and instruction-tuning do actually reduce variance in LLM-generated text. These are methods used during “post-training” (i.e., after an LLM has already been “pre-trained” on a large corpus of text) to make the LLM better at following directions and less likely to produce undesirable behaviors; for example, RLHF might “teach” an LLM to avoid producing sentences that a human would find hateful or otherwise offensive.

Conceptually, I can see how these techniques as currently applied would reduce diversity in LLM-generated text. Suppose we imagine a “base” model in terms of an attractor landscape: there are different valleys or basins that the model might “visit” depending on the input, and the overall shape of this landscape is determined by its pre-training. Now suppose we apply RLHF to this model, which encourages it to produce certain desirable behaviors and avoid undesirable behaviors: intuitively, this might have the effect of making some regions of the landscape much more “attractive” (the model will visit them more often) and others much less “attractive” (the model will visit them less often), or even making some disappear entirely (what Janus calls “mode collapse”), exaggerating existing structural variability in the landscape as a function of the RLHF process. This seems to match up with the evidence: instruction-tuned models show less entropy in their outputs (i.e., a sharper, less uniform probability distribution over tokens).

This, in turn, could explain all sorts of other empirical observations about text generated by RLHF-tuned models. Compared to base models, RLHF-tuned models use a smaller variety of proper names, nationalities, ages, and personality types. Similarly, other work on relatively state-of-the-art RLHF-tuned models (like GPT-4) has found that the stories they write seem to “recycle” a larger number of plot devices than stories sampled from human authors (as far as I know, this study did not assess stories produced by “base” models).

Now, it’s entirely possible some of this apparent homogenization is, as they say, a “skill issue”: presumably, more effective prompts could elicit a greater range of styles, plot contrivances, and proper names from an RLHF-tuned LLM. But the fact remains that the underlying probability distribution really does seem less entropic in RLHF-tuned LLMs; to me, that suggests that while better prompts might elicit more diversity, text produced by current RLHF-tuned models will still be relatively more homogenous than text produced by their “base” equivalents.10

This isn’t necessarily a bad thing! Presumably, we’re not simply looking for the most entropic distribution—if we wanted that, we could just sample words at random from the vocabulary. Indeed, raising the “temperature” in the sampling process does make text a bit more novel, but it also makes it less coherent. Ideally, we’d avoid homogenization while also ensuring that the text makes sense.
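The relationship between temperature and entropy is easy to see in a toy example. The Python sketch below uses hypothetical scores for four candidate next tokens (not the output of any real model) and shows how rescaling them by a temperature changes the entropy of the resulting distribution.

```python
import math


def softmax(logits, temperature=1.0):
    """Turn raw scores into probabilities; temperature rescales the scores first."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def entropy_bits(probs):
    """Shannon entropy of a distribution, in bits."""
    return -sum(p * math.log2(p) for p in probs if p > 0)


# Hypothetical scores for four candidate next tokens.
logits = [3.0, 1.5, 0.5, 0.0]
for t in (0.5, 1.0, 2.0):
    print(t, round(entropy_bits(softmax(logits, t)), 3))
```

Lower temperatures concentrate probability on the top-scoring token (lower entropy), while higher temperatures flatten the distribution toward uniform, which for four tokens tops out at 2 bits.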

Moving forward, a major open question in this space is just how constraining this “attractor landscape” is, and just how successful different prompts or contexts can be in eliciting diverse behavior. For instance, a recent preprint suggests that providing sufficient context erodes some of the previously observed differences in textual variance between LLM-generated and human-authored text.

So will LLMs change language? And if so, how?

It’s impossible to answer either of these questions with absolute certainty.

To the first, however, we can already point to some positive examples, such as changes in the vocabulary composition of scientific abstracts and podcast transcripts. Further, LLM-generated text does appear to be at least somewhat discriminable, both by automated metrics and by humans with extensive experience interacting with LLMs. I didn’t discuss evidence for the other preconditions I proposed: the degree of LLM usage and whether human language use is reliably primed by linguistic inputs. But ChatGPT usage does seem relatively widespread (and growing), which suggests that the first precondition may well be met, at least in certain domains; and as I noted earlier, there’s good evidence that the words and grammatical constructions humans deploy are influenced by the words and grammatical constructions they encounter.11

The second question, I think, is harder to address, and the answer is even more contingent: how many customized LLMs do we expect to be in circulation in 1-2 years, and just how variable can the outputs of even a single LLM be? Right now, my sense is that the current equilibrium points towards homogenization: most people seem to use one of a handful of models, and (with some exceptions) mostly don’t seem to be invested in explicitly trying to elicit diverse linguistic behaviors from those models. And since there is good evidence that these RLHF-tuned models exhibit less variance, my takeaway is that the “default” scenario is more likely to be one of homogenization than what I’ve called the “nova” hypothesis. Put another way: if this were a betting market, I’d bet that we’d see more evidence for homogenization or convergence than fracturing over the next few years.12

Yet if there’s anything I’ve learned in the last 4-5 years, it’s that technological changes can take you by surprise—so I wouldn’t bet too much on this outcome.

1. In retrospect, one hypothesis I might’ve overlooked is what I’ll call the polarization hypothesis: the idea that people will begin using language that’s unlike LLM-generated text (like avoiding the em-dash).

2. They report an AUROC of .95, which means (roughly) that if you sampled a human-written and LLM-generated passage at random, the method would correctly rank them about 95% of the time.

3. The authors performed this analysis for multiple values of n, from 4 to 8.

4. Also relevant was their finding that, on average, LLMs produced more templates that were also identified in model training data than did humans.

5. The authors also validated this approach by testing it for other events that changed the frequency of certain words: for instance, the COVID pandemic led to (as you might expect) words like “pandemic” being used much more often than you’d expect simply by extrapolating pre-pandemic trends in their usage.

6. The authors conducted multiple studies, some of which contained texts generated by ChatGPT and some of which contained texts generated by Claude; the results were qualitatively similar in each study.

7. One annotator wrote:

"One pattern I’ve been noticing with AI, and I think I’ve stated this before, is the comparison of ‘it’s not just this, it’s this’ and I’m seeing it here, along with listings of specifically three ideas."

8. Thusly named for an analogous point made by Charles Taylor about religious choice in A Secular Age.

9. They used two different metrics here: Rouge-L, which is based on common sequences of words; and BERTScore, which compares the similarity of document embeddings in the BERT language model.

10. Once again, this doesn’t seem to me to be an in principle kind of thing. It seems conceivable that model designers could implement RLHF in such a way that this kind of homogenization did not occur, but I could be wrong. Janus also reports an interesting tale from the early days of OpenAI:

Another example of the behavior of overoptimized RLHF models was related to me anecdotally by Paul Christiano. It was something like this:

While Paul was at OpenAI, they accidentally overoptimized a GPT policy against a positive sentiment reward model. This policy evidently learned that wedding parties were the most positive thing that words can describe, because whatever prompt it was given, the completion would inevitably end up describing a wedding party.

In general, the transition into a wedding party was reasonable and semantically meaningful, although there was at least one observed instance where instead of transitioning continuously, the model ended the current story by generating a section break and began an unrelated story about a wedding party.

11. An open question here concerns the longevity of these effects, since they’re typically established in a laboratory setting. There is some evidence that structural priming can persist for as long as a week, though I don’t know how robust that effect is. It also may not matter that much if LLM usage is sufficiently pervasive: that is, if people use LLMs multiple times every day, they’re repeatedly “primed” by LLM linguistic styles.

12. I think it’s an interesting question how one would operationalize this kind of question in terms of clear, concrete predictions; if any readers are interested, let me know!
