GPT-4 (sometimes) captures the wisdom of the crowd

Many researchers rely on survey data. How could LLMs like GPT-4 change that?

Contemporary research on human behavior often involves asking groups of people (collectively called a sample) to respond to questions about some topic of interest. This research is used to inform academic theories, design new products, create marketing strategies, and train machine learning algorithms. The Internet has, of course, made all this much easier than it used to be: instead of calling survey participants on the phone—or mailing them a letter!—researchers can post a link online and collect hundreds or even thousands of responses in a matter of hours.

But as I’ve written before, there’s no free lunch. For one, survey participants have to be compensated in some way; this compensation is probably lower than it should be—see articles like this one for more in-depth reporting—but it’s a cost nonetheless. Perhaps more worryingly, much of this data appears to be increasingly artificial, i.e., generated by large language models (LLMs) like ChatGPT. And between the rapid explosion in LLM capabilities and their widespread availability, it’s become much, much harder to distinguish human-generated and LLM-generated responses.

This has led many researchers to ask: if LLM-generated responses are so humanlike, why don’t we just use those instead?

In this post, I’m going to tackle that question on a smaller scale, focusing on some empirical results from a recent preprint of mine. But I’ll also try to address some of the deeper issues at play:

What exactly is a “sample”, and why does it matter that it’s random and representative?

What would it mean for LLM-generated responses to be more correlated with the average human response than most individual humans’ responses?

How do LLMs fit into a theory of sampling—are they like individual participants, the aggregate of multiple participants, or something else altogether?

What are the dangers of relying exclusively on LLM-generated responses?

To start off, I’ll take a quick detour into the basics of human-subjects research.

What are “samples” and why do researchers collect them?

Researchers are usually interested in learning about a population. That “population” is really just whatever or whomever that researcher wants to know about: it could be as narrow as “current undergraduates at UC San Diego” or as broad as “all humans everywhere that have ever existed”. Moreover, researchers might want to know various things about that population, such as the average height, the distribution of food preferences, and the number of people who can read ancient Greek. Statisticians call these values—the numbers researchers want to know—the “population parameters”.

The problem is that unless your target population is very small, it’s virtually impossible to ask everybody what you want to know. Thus, researchers make a compromise: instead of recruiting everyone in the population, they recruit a smaller sample of people, which yields what statisticians call a “sample statistic”. The trade-off is that sample statistics are basically never identical to the population parameter: there’s always some amount of sampling error.

To give a simple illustration: suppose your target population contains 1000 people, and the average height of these 1000 people is 67 inches. But for one reason or another, you can only survey 50 of them. It’s very, very unlikely that the average height of this 50-person sample is exactly 67 inches. Most likely, it’s a little bit bigger (an overestimation) or a little bit smaller (an underestimation).

This is all a little discouraging, but there’s some good news. First, if you’re looking at something like the average, then as long as your sample meets a few key assumptions—i.e., it’s random and representative—your sample statistic is at the very least not “biased”: on average, it’s not more likely to overestimate or underestimate your population parameter. Second, and relatedly, the Central Limit Theorem (CLT) tells us that if you were to draw many such samples, the distribution of sample means would be approximately normal and centered on the population parameter (at least when each sample is reasonably large). That’s good news because it allows us to do all sorts of complicated statistics intended to make generalizations about the underlying population. And third: the bigger the sample, the smaller the sampling error. On average, a sample of 100 people is going to give you a better estimate of your population’s average height than a sample of 50 people. Something that surprises many newcomers to statistics is that—again, provided these assumptions are met—you can often get pretty precise estimates with relatively small sample sizes; more important than how many people you sample is how you sample them in the first place.
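To make those three points concrete, here is a minimal simulation sketch. The 1000-person population and the 67-inch average come from the example above; the 3-inch standard deviation, the number of repeated samples, and the function name `simulate_sampling` are assumptions chosen purely for illustration.

```python
import random
import statistics


def simulate_sampling(pop_size=1000, pop_mean=67.0, pop_sd=3.0,
                      sample_size=50, n_samples=2000, seed=0):
    """Draw many simple random samples and summarize their means."""
    rng = random.Random(seed)
    # Hypothetical population of heights (the spread is an assumption).
    population = [rng.gauss(pop_mean, pop_sd) for _ in range(pop_size)]
    true_mean = statistics.fmean(population)
    # Each sample is drawn without replacement, so every member of the
    # population is equally likely to be included.
    sample_means = [
        statistics.fmean(rng.sample(population, sample_size))
        for _ in range(n_samples)
    ]
    return {
        "true_mean": true_mean,
        "mean_of_sample_means": statistics.fmean(sample_means),
        "spread_of_sample_means": statistics.stdev(sample_means),
    }


small = simulate_sampling(sample_size=50)
large = simulate_sampling(sample_size=100)
print(small)
print(large)
```

If the reasoning above holds, the mean of the sample means should sit very close to the true mean in both runs (no bias), and the spread of the sample means should be smaller for samples of 100 than for samples of 50.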

Unfortunately, that’s where the bad news comes in. Foundational to the principle of a good sample is that it’s a random, representative sample of the population. “Random” means that any given member of that population is equally likely to be a part of that sample. “Representative” means that the sample resembles the population along key dimensions that might impinge on one’s research question. That includes things like race, gender, and socioeconomic status, but might also include dimensions like political affiliation and native language—figuring out what’s relevant is often a challenge unto itself.

These two criteria are important for making sure your research is externally valid—i.e., that the inferences you draw from a sample generalize to the population. The problem, as I’ve written before, is that those criteria are rarely met. Studies on human psychology and decision-making disproportionately draw from so-called “WEIRD” populations (Western, Educated, Industrialized, Rich, and Democratic), often focusing specifically on undergraduates at major research universities. Platforms like Amazon Mechanical Turk have helped somewhat—Mechanical Turk workers tend to be more representative of at least the United States population than college undergraduates—but raise problems of their own in that these workers are essentially professional survey takers.

These are the challenges facing modern human behavioral research. And as I mentioned at the beginning, they apply not just to academics but to anyone conducting market research or collecting training data for machine learning systems.

The reason I’m belaboring these points is that they’re critical background for thinking about how and where LLMs come into the picture.

Can LLMs augment psycholinguistic datasets?

I’m a psycholinguist by training—so naturally, the kinds of datasets I’m interested in contain things like human judgments about the meaning of different words or sentences. For example, a recently released dataset of iconicity judgments contains ratings of how much different words sound like what they mean (e.g., “buzz” is reasonably iconic). There are lots of datasets of this kind, some of which I’ve helped create, and collectively they’ve gone a long way towards helping psycholinguists build theories of the human mind.

I wanted to know to what extent these datasets could be augmented by LLMs. That is, how well would LLM-generated responses approximate human responses?

To test this question, I selected a number of pre-existing psycholinguistic datasets, each tapping into different dimensions of meaning. Some of these datasets contained judgments about how related or similar different words were; others contained judgments about how much a given word (e.g., “market”) related to different sensory modalities (e.g., smell vs. vision) in different contexts (e.g., a “fish market” vs. a “stock market”). I then prompted GPT-4 to generate judgments for each of the items in each dataset, using instructions virtually identical to those originally presented to human participants.

To illustrate how this works, let’s consider a simple example. Imagine that the LLM is presented with the following prompt:

How concrete is the word “table” in the phrase “wooden table”? Please rate concreteness on a scale from 1 (very abstract) to 5 (very concrete).

The LLM’s goal is to predict the next token, so it will assign probabilities to all the tokens in its vocabulary. If it’s a good LLM, numbers like “1” or “5” will be much more likely than random words like “desalinization”. And if it’s a really good LLM, “5” will be more likely than “1”, since “wooden table” describes a concrete concept. I sampled the most likely token, i.e., whichever word the LLM thought was most likely.
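As a rough sketch of that last step, picking the most likely token amounts to taking the highest-probability entry in the model’s next-token distribution. The probabilities below are invented for illustration (they are not real GPT-4 outputs), and `most_likely_token` is a hypothetical helper, not part of any API.

```python
def most_likely_token(token_probs):
    """Return the token with the highest probability."""
    return max(token_probs, key=token_probs.get)


# Made-up next-token probabilities for the "wooden table" prompt.
hypothetical_probs = {
    "5": 0.62,
    "4": 0.25,
    "3": 0.06,
    "1": 0.02,
    "desalinization": 0.00001,
}

rating = int(most_likely_token(hypothetical_probs))
print(rating)
```

In practice the distribution would come from the model itself; the point here is only that the rating is read off the single most probable token rather than drawn at random.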

After collecting all the ratings in this manner, I calculated the correlation between GPT-4’s ratings and the human ratings.

The results were pretty striking in some cases, with correlations over 0.8 (generally considered a “strong” correlation by most statisticians). That means that LLM-generated judgments approximated aggregate human judgments quite closely.

But one question that arises here is how that compares to individual humans. People sometimes disagree, obviously, so individual human ratings aren’t always perfectly aligned with each other. To measure this, I used a common approach called leave-one-out correlation. The basic premise is as follows. Let’s say there are 10 ratings for each word in a dataset (i.e., from 10 different humans). “Leave-one-out” means that I systematically remove each rater from the dataset, compute the average rating using the remaining 9 ratings, then calculate how correlated those new averages are with the rater I just removed. If I do this across all 10 raters, this gives us a measure of the average inter-rater agreement, i.e., between individual humans and the average response of multiple humans.1
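Here is a minimal sketch of that procedure, alongside the LLM-versus-human-average correlation described earlier. It assumes every rater rated every item and uses Pearson’s correlation; both are simplifying assumptions for illustration, and the preprint’s exact setup may differ. The toy ratings and the names `pearson` and `leave_one_out_agreement` are hypothetical.

```python
import statistics


def pearson(xs, ys):
    """Pearson correlation between two equal-length lists of numbers."""
    mx, my = statistics.fmean(xs), statistics.fmean(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    var_x = sum((x - mx) ** 2 for x in xs)
    var_y = sum((y - my) ** 2 for y in ys)
    return cov / (var_x * var_y) ** 0.5


def item_means(ratings):
    """Average rating per item, where ratings[r][i] is rater r on item i."""
    n_items = len(ratings[0])
    return [statistics.fmean([r[i] for r in ratings]) for i in range(n_items)]


def leave_one_out_agreement(ratings):
    """Mean correlation between each rater and the average of the others."""
    correlations = []
    for held_out in range(len(ratings)):
        others = [r for idx, r in enumerate(ratings) if idx != held_out]
        correlations.append(pearson(ratings[held_out], item_means(others)))
    return statistics.fmean(correlations)


# Toy data: 3 hypothetical raters, 5 items, ratings on a 1-5 scale.
human_ratings = [
    [5, 1, 4, 2, 3],
    [4, 2, 5, 1, 3],
    [5, 1, 3, 2, 4],
]
llm_ratings = [5, 1, 4, 2, 3]  # invented LLM ratings for the same items

human_agreement = leave_one_out_agreement(human_ratings)
llm_vs_average = pearson(llm_ratings, item_means(human_ratings))
print(human_agreement, llm_vs_average)
```

The two printed numbers are the quantities being compared: how well a typical individual tracks everyone else’s average, and how well the LLM tracks the overall human average.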

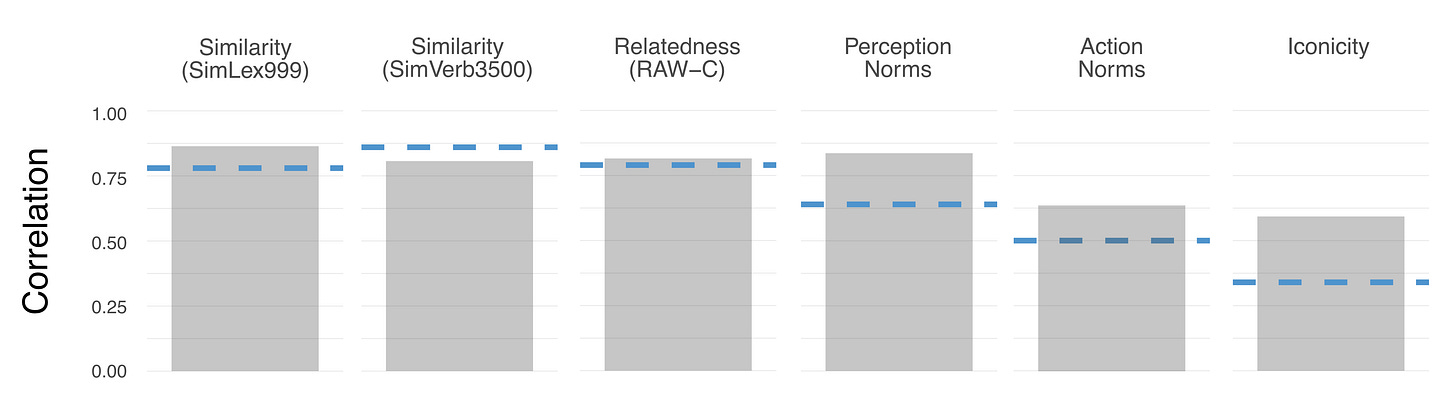

For the majority of the datasets I looked at, this measure of inter-rater agreement was actually lower than the correlation between LLM-generated responses and the average human rating. That is, LLM-generated ratings were more like the average ratings of multiple humans than any given human rating was. Those results are displayed in the figure below. Note that in all datasets except one, the gray bars are higher than the corresponding blue dashed line.

That should surprise or even shock us! Without any prompt engineering or fine-tuning, zero-shot prompting of GPT-4 yielded responses that approximated average human judgments better than many individual humans. It’s possible that even better performance could be achieved with a few well-chosen examples or a different prompt.

But these results might also seem a little confusing. Some might be tempted to ask the question: does this mean LLMs are somehow more “humanlike” than individual humans? I don’t think so—the average human judgment is not more human than any given human judgment!—but it is very impressive. Understanding how this happened might relate to the notion of the “wisdom of the crowd”.

Sampling and the “wisdom of the crowd”

Suppose 100 people are asked to guess how many balls are in a glass container. It’s very unlikely that any of those people will be correct, though certainly, some will be closer to the true value than others. What’s more interesting about this is that in general, the average of those 100 guesses tends to be considerably closer to the true value than the majority of the individual guesses. This is an example of what’s called the “wisdom of the crowd”.

The reason this works relates to our earlier discussion of sampling. Assuming those 100 guesses aren’t systematically biased in some way, then the number of people overestimating the true value will be about the same as the number of people underestimating it. Further, those errors are—on average—likely to be about the same in magnitude. Putting that all together, this means that the average of all those guesses will collapse across those errors (i.e., the errors “wash out”) and converge pretty close to the true value.
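A quick simulation sketch shows the errors washing out. It assumes guesses are unbiased and normally distributed around the true value; the true count of 500, the noise level, and the function name `crowd_vs_individuals` are all made up for illustration.

```python
import random
import statistics


def crowd_vs_individuals(true_value=500, noise_sd=150, n_guessers=100, seed=1):
    """Compare the crowd average's error with each individual's error."""
    rng = random.Random(seed)
    # Unbiased guesses: over- and underestimates are equally likely.
    guesses = [rng.gauss(true_value, noise_sd) for _ in range(n_guessers)]
    crowd_error = abs(statistics.fmean(guesses) - true_value)
    individual_errors = [abs(g - true_value) for g in guesses]
    # Share of individuals whose guess is further off than the crowd average.
    share_beaten = sum(e > crowd_error for e in individual_errors) / n_guessers
    return crowd_error, share_beaten


crowd_error, share_beaten = crowd_vs_individuals()
print(crowd_error, share_beaten)
```

Under these assumptions the crowd average should beat most individual guesses. If the guesses were systematically biased (say, everyone overestimates), averaging would not remove that bias, which is why the “no systematic bias” condition matters.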

In more recent years, there’s also been some work on the wisdom of the “inner crowd”. It turns out that in some circumstances, the average of multiple guesses from the same individual is also closer to the true value. This is generally taken as reflecting a “probabilistic” cognitive model, in which people don’t represent outcomes as discrete, binary events, but rather as probability distributions.

There are some really fascinating implications to the wisdom of the crowd—both outer and inner. At least when it comes to problems with a well-defined answer, it suggests that the aggregate of lots of opinions about that answer is genuinely informative about its true value. (This is the foundation of debates about how informative, exactly, stock market prices are about current and future events.)

It also relates directly to the role LLMs might play as survey-takers.

Are LLMs “the crowd”?

LLMs are trained on lots of data—much more than humans. GPT-3, for example, was trained on hundreds of billions of tokens, while human children encounter about 100 million words by age 10. That is, GPT-3 encounters over 1000x more words than any given human child.2

What does this mean about the ratings (e.g., concreteness, similarity) an LLM assigns to different words?

Those ratings are in some sense an aggregate of all the data an LLM has seen about that word, as well as similar words or similar contexts. That’s true for humans, too, of course. But the key difference is scale: an LLM is dealing with over 1000x more linguistic data than a human rater. From this perspective, an LLM’s guess represents a larger “sample”.

Perhaps it’s unsurprising, then, that on average, LLM ratings tend to be more correlated with the human average than most individual human ratings. From this perspective, LLMs capture the “wisdom of the crowd”: they’re the voice of many as opposed to the voice of one.

But I insist that it is surprising. Humans, after all, don’t solely encounter words. If a person is asked to rate the concreteness of “wooden table”, they don’t just think about all the times they’ve encountered the word “table”; they probably conjure up specific sensory associations—memories of tables they’ve sat at, tables they’ve spilled coffee on, tables over which they’ve enjoyed long chats with a friend. That’s data too, and it’s not data that most LLMs have access to. (Though that’s changing now, with the advent of more multimodal LLMs.)

Does that mean those other experiences are simply irrelevant or, at minimum, redundant with the kind of information LLMs encounter in language? It’s impossible to say definitively, but at least when it comes to ratings of a word’s concreteness, the results I’ve presented here suggest that language is mostly all you need.

What lies ahead: pitfalls and promises

Does this mean that behavioral researchers should eschew human behavioral data entirely?

It’s certainly tempting. LLMs are, after all, considerably cheaper (and often faster) than paying human participants. And if empirically equivalent (or at least highly correlated) results can be achieved, why bother with humans?

But right now, my answer is that we ought to proceed with caution—for a couple of reasons.

First, it’s simply premature. I’ve presented data from only seven psycholinguistic datasets. These results are compelling (in my opinion), but they’re by no means definitive. We don’t know whether LLMs would do quite so well for other kinds of behavioral surveys, or even other psycholinguistic variables. It’s also possible these results are an artifact of “data contamination”: perhaps the LLM was trained on the exact datasets it’s being tested on. If that’s true, then the fact that the LLM can regurgitate those ratings isn’t particularly impressive or surprising. (I’m relatively confident that at least some of the results are not due to data contamination, and the full manuscript explains why—but you can never be 100% certain.3) At minimum, we’d want to do a lot more testing before

Second, there are some thorny philosophical questions to work out. Towards the beginning of this post, I mentioned that samples should be random and representative if you want to generalize from that sample to the population of interest. It’s well-documented at this point that LLMs are not trained on a random, representative slice of the population. Most obviously, LLMs like GPT-4 are trained primarily (though not entirely) on English; but there are lots of languages in the world! Even within English, LLMs are trained on a biased sample of language users: there’s good evidence that Internet forums like Reddit skew heavily male (and young); sites like Twitter skew liberal (and also young). So these LLMs are disproportionately exposed to certain viewpoints or “voices”, while others are systematically excluded from the training data. Remember that when it comes to sampling, how you sample matters more than how much. That’s precisely the point made by Emily Bender and others in their 2021 paper: a dataset’s size does not guarantee its diversity or representativeness. Very recent work illustrates this point more directly: GPT is WEIRD.

Of course, readers may (correctly) point out that human samples aren’t exactly diverse or representative either. I’m not actually sure whether the samples used to create these psycholinguistic datasets are more or less representative than the linguistic data used to train LLMs; an empirical analysis of this would be really helpful for the field. But either way, I agree with this point, and I think it points towards exercising caution when generalizing from human samples as well.

A third concern, which relates to the second, is that excessive reliance on LLM-generated data could lead to a kind of “drift” over time, which we wouldn’t notice because we’re never double-checking that data against a human gold standard. This is roughly analogous to the argument I presented in an older post, where I suggested that “auto-complete for everything” might well terraform the very structure of language itself. This is very concerning to me, and again, suggests that we ought to be cautious: if we do integrate LLMs into our dataset creation process, we should (at least for now) compare LLM-generated responses against some kind of human benchmark to ensure that no drift is occurring.
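One way such a check might look in practice is sketched below: periodically score a batch of LLM ratings against human ratings for the same items and flag the batch if the correlation drops. The 0.8 threshold, the toy numbers, and the names `pearson` and `drift_report` are assumptions for illustration, not an established procedure.

```python
import statistics


def pearson(xs, ys):
    """Pearson correlation between two equal-length lists of numbers."""
    mx, my = statistics.fmean(xs), statistics.fmean(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    var_x = sum((x - mx) ** 2 for x in xs)
    var_y = sum((y - my) ** 2 for y in ys)
    return cov / (var_x * var_y) ** 0.5


def drift_report(llm_ratings, human_ratings, min_correlation=0.8):
    """Flag a batch of LLM ratings that no longer tracks the human benchmark."""
    r = pearson(llm_ratings, human_ratings)
    return {"correlation": r, "drift_suspected": r < min_correlation}


# Hypothetical mean human ratings for five benchmark items.
human_benchmark = [4.8, 1.3, 3.9, 2.2, 3.1]
llm_batch_a = [5, 1, 4, 2, 3]  # invented batch that tracks the benchmark
llm_batch_b = [3, 3, 2, 4, 3]  # invented batch that has drifted away

print(drift_report(llm_batch_a, human_benchmark))
print(drift_report(llm_batch_b, human_benchmark))
```

A correlation threshold is only one possible criterion; a shift in the mean or variance of the ratings could matter too, and the benchmark itself would need refreshing with new human data for the check to stay meaningful.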

All of which brings me to the potential promise of this approach. As I mentioned, it’s much cheaper to collect data from an LLM. And at least in many academic departments, money’s hard to come by. The money a researcher saves paying participants could be used to pay a graduate student or even a postdoctoral researcher; it could also be used to pay open access fees to make one’s research and data publicly available. This approach is also considerably faster; and if that speed doesn’t come at the cost of quality (that’s an important “if”), that means more research is getting done overall. Those are concrete, tangible goods.

If this is a path researchers end up going down—in psycholinguistics or in other fields—I think more theoretical and empirical work needs to be done delineating the boundary conditions of this approach. When is it appropriate? For which tasks are LLM-generated norms statistically biased? For which tasks are LLM-generated norms more correlated with the population parameter than individual human ratings? Right now, there’s a serious dearth of evidence on the issue one way or the other. My recent work is an attempt to provide some of that evidence, but I hope others follow suit.

The full paper also does a few other analyses, including a substitution analysis I personally think is very cool; if you’re interested, you can find the publicly available preprint here. The tl;dr is that replacing human-generated norms with LLM-generated norms in a statistical model doesn’t really change any important results.

The fact that GPT-3 needs so much data could be viewed as a limitation. And indeed, it is remarkable how much humans can do with such a relative paucity of input.

In brief: 1) several of the datasets were released after GPT-4’s alleged training data cutoff; and 2) for the newly published iconicity dataset, I asked whether GPT-4 was relatively more successful on rating the iconicity of words that existed in previously published iconicity datasets—it wasn’t.
